May 4, 2025

Building AI-Powered Search and RAG with PostgreSQL and Vector Embeddings

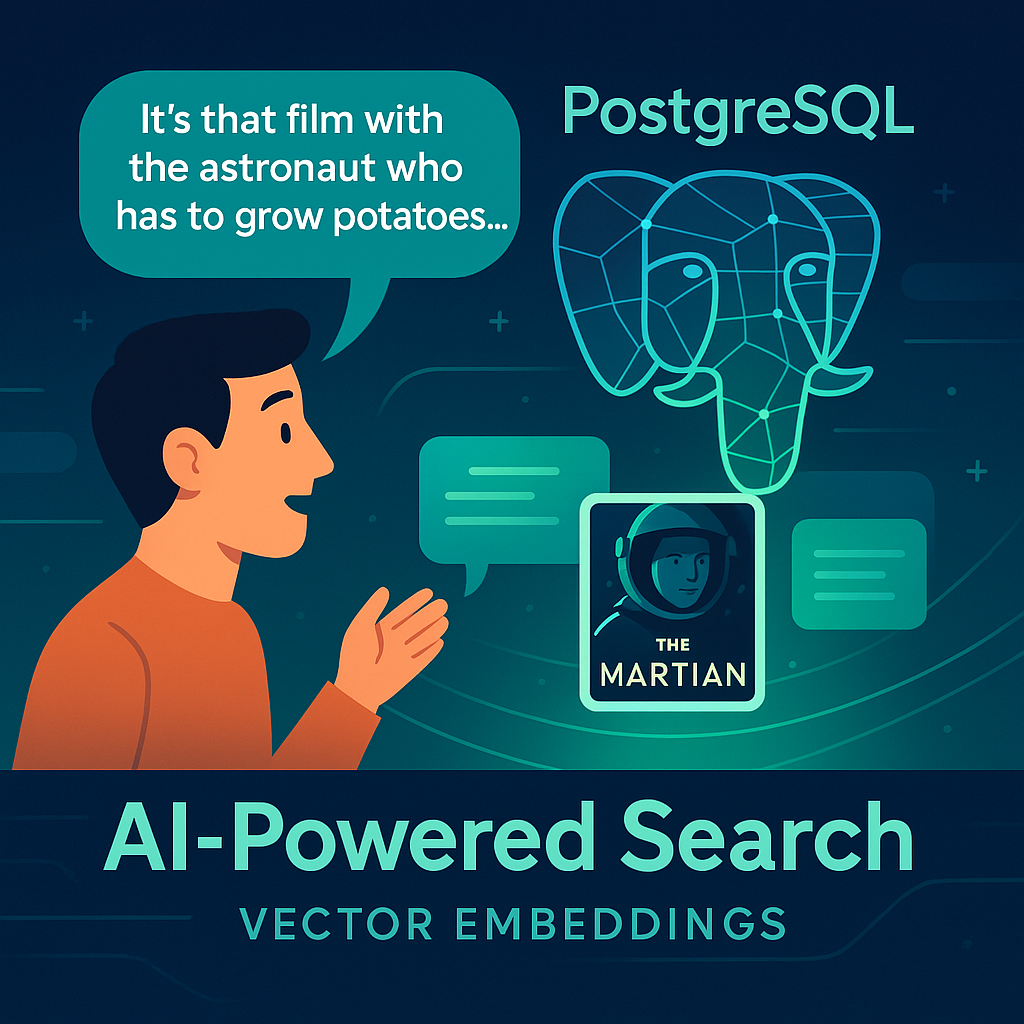

Remember the last time you tried describing a movie to a friend? “It’s that film with the astronaut who gets stranded on another planet and has to grow potatoes…” Your friend knew exactly what you meant, even though you never mentioned “The Martian.”

Humans understand meaning beyond exact keywords. But until recently, databases couldn’t do the same—they needed precise matches.

That’s changed. With PostgreSQL’s vector search capabilities, your database can now understand meaning, not just match text patterns.

This opens up a world of possibilities for developers building the next generation of intelligent applications.

The Problem with Traditional Search

Traditional database queries excel at finding exact matches: products with a specific category, documents containing certain keywords, or users in particular locations. But they fall short when dealing with meaning and similarity.

Consider these challenges:

- A user searches for “affordable laptops for coding” but your products are tagged as “budget-friendly computers for programming”

- You need to recommend articles similar to what a user just read, even when they use different terminology

- Your support chatbot needs to retrieve answers based on the meaning of a question, not just matching words

These scenarios demand semantic understanding—the ability to grasp meaning beyond literal text. Enter vector embeddings and PostgreSQL’s pgvector extension.

Vector Embeddings: Turning Meaning into Numbers

A vector embedding is a list of numbers that captures the semantic essence of text, images, or other data types. Think of it as converting meaning into mathematical coordinates in a high-dimensional space.

Here’s what makes embeddings powerful:

1.Semantic representation: Similar concepts have similar vectors, even with different words 2.Measurable similarity: You can calculate how close two embeddings are 3.Language-model powered: Generated by AI models trained on vast text corpora

For example, the sentences “I need a vacation” and “Looking for a holiday getaway” might use different words, but their embeddings would be close in vector space—like two points near each other on a map of meaning. Think of a text embedding as an idea index.

Think of a text embedding as an idea index.

Enter pgvector: PostgreSQL Becomes Vector-Ready

Until recently, implementing vector search required specialized vector databases or cloud services that added complexity, new APIs, and extra costs to your stack. The pgvector extension changes that by bringing vector operations directly into PostgreSQL.

With pgvector, you can:

- Store embeddings as a native VECTOR type

- Perform similarity searches using specialized operators

- Index vectors for blazing-fast retrieval at scale

- Combine vector search with traditional queries

This means you can build AI-powered search and recommendation features right inside your existing PostgreSQL database—using tools and SQL you already know.

Getting Started with pgvector

Let’s walk through the basics of setting up and using pgvector in your PostgreSQL database.

1. Install the Extension

CREATE EXTENSION IF NOT EXISTS vector;

Most major cloud providers (AWS RDS/Aurora, Google Cloud SQL/AlloyDB, Azure Database) now offer pgvector as a managed extension.

2. Create a Table with a Vector Column

CREATE TABLE documents (

id SERIAL PRIMARY KEY,

content TEXT,

embedding VECTOR(1536) -- Adjust dimension to match your embedding model

);

The VECTOR(1536) column is designed to store embeddings from OpenAI’s text-embedding-3-small model, which outputs 1536-dimensional vectors. Different models produce vectors of different dimensions, so you’ll need to adjust this value accordingly. (You can use APIs from OpenAI, Cohere, Hugging Face, Google Vertex AI, or open-source models to create embeddings, then load them into PostgreSQL.).Pro tip: For quick experiments, you can use smaller dimensions (like 3 or 10), but production applications should match the exact dimension of your embedding model.

3. Generate and Insert Embeddings

Now let’s use Python to generate embeddings and insert them into PostgreSQL:

import openai

import psycopg

client = openai.OpenAI(api_key="your-api-key")

# Generate an embedding

response = client.embeddings.create(

model="text-embedding-3-small",

input="A suspenseful sci-fi adventure with a twist ending."

)

embedding = response.data[0].embedding # List of 384 floats

# Insert into PostgreSQL

conn = psycopg.connect("dbname=vector_demo user=postgres")

with conn.cursor() as cur:

cur.execute(

"INSERT INTO documents (content, embedding) VALUES (%s, %s)",

("A suspenseful sci-fi adventure with a twist ending.", embedding)

)

conn.commit()

Modern PostgreSQL drivers like psycopg3 automatically convert Python lists to PostgreSQL’s VECTOR type, making the integration seamless.Size does not always matter - note on newer embedding models: text-embedding-3-large is more efficient than older models like text-embedding-ada-002. For example, on the MTEB benchmark, a text-embedding-3-large embedding can be shortened to a size of 256 while still outperforming an unshortened text-embedding-ada-002 embedding with a size of 1536. This means better performance with significantly smaller vector dimensions! Smaller vector dimension require less compute and memory constraints to the database server too. Keep this in mind.

4. Search for Similar Documents

Once you’ve populated your database with embeddings, you can search for similar content using vector similarity operators:

SELECT id, content

FROM documents

ORDER BY embedding <-> '[0.12, -0.44, ...]' -- Replace with your query vector

LIMIT 5;

The <-> operator computes the Euclidean distance between vectors (smaller distance = more similar). PostgreSQL will return the five documents whose embeddings are closest to your query vector.

Understanding Vector Indexes

As your data grows, scanning every vector becomes impractical. Vector indexes solve this performance challenge, enabling similarity search at scale. PostgreSQL’s pgvector offers two primary index types:HNSW (Hierarchical Navigable Small World):

-

A graph-based index structure where nodes represent vectors and edges connect similar vectors

-

Provides efficient navigation to nearest neighbors through a multi-layer structure

-

Offers excellent query performance without requiring a training step

-

Well-suited for datasets up to tens of millions of vectorsIVFFlat (Inverted File with Flat quantization):

-

Partitions vectors into clusters (lists) using k-means

-

Searches only the most promising clusters during queries

-

Requires a training step (ANALYZE) to build the clusters

-

Designed for very large datasets (hundreds of millions of vectors)

Let’s see how to create these indexes:

Creating Vector Indexes for Fast Searches

Building the right index is crucial for performance as your vector database grows. Let’s explore how to set up and tune both HNSW and IVFFlat indexes.

HNSW Index

CREATE INDEX ON documents USING hnsw (embedding vector_l2_ops);

This creates an HNSW index optimized for L2 (Euclidean) distance. For cosine similarity, use vector_cosine_ops instead.

HNSW is the recommended choice for most applications due to its:

- High recall (accuracy) out of the box

- No training requirements

- Good performance on diverse datasets

- Support for parallel query execution

IVFFlat Index

CREATE INDEX ON documents USING ivfflat (embedding vector_l2_ops) WITH (lists = 100);

ANALYZE documents; -- Critical training step!

IVFFlat works by clustering your vectors. The lists parameter controls how many clusters to create—more lists mean finer-grained clustering but require more memory. The ANALYZE command trains the index on your data, a crucial step many developers forget!

IVFFlat is useful when:

- Your dataset is extremely large (100M+ vectors)

- You can accept slightly lower recall for faster queries

- You want to tune the speed/accuracy trade-off

Analogy: Imagine your database as a vast library. HNSW is like a librarian with shortcuts between shelves—she finds the right book quickly and rarely misses. IVFFlat divides the library into districts (clusters), so you search the most promising district instead of the entire collection. This is faster on massive libraries, but may occasionally overlook a close match.

Balancing Recall and Performance

Vector indexes use approximate nearest neighbor (ANN) algorithms that trade perfect accuracy for speed. You can adjust this balance using index and query parameters:

For HNSW:

SET hnsw.ef_search = 100; -- Higher values increase accuracy but slow queries

For IVFFlat:

SET ivfflat.probes = 10; -- More probes means searching more clusters

In production applications, you’ll want to tune these parameters based on benchmarks with your actual data and query patterns.

Building a Hybrid Search System

Real-world search isn’t just about finding similar vectors—it often combines semantic similarity with metadata filters and traditional text search. This hybrid approach gives users extremely relevant results.

For example, to find recent sci-fi articles most similar to a specific concept:

SELECT id, content

FROM documents

WHERE

category = 'sci-fi' AND

published_date > '2023-01-01' AND

to_tsvector('english', content) @@ plainto_tsquery('space')

ORDER BY embedding <-> '[0.12, -0.44, ...]' -- Your query vector

LIMIT 10;

This powerful hybrid query:

- Filters by category (sci-fi)

- Ensures recency (published this year)

- Requires a keyword match (space)

- Ranks results by semantic similarity to your query vector

The result? Extremely relevant content that meets all criteria while capturing the meaning behind the user’s query.

Real-World Applications

Let’s explore some practical applications where vector search transforms user experiences:

1. Smart Product Search

Imagine an e-commerce site where users can search: “lightweight laptop good for video editing” and get relevant results—even if those exact words aren’t in the product descriptions.

-- First in the application code:

query_embedding = get_embedding("lightweight laptop good for video editing")

-- Then in PostgreSQL:

SELECT id, name, price, description

FROM products

WHERE category = 'computers' AND in_stock = true

ORDER BY embedding <-> query_embedding

LIMIT 10;

This returns products semantically similar to the query, filtered to in-stock computers.

2. Knowledge Base for Support Chatbots

For customer support chatbots, vector search enables better retrieval of relevant answers:

-- In application code:

question_embedding = get_embedding("How do I reset my password?")

-- In PostgreSQL:

SELECT id, question, answer

FROM knowledge_base

ORDER BY embedding <-> question_embedding

LIMIT 3;

This retrieves the most semantically similar support articles, improving the chatbot’s ability to give relevant responses.

3. Content Recommendations

For news sites or content platforms, recommend articles similar to what a user is currently reading:

-- Current article embedding:

article_embedding = get_embedding(current_article.content)

-- Find similar articles:

SELECT id, title, summary

FROM articles

WHERE

id != current_article.id AND

published_date > (CURRENT_DATE - INTERVAL '30 days')

ORDER BY embedding <-> article_embedding

LIMIT 5;

This provides content recommendations based on semantic similarity, not just shared tags or categories.

Optimizing for Production

As you move from experimentation to production, keep these best practices in mind:

1. Choose the Right Embedding Model

OpenAI’s text-embedding-3-small (1536 dimensions) balances quality and efficiency for most applications. For maximum performance, text-embedding-3-large (3000+ dimensions) provides state-of-the-art results but with higher API costs.

| Model | ~ Pages per dollar | Performance on MTEB eval | Max input |

|---|---|---|---|

| text-embedding-3-small | 62,500 | 62.3% | 8191 |

| text-embedding-3-large | 9,615 | 64.6% | 8191 |

| text-embedding-ada-002 | 12,500 | 61.0% | 8191 |

| local embedding modes | “free” | varies | varies |

2. Batch Process Embeddings

Generate embeddings in batches to minimize API calls:

# Batch embedding generation (more efficient)

documents = ["Document 1 content", "Document 2 content", "Document 3 content"]

response = client.embeddings.create(

model="text-embedding-3-small",

input=documents

)

embeddings = [item.embedding for item in response.data]

3. Bulk Insert for Performance

Use PostgreSQL’s COPY command or batch inserts for efficient loading:

# Prepare data

data = [(docs[i], embeddings[i]) for i in range(len(docs))]

# Bulk insert

with conn.cursor() as cur:

cur.executemany(

"INSERT INTO documents (content, embedding) VALUES (%s, %s)",

data

)

conn.commit()

4. Monitor and Verify Index Usage

Use EXPLAIN to confirm your queries are using vector indexes properly:

EXPLAIN SELECT id, content

FROM documents

ORDER BY embedding <-> '[0.12, -0.44, ...]'

LIMIT 5;

Look for “Index Scan using documents_embedding_idx” in the output to confirm the index is being used. If you see “Seq Scan” instead, your index isn’t being utilized.

5. Consider Partitioning for Very Large Tables

For massive collections (100M+ vectors), consider partitioning your table to improve maintenance and query performance:

CREATE TABLE documents (

id SERIAL,

category TEXT,

content TEXT,

embedding VECTOR(384)

) PARTITION BY LIST (category);

CREATE TABLE documents_tech PARTITION OF documents FOR VALUES IN ('technology');

CREATE TABLE documents_health PARTITION OF documents FOR VALUES IN ('health');

-- Create more partitions as needed

The Future of Vector Search in PostgreSQL

The PostgreSQL ecosystem continues to evolve with each pgvector release bringing new features:

- Improved ANN algorithms and index structures

- Support for more distance metrics and vector types

- Better parallelization and scaling capabilities

- Enhanced query planner optimizations for hybrid searches

These ongoing improvements make PostgreSQL an increasingly powerful platform for AI-enhanced applications.

Business Applications: Recommendations, Semantic Search, and BeyondVector similarity and hybrid search patterns power many of today’s most engaging digital experiences providing intelligent search. Here are a few real-world examples:

-**Product recommendations:**Suggest items that are semantically similar to what a user has viewed or described, even if the terms or categories differ. -**Semantic search:**Surface articles or help docs that match the intent behind a user’s question, not just the keywords they typed. -**Conversational AI & RAG:**Retrieve contextually relevant responses for chatbots and assistants by blending user queries, conversation history, and metadata. Retrieval-augmented generation (RAG) pipelines use vector search to pull in relevant data for AI models. -**Personalization:**Tailor content, offers, or support based on user behavior and semantic similarity to previous actions. -**In-database embedding generation:**With modern extensions and external model integrations, you can now generate embeddings directly in PostgreSQL, making RAG and AI-powered features even more seamless.

By combining vector search with classic SQL features, PostgreSQL becomes a platform for features that once required specialized infrastructure. This means faster innovation, simpler architectures, and smarter user experiences.

For advanced patterns—including RAG pipelines, hybrid ranking strategies, and in-database embedding generation check out this article (Stop the Hallucinations: Hybrid Retrieval with BM25, pgvector, embedding rerank, LLM Rubric Rerank & HyDE). (I have been at this a while as this article from 2023 shows.)

Conclusion: Making Your Database AI-Ready

Vector search transforms PostgreSQL from a traditional relational database into a powerful engine for AI-enhanced applications. With pgvector, you can:

- Store and search by meaning, not just keywords

- Build semantic search, recommendations, and AI-powered features

- Keep everything in your familiar PostgreSQL environment

- Combine vector search with your existing queries and filters

- Scale to millions of embeddings with proper indexing

The ability to find data by meaning rather than exact matching is revolutionizing how we build search, discovery, and recommendation systems. Best of all, you can implement these advanced capabilities using the PostgreSQL database you already know and trust.

As you embark on your vector search journey, remember that the real power comes from combining traditional database strengths with these new semantic capabilities. Your PostgreSQL database isn’t just storing data anymore—it’s understanding it.

Have you implemented vector search in your applications? What challenges or insights have you discovered? Share your experiences in the comments below.

About the Author

Rick Hightower is a seasoned software architect and technology leader specializing in distributed systems, database optimization, and AI integration. With extensive experience in enterprise software development, Rick has helped numerous organizations implement cutting-edge solutions using PostgreSQL and vector embeddings.

As a frequent contributor to technical publications and open-source projects, Rick is passionate about making complex technologies accessible to developers of all skill levels. When not writing about or implementing database solutions, he can be found mentoring junior developers and speaking at technology conferences.

Connect with Rick on LinkedIn or follow his technical blog for more insights into database optimization and AI integration.

TweetApache Spark Training

Kafka Tutorial

Akka Consulting

Cassandra Training

AWS Cassandra Database Support

Kafka Support Pricing

Cassandra Database Support Pricing

Non-stop Cassandra

Watchdog

Advantages of using Cloudurable™

Cassandra Consulting

Cloudurable™| Guide to AWS Cassandra Deploy

Cloudurable™| AWS Cassandra Guidelines and Notes

Free guide to deploying Cassandra on AWS

Kafka Training

Kafka Consulting

DynamoDB Training

DynamoDB Consulting

Kinesis Training

Kinesis Consulting

Kafka Tutorial PDF

Kubernetes Security Training

Redis Consulting

Redis Training

ElasticSearch / ELK Consulting

ElasticSearch Training

InfluxDB/TICK Training TICK Consulting