January 1, 2024

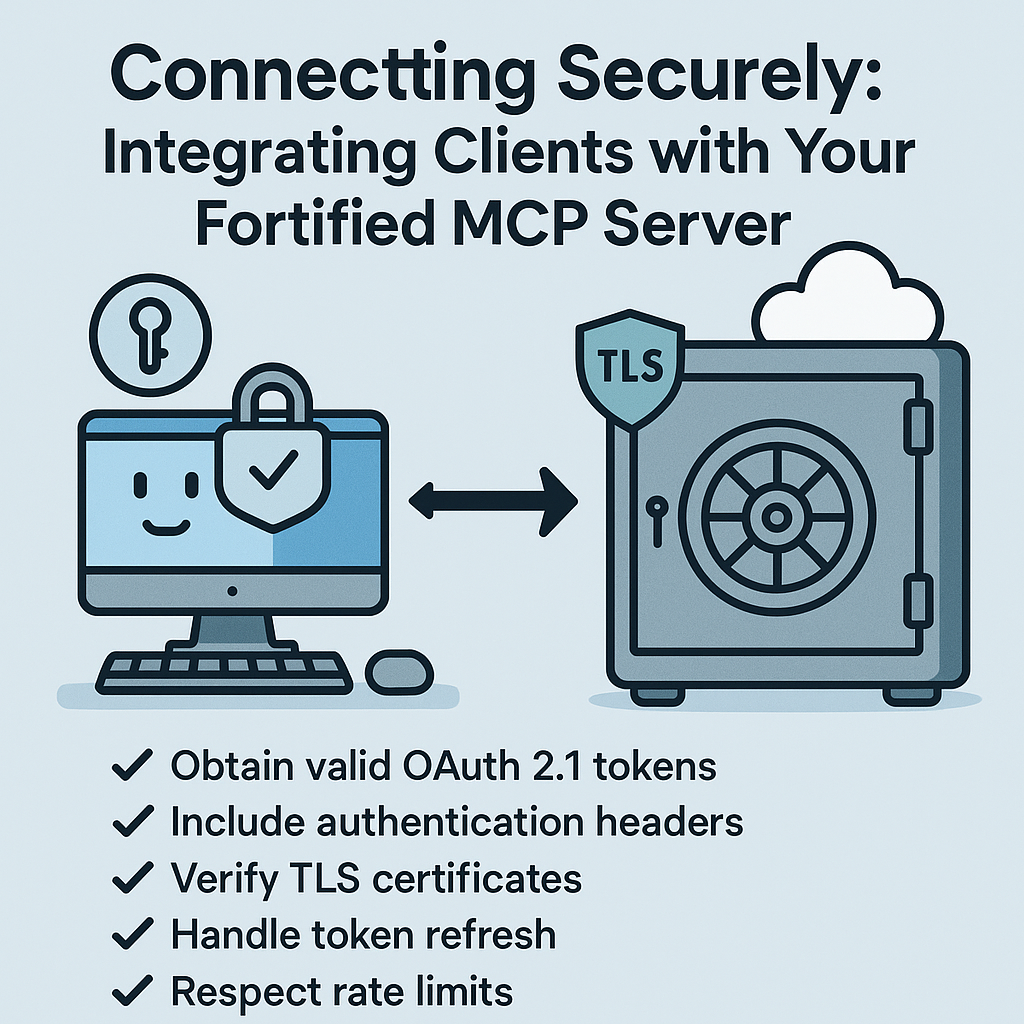

Securing MCP: From Vulnerable to Fortified — Building Secure HTTP-based AI Integrations

In a world where data breaches are becoming the norm, securing your HTTP-based AI integrations is not just a choice—it’s a necessity! Join us as we delve into the transformative journey of fortifying your Model Context Protocol (MCP) servers. Discover real-world strategies that will turn your vulnerable systems into impenetrable fortresses against lurking cyber threats. Are you ready to elevate your AI game and protect your innovations? Dive into our comprehensive guide now!

**Transform Your AI Integrations: From Vulnerable to Fortified!** In an age where security breaches are rampant, deploying your Model Context Protocol (MCP) server without robust protection is like leaving your front door wide open.


Discover how to build a secure HTTP-based AI integration that not only safeguards your data but also empowers your applications.

Learn about real-world security patterns, bulletproof authentication, and essential tools to fortify your MCP server against lurking threats!

Dive into this comprehensive guide and make certain your AI systems are secure as they scale!


Imagine leaving your house with all doors and windows wide open, valuables in plain sight, and a sign saying “Come on in!” That’s basically what many developers do when deploying Model Context Protocol (MCP) servers without proper security. As MCP adoption explodes in 2025, the rush to connect AI systems to external tools has created a perfect storm of security vulnerabilities. But here’s the good news: securing your MCP implementation doesn’t require a PhD in cryptography — it just needs the right approach.

In this guide, we’ll transform your MCP server from an open invitation to hackers into a fortified digital fortress. We’ll explore real-world security patterns, implement bulletproof authentication, and show you how to protect your AI integrations from the threats lurking in production environments. By the end, you’ll have a complete security toolkit for building MCP servers that are both powerful and protected.

This article builds upon our previous MCP tutorial where we created a FastMCP server and integrated it with LangChain, OpenAI Chat Completion, Anthropic Native, LiteLLM, and DSPy. While all these tools support MCP, the previous article focused on local stdio connections. However, deploying an AI system to production requires HTTP connectivity along with proper authentication and encryption.

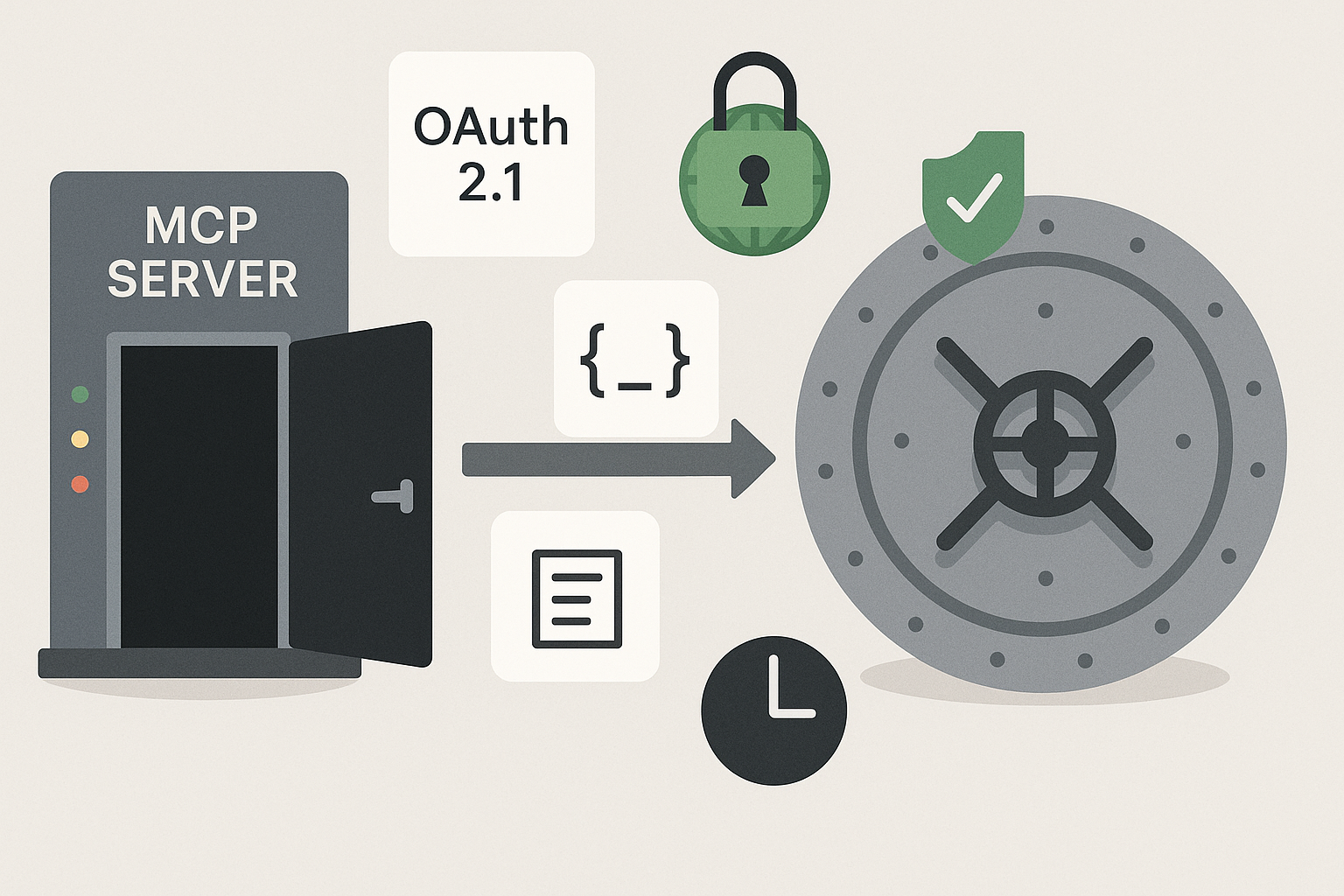

This article demonstrates how to create a hardened and secure MCP server. We implemented OAuth 2.1 using our own vendor-neutral test server. The MCP server integrates with LangChain, DSPy, native OpenAI Chat Completion, and native Anthropic. For security, clients authenticate using OAuth, communicate over TLS transport, and validate JWT token origins. We also explore additional security fundamentals needed for a robust MCP server. This article comes with a companion GitHub repo, mcp_security, where the examples live, and a Wiki that documents other aspects of the examples that just won't fit into a single article.

The Security Nightmare That Keeps Developers Awake

Before MCP, integrating AI with external systems was complex enough. Now, as we expose these integrations over HTTP, we've inherited every web security vulnerability known to humanity, plus some new ones unique to AI systems. Recent security audits reveal a shocking statistic: 43% of MCP servers in production have critical command injection vulnerabilities. That's nearly half of all deployments sitting vulnerable to attack.

Picture this scenario: You’ve built a brilliant customer service MCP server that queries databases, creates tickets, and processes payments. Without proper security, an attacker could manipulate your AI to:

- Extract your entire customer database through crafted prompts

- Execute arbitrary commands on your server

- Hijack user sessions and impersonate legitimate users

- Launch denial-of-service attacks that drain your resources

- Inject malicious responses that corrupt your AI’s behavior

The transition from local MCP deployments to HTTP-based production systems introduces what security experts call an “attack surface explosion.” Every endpoint, parameter, and connection becomes a potential entry point for malicious actors. Additionally, when deploying an MCP Server into production for a custom AI solution—which I’m currently doing with a couple of projects—these security considerations become even more critical.

Understanding the Threat Landscape: What Makes MCP Different

MCP's unique architecture creates security challenges that traditional web applications don't face. When you combine AI's unpredictability with HTTP's openness, you get a cocktail of vulnerabilities that require special attention.

**The AI Factor** makes MCP security particularly challenging. Unlike traditional APIs with predictable inputs and outputs, MCP servers must handle dynamic tool invocations from AI models that might be influenced by clever prompt engineering. An attacker doesn't need to hack your server directly: they just need to trick your AI into doing it for them.

**The Tool Execution Problem** represents another unique challenge. MCP servers execute functions based on AI decisions, creating a new class of confused deputy attacks where the server can't distinguish between legitimate AI requests and malicious manipulations. Without proper validation, your helpful AI assistant becomes an unwitting accomplice to security breaches.

**The Session State Challenge** compounds these issues. MCP's Streamable HTTP transport maintains stateful sessions across multiple requests, creating opportunities for session hijacking and replay attacks that persist longer than traditional stateless API calls.

Building Your Security Foundation: The Four Pillars

Just as a fortress needs walls, gates, guards, and surveillance, your MCP server needs four fundamental security pillars to stay protected.

Pillar 1: Authentication and Authorization — Your Digital Identity Check

Modern MCP security relies on OAuth 2.1 with PKCE (Proof Key for Code Exchange). Since the March 2025 revision of the MCP specification, OAuth 2.1 is the standard authorization framework for HTTP-based MCP servers, and MCP clients must implement PKCE. PKCE works like a secure handshake: it proves that the client redeeming an authorization code is the same one that requested it, even if the code itself is intercepted.

OAuth 2.1, still an IETF draft at the time of writing, is the latest evolution of the OAuth framework; it consolidates the fixes for security vulnerabilities found in OAuth 2.0 into a single specification. Making PKCE (originally defined in RFC 7636) mandatory for the authorization code flow is a crucial security enhancement: it prevents authorization code interception attacks by requiring clients to prove they're the same application that initiated the authorization request.

Major cloud providers and identity platforms that support PKCE include the following (the depth of their OAuth 2.1 alignment varies, since the specification is still a draft):

- AWS Cognito - Full OAuth 2.1 support with PKCE requirement for public clients

- Auth0 - Native implementation of OAuth 2.1 with enhanced security features

- Okta - Complete OAuth 2.1 stack with PKCE enforcement

- Microsoft Azure AD - OAuth 2.1 compliance with PKCE support for all client types

- Google Cloud Identity Platform - OAuth 2.1 implementation with mandatory PKCE for mobile apps

- Facebook - OAuth 2.1 support with enhanced PKCE implementation for web and mobile apps

- GitHub - Full OAuth 2.1 compliance with mandatory PKCE for public clients

- LinkedIn - OAuth 2.1 integration with PKCE requirement for all client types

The key advantage of OAuth 2.1 with PKCE is its ability to secure both public clients (like mobile apps and single-page applications) and confidential clients (server-side applications) using the same robust security model. This uniformity simplifies implementation while maintaining strong security standards.

Pillar 2: Transport Security — Your Encrypted Highway

Think of TLS (Transport Layer Security) as an armored car transporting your data. Without it, all your information travels exposed, visible to anyone watching the network. For MCP servers, TLS 1.2 is the absolute minimum, and TLS 1.3 is strongly recommended.

TLS (Transport Layer Security) secures data in transit by creating an encrypted connection through a handshake process where both parties:

- Verify identities with digital certificates

- Choose encryption algorithms

- Exchange keys securely

This encrypted tunnel keeps data confidential and tamper-proof, protecting MCP servers from eavesdropping and man-in-the-middle attacks.
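Our example terminates TLS in nginx, but the same policy can be expressed directly in Python when a service terminates TLS itself. The sketch below uses the standard ssl module to build server and client contexts that refuse anything older than TLS 1.2 while still negotiating TLS 1.3 when both peers support it. The certificate and CA file arguments are placeholders, not paths from the repo.

```python
import ssl
from typing import Optional


def make_server_tls_context(certfile: Optional[str] = None,
                            keyfile: Optional[str] = None) -> ssl.SSLContext:
    """Server-side context with a TLS 1.2 floor (TLS 1.3 is used when available)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if certfile and keyfile:
        # Placeholder paths: point these at your real certificate and private key
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx


def make_client_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client-side context that verifies the server certificate and hostname."""
    ctx = ssl.create_default_context(cafile=cafile)  # verification is on by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

A client context like this can be passed to urllib.request.urlopen(..., context=ctx). Keeping certificate and hostname verification enabled is what actually defeats man-in-the-middle attacks; encryption alone does not.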

Pillar 3: Input Validation — Your Security Scanner

Every input to your MCP server should be treated as potentially malicious. Command injection vulnerabilities plague nearly half of all MCP implementations because developers place too much trust in AI-generated inputs. Our example counters this with Pydantic v2 validation models and Bleach sanitization.
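The companion repo does this with Pydantic v2 and Bleach. As a dependency-free illustration of the same idea, the sketch below validates a ticket request at construction time with a standard-library dataclass: it rejects malformed IDs and suspicious characters outright and escapes HTML in free text. The field names mirror the SecureTicketRequest class diagram shown later in this article, but the specific rules (the CUST-prefixed ID format, the priority values, the length limits, the character blocklist) are assumptions, and html.escape is a much cruder tool than Bleach.

```python
import html
import re
from dataclasses import dataclass

# Hypothetical rules for illustration; the repo's Pydantic models may differ
CUSTOMER_ID_PATTERN = re.compile(r"CUST\d{1,10}")
ALLOWED_PRIORITIES = frozenset({"low", "normal", "high", "urgent"})
# Shell metacharacters and SQL comment markers often seen in injection payloads
SUSPICIOUS = re.compile(r"[;&|`$<>]|--")


@dataclass(frozen=True)
class TicketRequest:
    """Stdlib stand-in for a Pydantic model: validate on construction, reject bad input."""

    customer_id: str
    subject: str
    description: str
    priority: str = "normal"

    def __post_init__(self) -> None:
        if not CUSTOMER_ID_PATTERN.fullmatch(self.customer_id):
            raise ValueError("invalid customer_id format")
        if self.priority not in ALLOWED_PRIORITIES:
            raise ValueError(f"priority must be one of {sorted(ALLOWED_PRIORITIES)}")
        if not 1 <= len(self.subject) <= 200:
            raise ValueError("subject must be 1-200 characters")
        if SUSPICIOUS.search(self.subject):
            raise ValueError("subject contains disallowed characters")
        if len(self.description) > 2000:
            raise ValueError("description exceeds 2000 characters")
        # Escape HTML in free text (a crude stand-in for Bleach); frozen
        # dataclasses need object.__setattr__ to replace a field here
        object.__setattr__(self, "description", html.escape(self.description))
```

Rejecting input that does not match an expected shape (an allowlist, like the ID pattern) is generally safer than trying to enumerate every dangerous character. The blocklist here is only a second line of defense.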

Pillar 4: Rate Limiting — Your Traffic Controller

AI operations consume significant resources, and attackers exploit this vulnerability. Without rate limiting, a malicious actor can quickly drain your computing power and budget.

Rate limiting is essential for protecting your API resources and maintaining service quality. Major cloud providers offer built-in rate limiting services:

- AWS API Gateway - Offers throttling and usage plans

- Google Cloud Armor - Provides rate limiting and DDoS protection

- Azure API Management - Includes flexible rate limiting policies

Popular open-source rate limiting tools include:

- Redis-based limiters (Redis-cell, RedisTimeSeries)

- HAProxy - Enterprise-grade TCP/HTTP rate limiting

- Nginx - request rate limiting via the open-source limit_req module; Nginx Plus, the commercial version, adds more advanced features

- Kong API Gateway - Open-source API gateway with rate limiting plugins
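Whichever tool enforces it, the core algorithm is often a sliding window. Here is a minimal single-process sketch in Python. The class and method names are hypothetical; this is not the repo's RateLimiter, which also tracks token quotas and can use Redis. The injectable clock makes the behavior easy to test.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each user."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        # Per-user timestamps of requests that were allowed
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, user_id: str) -> bool:
        now = self.clock()
        hits = self._hits[user_id]
        # Drop timestamps that have slid out of the window
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over quota: the caller should answer HTTP 429
        hits.append(now)
        return True
```

Because the timestamps live in process memory, each worker keeps its own count. Sharing limits across replicas needs an external store such as Redis; a common pattern keeps per-user timestamps in a sorted set and trims old entries on every request.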

How the example implements the pillars

Here’s how we implement each security pillar in our example MCP server:

- **Authentication with a demo OAuth 2.1 server:** We've built a complete OAuth 2.1 server with PKCE support, handling client credentials and token generation using industry-standard JWT tokens. This is just for testing and demonstrating the concepts.

- **Transport Security:** Our nginx configuration provides TLS 1.2/1.3 termination with proper cipher selection, OCSP stapling, and security headers for maximum protection.

- **Input Validation:** We use Pydantic v2 models with custom validators and Bleach sanitization to prevent injection attacks and verify data integrity.

- **Rate Limiting:** A hybrid rate limiter combines in-memory tracking with Redis fallback to protect against resource exhaustion and DoS attacks.

Example MCP Server

We build an example Model Context Protocol (MCP) demo server with FastMCP and use it to show how to create a production-grade, HTTPS-secured, OAuth-protected integration hub for AI agents.

We will cover:

- an OAuth 2.1 authorization server with PKCE (src/oauth_server.py) for issuing RS256-signed JWTs;

- a secure FastMCP server (src/main.py) that verifies those JWTs, enforces scope-based authorization, validates every input with Pydantic, and exposes three demo business tools (customer lookup, ticket creation, account value calculation);

- rate-limiting, security logging and monitoring helpers (src/security);

- example AI clients (OpenAI, Claude, LangChain, DSPy, LiteLLM);

- dev + prod run-books (Taskfile, Docker, nginx TLS proxy, mkcert helpers).

The project's goal is to show **“from vulnerable to fortified”** techniques for anyone embedding AI tool-calling behind HTTP.

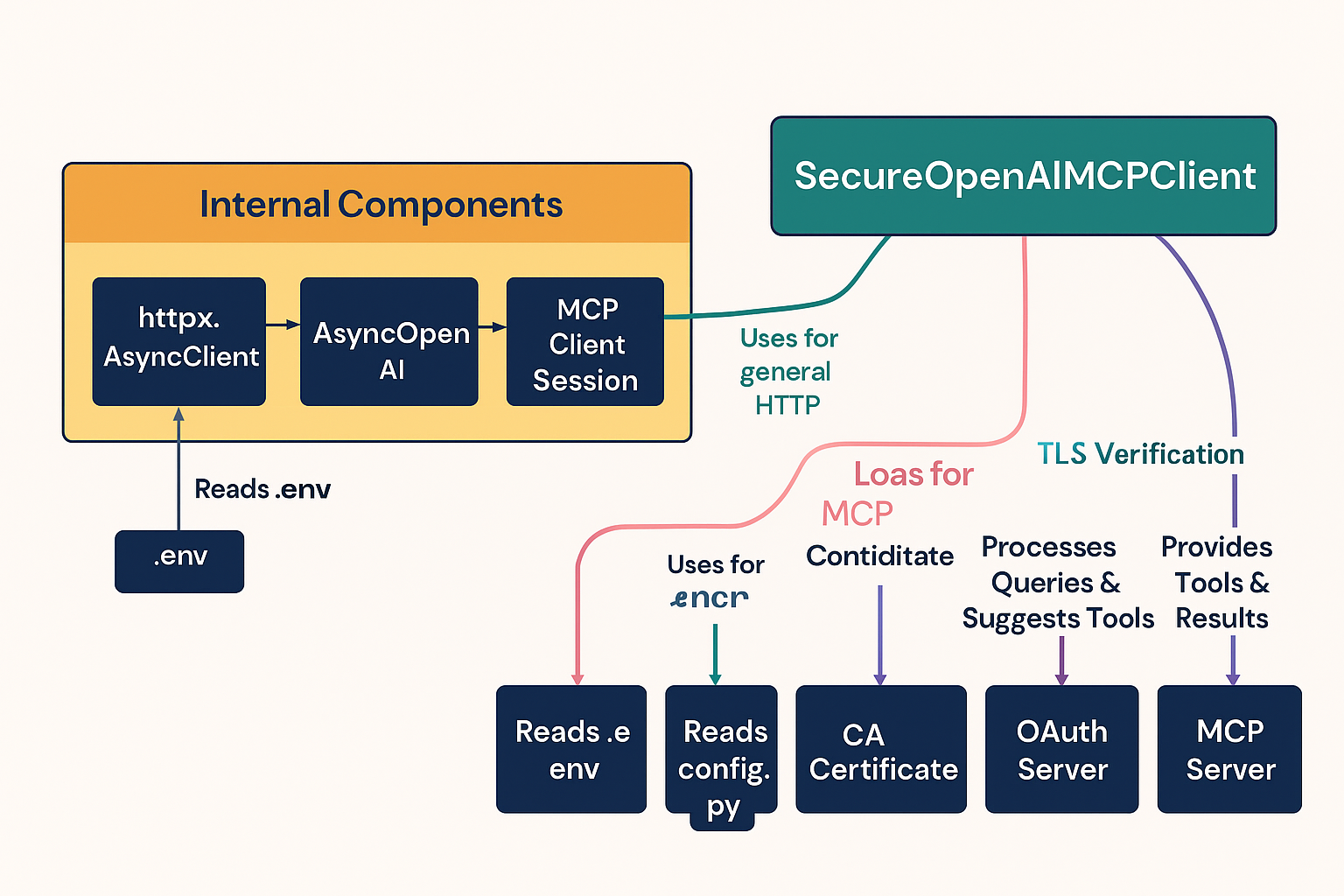

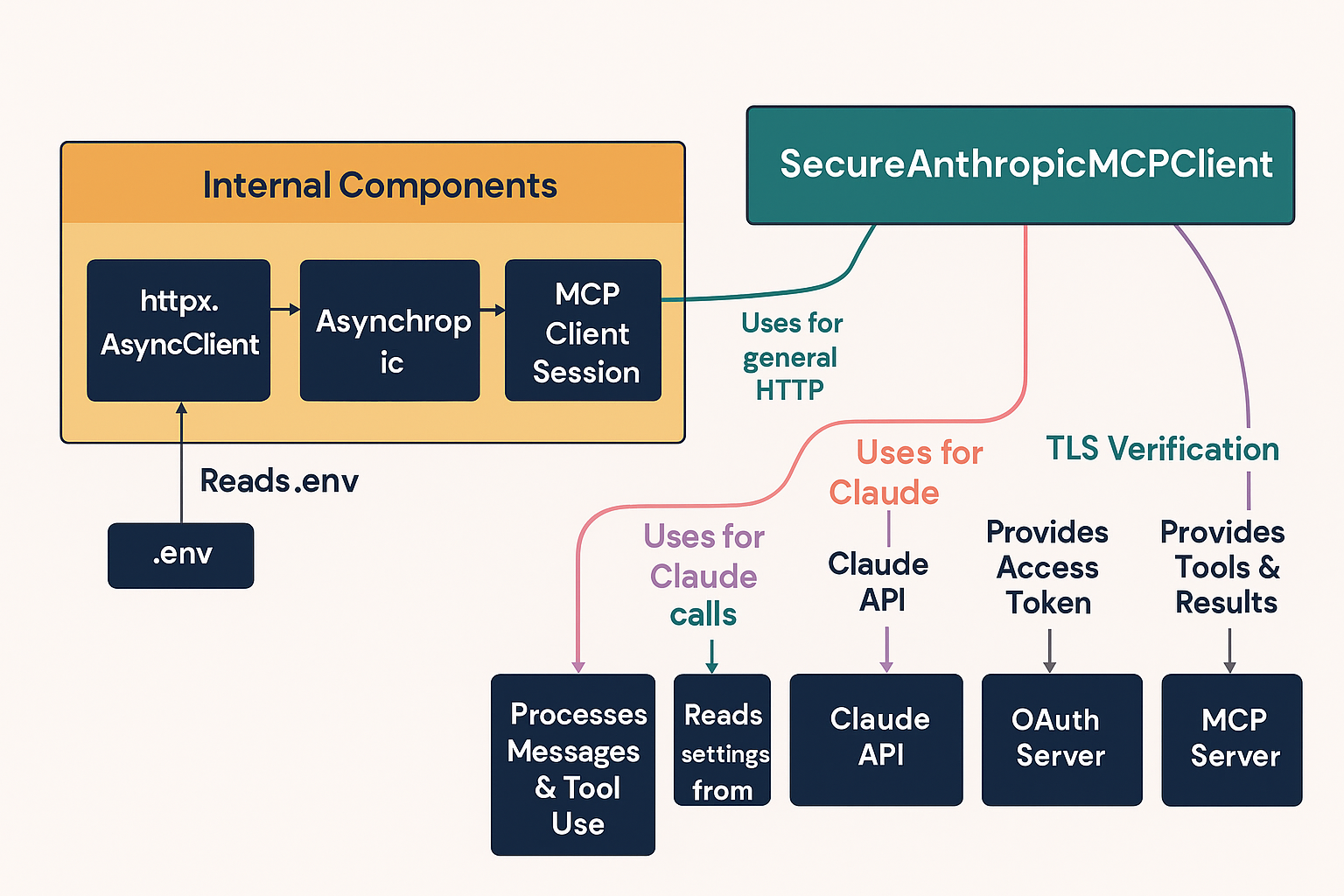

Example Technology Stack

- Languages: Python 3.12 (Poetry), Bash (automation scripts).

- Frameworks/Libraries:
  - FastMCP 2.8 (agent + server)
  - FastAPI / Uvicorn (OAuth server)
  - Pydantic v2 (validation)
  - redis-py (optional Redis backend)
  - cryptography + PyJWT (RS256)
  - httpx (async HTTP)
  - nginx reverse proxy
  - LangChain / DSPy / LiteLLM adapters for clients
  - pytest / pytest-asyncio, black, ruff, isort (dev)

- Infrastructure / Ops: Docker, docker-compose, nginx (TLS), mkcert, Go Task, Poetry.

- External services: OpenAI, Anthropic, Ollama (config-selectable).

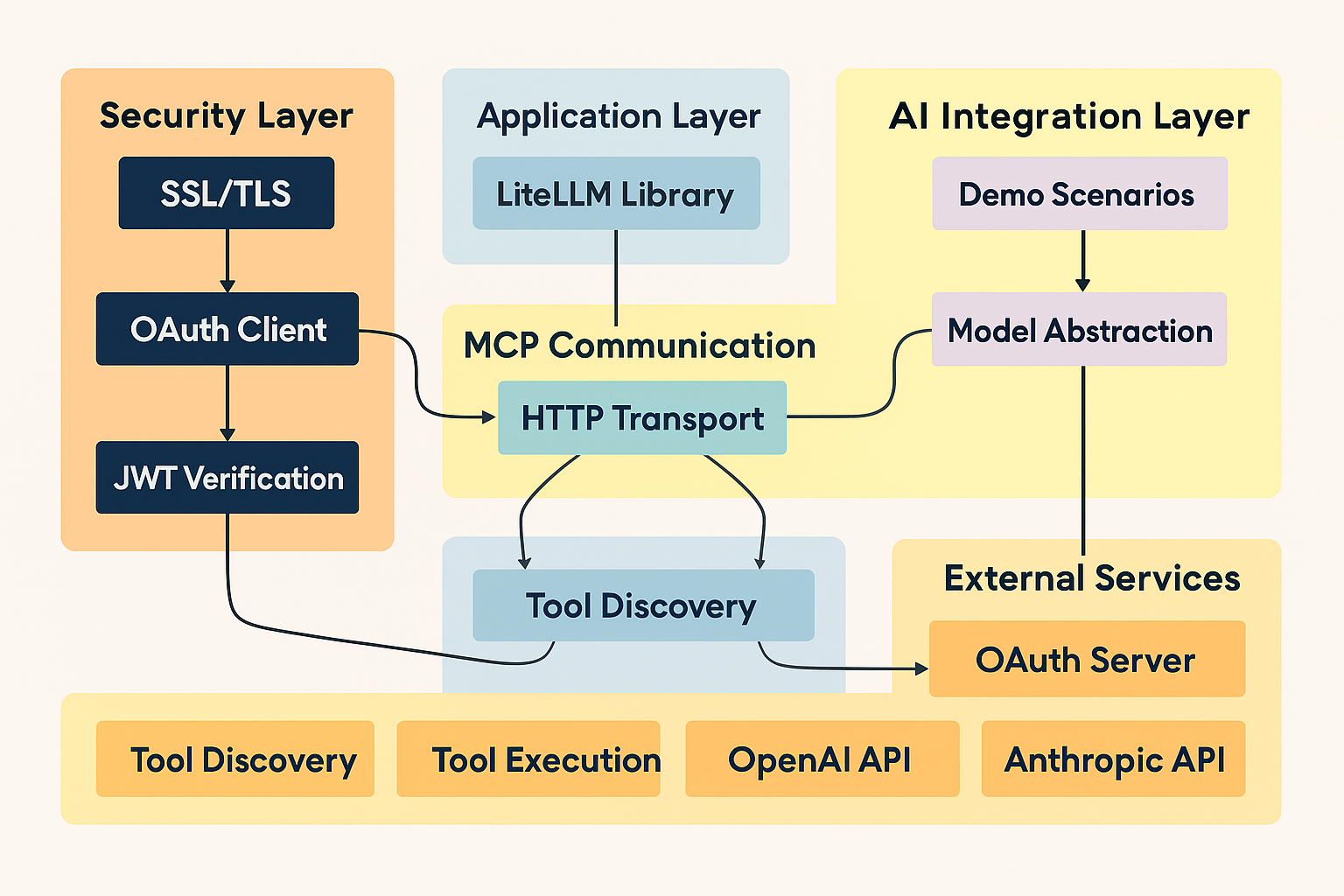

Architecture and Design

The solution follows a two-service, layered micro-service pattern.

```text
┌─────────────┐    RS256 JWT     ┌─────────────────┐
│  OAuth Svc  │ ───────────────▶ │ MCP API Server  │
└─────────────┘                  └─────────────────┘
       ▲                                  ▲
PKCE / │                                  │ FastMCP tools
Browser│                                  │
       │                                  ▼
 ┌──────────┐                      ┌─────────────┐
 │ AI Client│──HTTPS/JSON/Tools────│ LLM Provider│
 └──────────┘                      └─────────────┘
```

- OAuth Server – issues/validates JWTs, exposes JWKS; persists tokens in memory (swap for a DB).

- MCP Server – stateless FastMCP app; BearerAuthProvider verifies the JWT, then passes Context to tool functions.

- Security Layer – Pydantic validation, RateLimiter, SecurityLogger.

- Adapters – client scripts wrap OpenAI / Claude / LangChain etc., adding OAuth token acquisition and TLS pinning.

Component responsibilities:

| Module | Responsibility |
|---|---|
| src/main.py | Actual MCP server. Registers tools/resources, lifecycle, JWT verification, scope checks. |
| src/oauth_server.py | AuthZ endpoint, token endpoint, PKCE, JWKS, refresh & revoke. |
| src/security/validation.py | Strict schemas for every inbound payload. |
| src/security/rate_limiting.py | Sliding-window rate & token quota (in-memory or Redis). |
| src/security/monitoring.py | Structured event log + summary. |
| scripts/*.sh | Dev TLS (mkcert), Let's Encrypt, CA bundling. |

Our example code is laid out like this (top-level directory tree):

```text
.
├── src/                 # Python source package
│   ├── main.py          # Secure FastMCP server (HTTP transport)
│   ├── oauth_server.py  # OAuth 2.1 + PKCE auth server
│   ├── config.py        # Central env / secrets config
│   ├── secure_clients/  # Example AI clients (OpenAI, Claude, etc.)
│   └── security/        # Validation, rate-limit, monitoring helpers
├── tests/               # Pytest coverage for security & clients
├── scripts/             # TLS automation, mkcert, certbot
├── certificates/        # Generated dev certificates
├── Taskfile.yml         # Go Task workflow commands
├── pyproject.toml       # Poetry deps + tooling
└── README.md            # Full usage & security guide
```

High level architectural diagram of the example

```mermaid
graph TD
    subgraph Agentic Clients
        C1(AI client OpenAI SDK)
        C2(AI client Claude desktop)
        LLM[LLM provider<br>OpenAI / Claude / Ollama]
    end
    subgraph Edge
        NGINX{{TLS Proxy}}
    end
    subgraph Backend
        OAUTH(OAuth 2.1 Server)
        MCP(FastMCP Secure Server)
        REDIS[(Redis<br>rate-limit)]
    end
    C1 -- HTTPS / PKCE --> OAUTH
    C2 -- HTTPS / PKCE --> OAUTH
    OAUTH -- RS256 JWT --> C1
    C1 -- Bearer token + JSON --> NGINX
    C2 -- Bearer token + JSON --> NGINX
    NGINX -- mTLS --> MCP
    MCP -- verify scope --> OAUTH
    MCP -- stats --> REDIS
    C1 -- prompt + tools --> LLM
    C2 -- prompt + tools --> LLM
    style NGINX fill:#f9f,stroke:#333,stroke-width:1px,color:black
```

Let me break down this architecture from a senior dev perspective:

The system employs a classic three-tier architecture with some modern twists. At the client layer, we have AI clients (OpenAI SDK and Claude desktop) that initiate the auth flow using OAuth 2.1 with PKCE - a crucial security upgrade from basic OAuth 2.0.

The edge layer is handled by NGINX, acting as a TLS termination proxy. This is where we implement crucial security headers, SSL/TLS configuration, and potentially rate limiting at the network level. The mTLS connection between NGINX and the MCP server adds an extra layer of service-to-service authentication.

The backend is where things get interesting. Instead of a monolithic design, we’ve split the concerns into discrete services:

- The OAuth 2.1 server handles all auth flows and token management, using RS256 JWTs for secure token signing

- The FastMCP server focuses on business logic and tool integration, with proper scope verification against the OAuth server

- Redis handles distributed rate limiting - smart choice for horizontal scalability

- The LLM providers are treated as external services that the clients call directly, while the MCP server acts as the secure gateway to the business tools

What’s particularly elegant about this design is how it maintains clear separation of concerns while implementing defense in depth. Each service has its specific security responsibilities, from edge TLS to application-level scope verification.

Mind Map (key concepts)

```mermaid
mindmap
  root((MCP Security))
    Security
      OAuth 2.1
      TLS 1.3
      RS256 JWT
      Rate-Limiting
      Input Validation
    Services
      OAuth Server
      FastMCP Server
      nginx TLS
      Redis
    AI Clients
      OpenAI
      Anthropic
      LangChain
      DSPy
      LiteLLM
    DevOps
      Docker
      Taskfile
      mkcert
    Monitoring
      SecurityLogger
      /health
```

src/main.py – Sequence (get_customer_info)

```mermaid
sequenceDiagram
    participant Client
    participant MCP
    participant Validator
    participant Logger
    Client->>MCP: HTTP GET /tool/get_customer_info
    MCP->>MCP: _check_tool_permissions()
    MCP->>Validator: SecureCustomerRequest.parse()
    Validator-->>MCP: valid model
    MCP->>Logger: info("Retrieved customer info")
    MCP-->>Client: JSON payload
```

The sequence diagram illustrates an elegant security flow for customer info retrieval. The implementation follows a robust request-validation-logging pattern where each request to /tool/get_customer_info undergoes a series of security validations. We start with OAuth scope verification through _check_tool_permissions(), followed by comprehensive input sanitization via Pydantic validation. The flow concludes with business logic execution and security event logging.
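The body of _check_tool_permissions() isn't shown in this article, so the following is only a sketch of the general technique. It assumes the already-verified JWT claims arrive as a dict carrying the space-delimited scope claim that generate_access_token() produces. Apart from get_customer_info, the tool names and the tool-to-scope mapping are hypothetical; the scope strings come from the demo client configuration.

```python
from typing import Any, Dict, FrozenSet, Mapping

# Hypothetical mapping; only get_customer_info is named in this article,
# while the scope strings come from the demo OAuth client configuration.
TOOL_SCOPES: Dict[str, FrozenSet[str]] = {
    "get_customer_info": frozenset({"customer:read"}),
    "create_ticket": frozenset({"ticket:create"}),
    "calculate_account_value": frozenset({"account:calculate"}),
}


class InsufficientScopeError(PermissionError):
    """Raised when a token lacks the scopes a tool requires."""


def check_tool_permissions(tool_name: str, claims: Mapping[str, Any]) -> None:
    """Raise unless the verified token claims grant every scope the tool needs."""
    required = TOOL_SCOPES.get(tool_name)
    if required is None:
        # Deny by default: tools without a scope assignment are never callable
        raise InsufficientScopeError(f"unknown tool: {tool_name}")
    # OAuth encodes granted scopes as one space-delimited string
    granted = set(str(claims.get("scope", "")).split())
    missing = required - granted
    if missing:
        raise InsufficientScopeError(f"missing scopes: {sorted(missing)}")
```

Denying unknown tools by default means a newly registered tool stays unreachable until someone explicitly assigns it a scope.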

The architecture demonstrates a clean separation of concerns with multiple security layers woven seamlessly into the request pipeline. The permission checking and validation logic form the cornerstone of our defense against unauthorized access and injection attacks - we’ll examine these components in detail shortly.

src/oauth_server.py – Sequence (authorization-code grant)

```mermaid
sequenceDiagram
    participant AIClient
    participant OAuth
    participant UserDB
    AIClient->>OAuth: GET /authorize?client_id...
    OAuth->>AIClient: HTML login form
    AIClient->>OAuth: POST creds + approve
    OAuth->>UserDB: verify(username,pwd)
    UserDB-->>OAuth: OK
    OAuth->>AIClient: 302 redirect_uri?code=XYZ
    AIClient->>Client: deliver code
    Client->>OAuth: POST /token (code)
    OAuth-->>Client: access_token, refresh_token
```

The sequence diagram above demonstrates how we secure token generation and validation. Our OAuth server first verifies client credentials, then uses RS256 signing to create tamper-proof JWTs. The JWT payload includes crucial claims like scope, expiration, and audience to prevent token misuse.
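Signature verification alone is not enough: whoever accepts the token must also check its registered claims. PyJWT's jwt.decode(token, key, algorithms=["RS256"], audience=..., issuer=...) performs these checks together with the RS256 signature check, so the sketch below is purely illustrative of what those checks mean. It assumes the payload has already been signature-verified; the 30-second leeway for clock skew is an arbitrary choice, and in this demo the aud claim carries the client_id.

```python
import time
from typing import Any, Mapping, Optional


class InvalidTokenError(ValueError):
    """Raised when a JWT's registered claims fail validation."""


def validate_claims(claims: Mapping[str, Any], *, issuer: str, audience: str,
                    leeway: int = 30, now: Optional[float] = None) -> None:
    """Check iss, aud, exp and iat on a payload whose signature was already verified."""
    current = time.time() if now is None else now
    if claims.get("iss") != issuer:
        raise InvalidTokenError("unexpected issuer")
    # "aud" may be a single string or a list of strings
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if audience not in audiences:
        raise InvalidTokenError("token not intended for this audience")
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or current > exp + leeway:
        raise InvalidTokenError("token expired or missing exp")
    iat = claims.get("iat")
    if isinstance(iat, (int, float)) and iat > current + leeway:
        raise InvalidTokenError("token issued in the future")
```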

Security Helpers – Class Diagram

```mermaid
classDiagram
    class SecureTicketRequest {
        +customer_id: str
        +subject: str
        +description: str
        +priority: str
    }
    class RateLimiter {
        -requests_window
        -token_window
        +check_rate_limit(user_id, est_tokens)
    }
    class SecurityLogger {
        +events: List
        +log_security_event(type, details)
        +get_security_summary()
    }
    SecureTicketRequest <|-- SecureCustomerRequest
    SecureTicketRequest <|-- SecureCalculationRequest
```

This architecture provides robust security through multiple layers of protection. The first line of defense is OAuth 2.1 with PKCE, which provides secure client authentication. This is complemented by JWT-based access tokens using RS256 signing, providing cryptographic verification of client identities and permissions.

The flow for a typical AI client call is:

1. User / client performs the OAuth PKCE dance → receives an RS256 JWT access token.

2. Client sends an HTTPS POST to https://…/mcp with the Bearer token.

3. nginx terminates TLS and forwards to the FastMCP server.

4. FastMCP's BearerAuthProvider verifies signature & claims via JWKS.

5. _check_tool_permissions enforces required scopes.

6. Payload is parsed by Pydantic validators; bad input → HTTP 400.

7. RateLimiter checks quotas (Redis-backed in prod).

8. Tool executes; SecurityLogger records the event.

9. JSON result returns to the client; the client renders the answer.
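Steps 2 and 4 to 6 all depend on what the client actually puts on the wire. As a rough sketch, assuming the Streamable HTTP transport's JSON-RPC framing, a tool call is a POST whose body is a tools/call request and whose Authorization header carries the Bearer token. The hypothetical helper below only builds the request with urllib; it omits the MCP initialize handshake (and the Mcp-Session-Id header the server may return), which real clients such as FastMCP's perform first. The URL and argument names are placeholders.

```python
import json
import urllib.request
from typing import Any, Dict


def build_tool_call(url: str, access_token: str, tool: str,
                    arguments: Dict[str, Any], request_id: int = 1) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON-RPC tools/call request."""
    body = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",  # JWT from the OAuth server
            "Content-Type": "application/json",
            # Streamable HTTP servers may reply with plain JSON or an SSE stream
            "Accept": "application/json, text/event-stream",
        },
    )
```

Sending it would look like urllib.request.urlopen(req, context=ctx), where ctx is an ssl context that verifies the server certificate. nginx then sees an ordinary HTTPS POST, and the FastMCP server sees the Bearer token it must verify.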

This architecture cleanly separates concerns (auth, business logic, security controls) and is deployment-ready thanks to its Docker/Taskfile scripts. Clone the repo, run task docker-up, point any GPT/Claude client at the endpoints, and you have a fully secured AI integration demo out-of-the-box.

Let’s examine each implementation in detail using our pillars concept.

Pillar 1: Authentication and Authorization — Your Digital Identity Check

OAuth 2.1 with PKCE is the authorization framework the March 2025 MCP specification defines for HTTP-based servers. This security protocol works like a secure handshake, binding each authorization code to the client that requested it.

Here's a condensed version of our development OAuth 2.1 server (oauth_server.py) with PKCE:

src/oauth_server.py

"""

OAuth 2.1 Authorization Server with PKCE support for MCP security.

Condensed version focusing on security essentials.

"""

import jwt

from cryptography.hazmat.primitives import serialization

from fastapi import FastAPI, Form, HTTPException

from datetime import datetime, timedelta

app = FastAPI(title="OAuth 2.1 Authorization Server")

# Pre-configured MCP OAuth clients

clients = {

"mcp-secure-client": {

"client_secret": "secure-client-secret",

"redirect_uris": ["http://localhost:8080/callback"],

"scopes": ["customer:read", "ticket:create", "account:calculate"]

},

# ... other MCP clients

}

def generate_access_token(user_id: str, client_id: str, scopes: List[str]) -> str:

"""Generate JWT access token with RS256 algorithm for MCP authentication."""

now = datetime.utcnow()

payload = {

"sub": user_id,

"aud": client_id,

"iss": Config.get_oauth_issuer_url(),

"iat": int(now.timestamp()),

"exp": int((now + timedelta(hours=1)).timestamp()),

"scope": " ".join(scopes),

"jti": str(uuid.uuid4())

}

private_key = load_private_key()

return jwt.encode(payload, private_key, algorithm="RS256")

@app.post("/token")

async def token(

grant_type: str = Form(...),

client_id: str = Form(...),

client_secret: Optional[str] = Form(None),

# ... other parameters

):

"""Token endpoint for MCP client authentication."""

# Verify MCP client credentials

if client_id not in clients:

raise HTTPException(400, "Invalid MCP client")

if grant_type == "authorization_code":

# ... PKCE verification logic

# Generate JWT for MCP tool access

access_token = generate_access_token(

user_id,

client_id,

scopes # MCP tool permissions

)

return {

"access_token": access_token,

"token_type": "Bearer",

"expires_in": 3600,

"scope": " ".join(scopes)

}

# ... other grant types

@app.get("/jwks")

async def get_jwks():

"""Return JSON Web Key Set for MCP token verification."""

public_key = load_public_key()

public_numbers = public_key.public_numbers()

jwk = {

"kty": "RSA",

"use": "sig",

"alg": "RS256",

"kid": "mcp-oauth-key-1",

"n": int_to_base64url(public_numbers.n),

"e": int_to_base64url(public_numbers.e)

}

return {"keys": [jwk]}

# TLS configuration for production

if __name__ == "__main__":

import uvicorn

# For production: Enable TLS for secure MCP communication

uvicorn.run(

app,

host="0.0.0.0",

port=443,

ssl_keyfile="path/to/key.pem",

ssl_certfile="path/to/cert.pem"

)

You might wonder why I built an OAuth 2.1 server for an example article instead of using an existing solution. I wanted to gain a deeper understanding of the protocol, and I preferred to keep the article vendor-neutral, though I may write vendor-specific follow-up articles.

Let’s walk through the code to better understand how it works conceptually.

OAuth 2.1 Authorization Server for MCP Security - Code Analysis

The code has one primary entry point:

__main__ block: Launches the FastAPI server with optional TLS configuration

High-level Control Flow

1. Server Startup: The __main__ block initializes a Uvicorn web server

2. Client Request: External MCP clients make HTTP requests to various endpoints

3. Authentication Flow: Clients authenticate via the OAuth 2.1 protocol

4. Token Generation: The server generates JWT tokens for authorized clients

5. Token Verification: Clients can verify tokens using the JWKS endpoint

6. Server Termination: The process ends when the server stops

Global Sequence Diagram

```mermaid
sequenceDiagram
    participant MCP as MCP Client
    participant OAuth as OAuth Server
    participant JWT as JWT Library
    participant Crypto as Cryptography
    MCP->>OAuth: POST /token (credentials)
    OAuth->>OAuth: Verify client_id
    OAuth->>OAuth: Validate credentials
    OAuth->>Crypto: Load private key
    Crypto-->>OAuth: RSA private key
    OAuth->>JWT: Generate token
    JWT-->>OAuth: Signed JWT
    OAuth-->>MCP: Access token response
    MCP->>OAuth: GET /jwks
    OAuth->>Crypto: Load public key
    Crypto-->>OAuth: RSA public key
    OAuth-->>MCP: JSON Web Key Set
```

This diagram shows how an MCP (Model Context Protocol) client receives authorization. The client sends its credentials to the OAuth server. The server validates the client, then creates a secure token using cryptography. The token acts as a temporary ID card that proves the client can use specific tools. The client can later verify this token’s authenticity by checking it against the server’s public key.

Function-by-Function Analysis

generate_access_token()

Purpose: Creates a secure JWT token that MCP clients use to prove their identity and permissions.

Signature & Parameters:

| Parameter | Type | Description |
|---|---|---|
| user_id | str | The identifier of the user making the request |
| client_id | str | The identifier of the MCP client application |
| scopes | List[str] | List of permissions granted (e.g., “customer:read”) |
| Returns | str | A signed JWT token as a string |

Side Effects:

- Reads from the filesystem to load the private key

- May raise exceptions if key loading fails

generate_access_token Code Listing:

```python
import uuid
from datetime import datetime, timedelta, timezone
from typing import List

import jwt

# Config and load_private_key() come from elsewhere in the repo


def generate_access_token(user_id: str, client_id: str, scopes: List[str]) -> str:
    """Generate JWT access token with RS256 algorithm for MCP authentication."""
    now = datetime.now(timezone.utc)  # timezone-aware; naive utcnow() skews .timestamp()
    payload = {
        "sub": user_id,  # Subject: who the token is for
        "aud": client_id,  # Audience: which app can use it
        "iss": Config.get_oauth_issuer_url(),  # Issuer: who created it
        "iat": int(now.timestamp()),  # Issued at: when created
        "exp": int((now + timedelta(hours=1)).timestamp()),  # Expires: when invalid
        "scope": " ".join(scopes),  # Permissions granted
        "jti": str(uuid.uuid4()),  # Unique token ID
    }
    private_key = load_private_key()
    return jwt.encode(payload, private_key, algorithm="RS256")
```

generate_access_token Mini Sequence Diagram:

```mermaid
sequenceDiagram
    participant Func as generate_access_token
    participant Time as datetime
    participant Config as Config
    participant Key as load_private_key
    participant JWT as jwt.encode
    Func->>Time: Get current UTC time
    Time-->>Func: timestamp
    Func->>Config: Get issuer URL
    Config-->>Func: OAuth server URL
    Func->>Func: Build payload dict
    Func->>Key: Load RSA private key
    Key-->>Func: Private key object
    Func->>JWT: Encode with RS256
    JWT-->>Func: Signed token string
```

This function creates a secure pass for MCP tools. It generates a temporary ID card containing the person’s name, accessible doors (scopes), and expiration time (1 hour). The server signs the card with a secret stamp (private key) that only it possesses.
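To see exactly what generate_access_token() put inside a token, you can base64url-decode its middle segment. The sketch below does that with the standard library. It deliberately skips signature verification, so it is for debugging only and must never feed an authorization decision (PyJWT offers the same via jwt.decode(token, options={"verify_signature": False})). The helper names are hypothetical.

```python
import base64
import json
from typing import Any, Dict


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the '=' padding that JWTs strip."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def peek_claims(token: str) -> Dict[str, Any]:
    """Return a JWT's payload WITHOUT verifying the signature (debugging only)."""
    # A compact JWT is header.payload.signature, each part base64url-encoded
    _header_b64, payload_b64, _signature_b64 = token.split(".")
    return json.loads(b64url_decode(payload_b64))
```

For a token from the demo server, peek_claims(token)["scope"] would show the space-separated scopes, and exp minus iat would be 3600 seconds.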

token() Endpoint

Purpose: Main endpoint that exchanges credentials for access tokens following the OAuth 2.1 protocol.

Signature & Parameters:

| Parameter | Type | Required | Description |
|---|---|---|---|
| grant_type | str | Yes | Type of authentication flow (e.g., “authorization_code”) |
| client_id | str | Yes | Identifier of the MCP client requesting access |
| client_secret | Optional[str] | No | Secret password for the client |
| code | Optional[str] | No | Authorization code from the previous step |
| redirect_uri | Optional[str] | No | Must match the redirect URI used in the authorization request |
| code_verifier | Optional[str] | No | PKCE secret whose hash must match the original code_challenge |
| refresh_token | Optional[str] | No | Token to get a new access token |
| scope | Optional[str] | No | Requested permissions |
| Returns | dict | - | Token response with access_token, type, expiry |

Side Effects:

- Modifies in-memory token storage

- Raises HTTP exceptions for invalid requests

- Performs I/O to load cryptographic keys

token() Code Listing:

```python
from typing import Optional

from fastapi import Form, HTTPException

# app, clients and generate_access_token() are defined earlier in src/oauth_server.py


@app.post("/token")
async def token(
    grant_type: str = Form(...),
    client_id: str = Form(...),
    client_secret: Optional[str] = Form(None),
    # ... other parameters
):
    """Token endpoint for MCP client authentication."""
    # Step 1: Verify the MCP client is registered
    if client_id not in clients:
        raise HTTPException(400, "Invalid MCP client")

    # Step 2: Handle different authentication flows
    if grant_type == "authorization_code":
        # ... PKCE verification logic (elided; it also establishes user_id and scopes)

        # Step 3: Generate JWT for MCP tool access
        access_token = generate_access_token(
            user_id,
            client_id,
            scopes,  # MCP tool permissions like "customer:read"
        )

        # Step 4: Return standardized OAuth response
        return {
            "access_token": access_token,
            "token_type": "Bearer",
            "expires_in": 3600,  # 1 hour in seconds
            "scope": " ".join(scopes),
        }

    # ... other grant types
```

token() Mini Sequence Diagram:

```mermaid
sequenceDiagram
    participant Client as MCP Client
    participant Endpoint as token()
    participant Storage as clients dict
    participant Gen as generate_access_token()
    Client->>Endpoint: POST (grant_type, client_id)
    Endpoint->>Storage: Check client exists
    Storage-->>Endpoint: Client config
    Endpoint->>Endpoint: Validate grant type
    Endpoint->>Gen: Create JWT token
    Gen-->>Endpoint: Signed token
    Endpoint-->>Client: Token response JSON
```

This function operates as a security desk that verifies credentials and issues passes. MCP clients present their ID (client_id) and prove their identity. After verification succeeds, they receive a temporary pass (JWT token) that grants access to specific tools for one hour.

get_jwks() Endpoint

Purpose: Provides public cryptographic keys that clients use to verify token authenticity.

Signature & Parameters:

| Parameter | Type | Description |
|---|---|---|
| None | - | This endpoint takes no parameters |
| Returns | dict | JSON Web Key Set containing public keys |

Side Effects:

- Reads the public key from the filesystem

- May raise exceptions if the key file is missing

get_jwks Code Listing:

```python
# app, load_public_key() and int_to_base64url() come from the rest of the module/repo


@app.get("/jwks")
async def get_jwks():
    """Return JSON Web Key Set for MCP token verification."""
    # Step 1: Load the server's public key
    public_key = load_public_key()
    public_numbers = public_key.public_numbers()

    # Step 2: Convert to standard JWK format
    jwk = {
        "kty": "RSA",  # Key type
        "use": "sig",  # Used for signatures
        "alg": "RS256",  # Algorithm
        "kid": "mcp-oauth-key-1",  # Key identifier
        "n": int_to_base64url(public_numbers.n),  # RSA modulus
        "e": int_to_base64url(public_numbers.e),  # RSA exponent
    }

    # Step 3: Return in standard JWKS format
    return {"keys": [jwk]}
```

get_jwks Mini Sequence Diagram:

```mermaid
sequenceDiagram
    participant Client as MCP Client
    participant Endpoint as get_jwks()
    participant Crypto as load_public_key()
    participant Convert as int_to_base64url()
    Client->>Endpoint: GET /jwks
    Endpoint->>Crypto: Load public key
    Crypto-->>Endpoint: RSA public key
    Endpoint->>Endpoint: Extract n, e values
    Endpoint->>Convert: Convert to base64
    Convert-->>Endpoint: Encoded values
    Endpoint-->>Client: {"keys": [...]}
```

This function shares the server’s public stamp that clients use to verify token authenticity. It publishes the official seal design, enabling anyone to verify whether a document bears the genuine seal or a counterfeit.
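get_jwks() relies on an int_to_base64url() helper that the listing doesn't show. JWKs encode the RSA n and e values as Base64urlUInt (RFC 7518): big-endian, using the minimum number of octets, base64url without padding. Below is one plausible implementation plus its inverse, as a verifier would apply it; the repo's version may differ.

```python
import base64


def int_to_base64url(value: int) -> str:
    """Encode a non-negative integer as unpadded big-endian base64url (JWK style)."""
    if value < 0:
        raise ValueError("JWK integers must be non-negative")
    length = max(1, (value.bit_length() + 7) // 8)  # minimal number of octets
    raw = value.to_bytes(length, "big")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def base64url_to_int(data: str) -> int:
    """Inverse: restore padding, decode, and read the bytes as a big-endian integer."""
    raw = base64.urlsafe_b64decode(data + "=" * (-len(data) % 4))
    return int.from_bytes(raw, "big")
```

The familiar “AQAB” value in most published JWKs is simply the public exponent 65537 encoded this way.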

__main__ Block

Purpose: Entry point that starts the web server with security configuration.

Context: Executes when the script runs directly, not when imported as a module.

Side Effects:

- Starts a long-running web server process

- Binds to network ports

- Loads SSL certificates in production

Code Listing:

```python
if __name__ == "__main__":
    import uvicorn

    # For production: Enable TLS for secure MCP communication
    uvicorn.run(
        app,  # FastAPI application
        host="0.0.0.0",  # Listen on all interfaces
        port=443,  # HTTPS port
        ssl_keyfile="path/to/key.pem",  # TLS private key
        ssl_certfile="path/to/cert.pem",  # TLS certificate
    )
```

OAuth Architectural Mapping

OAuth Demo Server System Architecture Diagram

```mermaid
graph TB
    subgraph "External Layer"
        MCP[MCP Clients]
        Browser[Web Browsers]
    end
    subgraph "API Layer"
        Token[token endpoint]
        JWKS[jwks endpoint]
    end
    subgraph "Business Logic Layer"
        Auth[Authentication Logic]
        JWT[JWT Generation]
        Validation[Client Validation]
    end
    subgraph "Security Layer"
        TLS[TLS Encryption]
        RSA[RSA Cryptography]
        PKCE[PKCE Verification]
    end
    subgraph "Data Layer"
        ClientDB[(Client Registry)]
        Keys[(Cryptographic Keys)]
    end
    MCP -->|HTTPS| Token
    MCP -->|HTTPS| JWKS
    Browser -->|HTTPS| Token
    Token --> Auth
    Token --> Validation
    Auth --> JWT
    JWT --> RSA
    JWKS --> RSA
    Validation --> ClientDB
    RSA --> Keys
    TLS -.->|Encrypts| Token
    TLS -.->|Encrypts| JWKS
```

This diagram is structured like a stack of building blocks! At the top, MCP clients and web browsers interact with our server. In the API layer below, our endpoints receive these requests and direct them appropriately. The business logic layer then springs into action, verifying identities and creating specialized tokens. For maximum protection, our security layer handles all the sophisticated encryption operations. At the foundation, our data layer acts as a secure vault, safeguarding all client information and cryptographic keys.

Layer Descriptions

1. External Layer: Connection point for MCP clients and external applications
2. API Layer: REST endpoints that handle HTTP requests and responses
3. Business Logic Layer: Core OAuth 2.1 protocol implementation
4. Security Layer: Cryptographic operations and transport security (TLS)
5. Data Layer: Storage of configuration and keys

Interfaces Between Layers

- External → API: HTTPS requests with OAuth parameters

- API → Business Logic: Function calls with validated parameters

- Business Logic → Security: Cryptographic operations (signing, verification)

- Security → Data: File I/O for key retrieval

- All Layers: Cross-cutting TLS encryption for transport security

Cross-cutting Concerns

1. Security:
   - TLS encryption on all endpoints
   - JWT signing with RS256
   - PKCE for the authorization code flow
2. Error Handling:
   - HTTP exceptions with proper status codes
   - Validation at each layer
3. Configuration:
   - Client registry (in-memory for demo)
   - Cryptographic key management
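PKCE is listed as a cross-cutting concern, but its mechanics are easy to miss. Below is a minimal stdlib sketch of the S256 method from RFC 7636:

- The client keeps a random `code_verifier`.
- It sends `BASE64URL(SHA256(code_verifier))` as the `code_challenge` with the authorization request.
- The server recomputes the challenge and compares it when the code is redeemed at `/token`.

The function names are illustrative and not taken from the demo server:

```python
import base64
import hashlib
import hmac
import secrets


def make_code_verifier() -> str:
    """High-entropy verifier: 32 random bytes encode to 43 base64url characters."""
    return base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")


def s256_challenge(verifier: str) -> str:
    """code_challenge = BASE64URL(SHA256(ASCII(code_verifier))) without padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def verify_pkce(verifier: str, stored_challenge: str) -> bool:
    """Server side: recompute the challenge and compare in constant time."""
    return hmac.compare_digest(s256_challenge(verifier), stored_challenge)
```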

OAuth Demo Server

1. Entry Point: Server startup via `__main__` block with TLS configuration
2. Core Endpoints: `/token` for authentication and `/jwks` for key distribution
3. Security Features: JWT with RS256 signing, PKCE support, TLS encryption
4. Architecture: Clean separation between API, business logic, security, and data layers
5. MCP Integration: Pre-configured clients with specific scopes for tool access

The implementation provides a secure foundation for MCP tool authentication, making certain that only authorized clients can access protected resources through cryptographically signed tokens.
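Clients and resource servers consume `/jwks` in three steps:

1. Read the (still unverified) JWT header.
2. Match its `kid` against the published keys.
3. Check the RS256 signature with that key.

Below is a stdlib-only sketch of the key-selection step. It assumes tokens carry a `kid` header like the `mcp-oauth-key-1` value above. `select_signing_key()` is a hypothetical helper; the signature check itself would be done afterwards with a crypto library such as `cryptography` or PyJWT:

```python
import base64
import json


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def select_signing_key(token: str, jwks: dict) -> dict:
    """Return the JWK whose kid matches the token header, refusing unexpected algorithms."""
    header = json.loads(_b64url_decode(token.split(".")[0]))
    # Pin the algorithm: the header is attacker-controlled, so never let it choose.
    if header.get("alg") != "RS256":
        raise ValueError(f"unexpected alg: {header.get('alg')!r}")
    for key in jwks.get("keys", []):
        if key.get("kid") == header.get("kid") and key.get("kty") == "RSA":
            return key
    raise LookupError(f"no RSA key with kid {header.get('kid')!r}")
```

Pinning `alg` to RS256 before looking anything up matters: the server, not the token, should decide which algorithm is acceptable.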

Pillar 2: Transport Security — Your Encrypted Highway

TLS (Transport Layer Security) protects your data in transit by encrypting it. Without TLS, data is exposed to network eavesdroppers. MCP servers require TLS 1.2 at minimum, and TLS 1.3 is recommended.
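The same floor can be enforced from the client side. Below is a minimal sketch using Python's standard `ssl` module. It assumes the MCP client's HTTP library accepts an `ssl.SSLContext`, as the standard library's `urllib` and `http.client` do. `make_client_tls_context()` is a hypothetical name, and in a local setup `cafile` would point at the self-signed certificate:

```python
import ssl
from typing import Optional


def make_client_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client TLS context that verifies the server and refuses anything below TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context(cafile=cafile)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

Raising the floor to `ssl.TLSVersion.TLSv1_3` is a one-line change if every server you talk to supports it.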

Here’s our production nginx configuration with proper TLS termination and upstream service routing:

nginx/nginx.conf

```nginx
# Production-ready nginx configuration for secure MCP deployment
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    # Security headers
    add_header X-Frame-Options "SAMEORIGIN" always;
    add_header X-Content-Type-Options "nosniff" always;
    add_header X-XSS-Protection "1; mode=block" always;
    add_header Referrer-Policy "strict-origin-when-cross-origin" always;
    add_header Content-Security-Policy "default-src 'self'; script-src 'self' 'unsafe-inline'; style-src 'self' 'unsafe-inline';" always;

    # HSTS (HTTP Strict Transport Security)
    add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

    # SSL Configuration
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256';
    ssl_prefer_server_ciphers off;

    # SSL session caching
    ssl_session_cache shared:SSL:10m;
    ssl_session_timeout 10m;

    # OCSP Stapling
    ssl_stapling on;
    ssl_stapling_verify on;
    resolver 8.8.8.8 8.8.4.4 valid=300s;
    resolver_timeout 5s;

    # Logging
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;

    # OAuth Server (HTTPS)
    server {
        listen 443 ssl http2;
        server_name localhost;

        ssl_certificate /etc/nginx/certs/server.crt;
        ssl_certificate_key /etc/nginx/certs/server.key;

        location / {
            proxy_pass http://oauth-server:8080;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # Important: Pass original headers
            proxy_pass_request_headers on;
        }
    }

    # MCP Server (HTTPS with streamable-http support)
    server {
        listen 8001 ssl http2;
        server_name localhost;

        ssl_certificate /etc/nginx/certs/server.crt;
        ssl_certificate_key /etc/nginx/certs/server.key;

        # CRITICAL: The trailing slash matters for MCP connections!
        # /mcp will fail with "Session terminated" errors
        # /mcp/ will work correctly
        location /mcp/ {
            proxy_pass http://mcp-server:8000/;

            # Required headers for MCP
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # Pass Authorization header
            proxy_set_header Authorization $http_authorization;

            # WebSocket support (if needed)
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";

            # Timeouts for long-running connections
            proxy_connect_timeout 300s;
            proxy_send_timeout 300s;
            proxy_read_timeout 300s;

            # Disable buffering for streaming
            proxy_buffering off;
            proxy_cache off;

            # Increase buffer sizes
            proxy_buffer_size 8k;
            proxy_buffers 8 8k;
            proxy_busy_buffers_size 16k;
        }
    }

    # HTTP to HTTPS redirect
    server {
        listen 80;
        server_name localhost;
        return 301 https://$server_name$request_uri;
    }
}
```

Let’s break down what this nginx configuration does:

What’s Under the Hood

- How It Runs: `worker_processes auto` starts one worker process per available CPU core, and each worker handles up to 1,024 simultaneous connections
- Keeping Things Safe: The security headers provide:
  - Protection against clickjacking (`X-Frame-Options`)
  - A Content Security Policy that controls where resources may load from
  - HTTPS enforcement via HSTS
- Extra Security Stuff:
  - Only TLS 1.2 and 1.3 are enabled
  - Strong cipher suites only (ECDHE key exchange with AES-GCM or ChaCha20-Poly1305)
  - OCSP stapling for faster certificate revocation checks

Where Everything Goes

- Login Server (Port 443):
  - Terminates TLS for the OAuth server
  - Proxies all requests to `oauth-server:8080`
  - Forwards client IP and protocol headers (`X-Real-IP`, `X-Forwarded-For`, `X-Forwarded-Proto`)
- MCP Server (Port 8001):
  - Terminates TLS for MCP traffic and proxies `/mcp/` to `mcp-server:8000`
  - Forwards the `Authorization` header so the MCP server can verify tokens
  - Allows long-lived connections (300-second connect, send, and read timeouts, plus upgrade headers)
  - Disables proxy buffering so streamed responses reach clients immediately
- Safety Net (Port 80):
  - Redirects plain HTTP requests to HTTPS with a 301
  - Ensures clients end up on an encrypted connection

Think of this setup as a vigilant security guard, directing traffic and keeping your data fortress secure.

**OCSP Stapling** is a performance and privacy optimization. It lets nginx fetch the certificate's revocation status from the Certificate Authority and "staple" it to the TLS handshake. Clients no longer need to make their own OCSP queries, which speeds up TLS negotiation.

**Critical SSL Discovery:** During development, we discovered that **trailing slashes matter enormously** for MCP connections. URLs like https://localhost:8001/mcp fail with “Session terminated” errors, while https://localhost:8001/mcp/ (with the trailing slash) works correctly. The `location /mcp/` block in this configuration is built around the trailing-slash form, so configure clients with that URL.
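Since the trailing slash is easy to forget in client configuration, one option is to normalize the endpoint URL before connecting. A small stdlib sketch; `normalize_mcp_url()` is a hypothetical helper, not part of the demo code:

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_mcp_url(url: str) -> str:
    """Ensure the endpoint path ends with '/', e.g. .../mcp -> .../mcp/."""
    parts = urlsplit(url)
    path = parts.path if parts.path.endswith("/") else parts.path + "/"
    # Rebuild the URL, keeping scheme, host:port, query, and fragment untouched.
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))
```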

MCP Security Architecture Diagrams with TLS Reverse Proxy

Nginx TLS Reverse Proxy for MCP Server - System Architecture Overview

```mermaid
graph TB
    subgraph "External Clients"
        Client1[MCP Client<br/>Claude Desktop]
        Client2[MCP Client<br/>OpenAI]
        Client3[Web Browser<br/>Admin]
    end
    subgraph "Edge Layer - nginx"
        NGINX[nginx Reverse Proxy<br/>:443, :8001, :80]
        TLS[TLS Termination<br/>TLSv1.2/1.3]
        Headers[Security Headers<br/>HSTS, CSP, etc.]
    end
    subgraph "Authentication Layer"
        OAuth[OAuth 2.1 Server<br/>:8080]
        JWT[JWT Token<br/>Generation]
        JWKS[JWKS Endpoint<br/>Public Keys]
    end
    subgraph "Application Layer"
        MCP[MCP Server<br/>:8000]
        Tools[MCP Tools<br/>Customer Service]
        Session[Session Management]
    end
    subgraph "Data Layer"
        Redis[(Redis Cache<br/>:6379)]
        Keys[(Cryptographic<br/>Keys)]
    end
    Client1 -->|HTTPS/WSS| NGINX
    Client2 -->|HTTPS/WSS| NGINX
    Client3 -->|HTTPS| NGINX
    NGINX -->|Proxy Pass| OAuth
    NGINX -->|Proxy Pass /mcp/| MCP
    OAuth --> JWT
    OAuth --> JWKS
    OAuth --> Keys
    MCP --> Tools
    MCP --> Session
    MCP --> Redis
    TLS -.->|Encrypts| NGINX
    Headers -.->|Protects| NGINX
```

Think of the architecture above as a high-tech home security system. Visitors (external clients) enter through a sophisticated front door (the nginx reverse proxy), which terminates TLS and applies security headers. A dedicated security guard (the OAuth server) checks credentials independently of core operations (the MCP server). A fast in-memory store (Redis) tracks session data. Best of all, each component runs in its own Docker container, isolating the services from one another.

Network Architecture

```mermaid
graph LR
    subgraph "Internet Outer"
        Internet[Public Internet]
    end
    subgraph "Host Machine"
        Port443[Port 443<br/>HTTPS/OAuth]
        Port8001[Port 8001<br/>HTTPS/MCP]
        Port80[Port 80<br/>HTTP Redirect]
    end
    subgraph "Docker Network: mcp-network"
        subgraph "nginx Container"
            NGINX_443[nginx:443]
            NGINX_8001[nginx:8001]
            NGINX_80[nginx:80]
        end
        subgraph "oauth Container"
            OAuth_8080[oauth:8080]
        end
        subgraph "mcp Container"
            MCP_8000[mcp:8000]
        end
        subgraph "redis Container"
            Redis_6379[redis:6379]
        end
    end
    Internet --> Port443
    Internet --> Port8001
    Internet --> Port80
    Port443 --> NGINX_443
    Port8001 --> NGINX_8001
    Port80 --> NGINX_80
    NGINX_443 -->|proxy_pass| OAuth_8080
    NGINX_8001 -->|proxy_pass /mcp/| MCP_8000
    NGINX_80 -->|301 redirect| NGINX_443
    MCP_8000 --> Redis_6379
```

The network architecture diagram shows how traffic flows between Docker containers through port mappings. All containers communicate over an isolated bridge network called “mcp-network.” When external traffic reaches the mapped host ports, nginx routes each request to the appropriate backend service. Because the nginx container is the single entry point, only its ports are exposed to the host, which limits the attack surface.

Nginx Security Architecture

```mermaid
graph LR
    subgraph "Security Layers"
        subgraph "Transport Security"
            TLS12[TLS 1.2]
            TLS13[TLS 1.3]
            Ciphers[Strong Cipher Suites<br/>ECDHE-ECDSA-AES256-GCM]
            OCSP[OCSP Stapling]
        end
        subgraph "HTTP Security Headers"
            HSTS[HSTS<br/>max-age=31536000]
            CSP[Content Security Policy]
            XFrame[X-Frame-Options: SAMEORIGIN]
            XContent[X-Content-Type-Options: nosniff]
            XXSS["X-XSS-Protection: 1; mode=block"]
        end
        subgraph "Authentication & Authorization"
            OAuth2[OAuth 2.1 + PKCE]
            JWT_RS256[JWT with RS256]
            Scopes[Granular Scopes<br/>customer:read, ticket:create]
        end
        subgraph "Application Security"
            NonRoot[Non-root User<br/>appuser]
            ReadOnly[Read-only Volumes]
            HealthCheck[Health Checks]
            Secrets[Environment Secrets]
        end
    end
    subgraph "Defense in Depth"
        L1[Layer 1: Network Isolation]
        L2[Layer 2: TLS Encryption]
        L3[Layer 3: Authentication]
        L4[Layer 4: Authorization]
        L5[Layer 5: Container Security]
    end
    L1 --> L2 --> L3 --> L4 --> L5
```

This security architecture diagram demonstrates the defense-in-depth approach. Multiple security layers protect the system: transport security (TLS 1.2/1.3 with strong ciphers), HTTP security headers (HSTS, CSP, etc.), OAuth 2.1 authentication with JWT tokens, and container-level security (non-root users, read-only volumes). Each layer provides independent protection, providing system resilience even if one layer is compromised.

Think of this security setup like a building with multiple security checkpoints. At the entrance, you have military-grade locks (the TLS encryption), followed by a team of security guards checking IDs at various stations (the HTTP headers and OAuth verification). Finally, all valuable assets are stored in separate, fortified vaults (the container security). If an intruder somehow breaches one checkpoint, they’ll face multiple additional barriers—a clever defense strategy. Like a series of backup plans, this layered approach keeps your system secure even if one protection measure becomes compromised.
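One way to confirm the header checkpoint is actually in place is to compare a live response against what the nginx config promises. Below is a minimal sketch. It assumes the response headers are available as a dict-like object with `.items()`, for example `response.headers` from `urllib.request.urlopen`. The expected substrings mirror the `add_header` lines in the configuration, and `missing_security_headers()` is a hypothetical helper:

```python
from typing import List, Mapping

# Expected (lowercase) substrings, mirroring the add_header lines in nginx.conf.
EXPECTED_HEADERS = {
    "strict-transport-security": "max-age=",
    "x-content-type-options": "nosniff",
    "x-frame-options": "sameorigin",
    "content-security-policy": "default-src",
}


def missing_security_headers(headers: Mapping[str, str]) -> List[str]:
    """Return names of expected headers that are absent or lack the expected value."""
    # Header names are case-insensitive, so normalize both names and values.
    normalized = {name.lower(): value.lower() for name, value in headers.items()}
    return [
        name
        for name, expected in EXPECTED_HEADERS.items()
        if expected not in normalized.get(name, "")
    ]
```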

Demo Deployment Container Architecture

```mermaid
graph TB
    subgraph "Base Image"
        Python[python:3.12.9-slim]
        System[System Dependencies<br/>curl, build-essential]
        AppUser[appuser:appuser]
        Poetry[Poetry 1.7.1]
    end
    subgraph "OAuth Container"
        OAuth_Base[Base Stage]
        OAuth_Code[OAuth Server Code]
        OAuth_Port[EXPOSE 8080]
        OAuth_Health[Health Check<br/>/health endpoint]
        OAuth_CMD[CMD python src/oauth_server.py]
    end
    subgraph "MCP Container"
        MCP_Base[Base Stage]
        MCP_Code[MCP Server Code]
        MCP_Port[EXPOSE 8000]
        MCP_Health[Health Check<br/>/health endpoint]
        MCP_CMD[CMD python src/main.py]
    end
    subgraph "Volume Mounts"
        Keys[./keys:/app/keys:ro]
        Logs[./logs:/app/logs]
        Certs[./certificates:/etc/nginx/certs:ro]
    end
    Python --> OAuth_Base
    Python --> MCP_Base
    OAuth_Base --> OAuth_Code
    MCP_Base --> MCP_Code
    Keys --> OAuth_Base
    Keys --> MCP_Base
    Logs --> OAuth_Base
    Logs --> MCP_Base
    Certs --> Python
```

The container architecture uses a multi-stage Dockerfile approach with a shared base image. Both OAuth and MCP containers inherit from the base stage, reducing image size and maintaining consistency. Containers run as non-root users (appuser) for security. Volume mounts provide read-only access to cryptographic keys and certificates, while logs are writable. Health checks monitor container status for automatic recovery.

This setup is built like interlocking building blocks. The containers share a common foundation (the base image) to maintain efficiency and organization. Like siblings, the OAuth and MCP containers inherit from the same parent, maintaining consistency across the system. For enhanced security, all processes run as standard users rather than administrators. The system includes specialized storage areas—some strictly locked down for sensitive data like cryptographic keys, others accessible for routine items like logs. Built-in health monitors constantly watch over everything, ready to respond automatically if issues arise.

Nginx Reverse Proxy TLS / OAuth → Request Flow Sequence

```mermaid
sequenceDiagram
    participant Client as MCP Client
    participant Nginx as nginx (TLS)
    participant OAuth as OAuth Server
    participant MCP as MCP Server
    participant Redis as Redis Cache
    Note over Client,Redis: Initial Authentication Flow
    Client->>+Nginx: HTTPS POST /token
    Nginx->>Nginx: TLS Termination
    Nginx->>Nginx: Apply Security Headers
    Nginx->>+OAuth: HTTP POST /token
    OAuth->>OAuth: Verify Credentials
    OAuth->>OAuth: Generate JWT (RS256)
    OAuth-->>-Nginx: JWT Token Response
    Nginx-->>-Client: HTTPS Token Response
    Note over Client,Redis: MCP Tool Invocation
    Client->>+Nginx: HTTPS /mcp/ (Bearer Token)
    Nginx->>Nginx: Validate Headers
    Nginx->>+MCP: HTTP /mcp/ (Forward Auth)
    MCP->>MCP: Verify JWT Signature
    MCP->>+Redis: Check Session Cache
    Redis-->>-MCP: Session Data
    MCP->>MCP: Execute Tool
    MCP-->>-Nginx: Tool Response
    Nginx-->>-Client: HTTPS Response
```

This sequence diagram shows the complete request flow for MCP tool invocation. Clients first authenticate with the OAuth server to obtain a JWT token. For subsequent requests, they include this token in the Authorization header. The nginx proxy handles TLS termination and forwards requests to the appropriate backend. The MCP server verifies the JWT signature and checks Redis for cached session data before executing tools.

Here’s how this sequence works. First, a client obtains a special pass (JWT token) from the security desk (OAuth server). Then, when they need to use a tool, they present this pass at the front door (nginx). The front door handles the security measures (TLS termination and header checks), passes the pass along, and directs them to the appropriate department (backend). Finally, the tool department (MCP server) verifies the pass and checks their information in the quick-access files (Redis) before granting tool access.
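On the wire, the second half of this flow is an ordinary HTTPS request carrying the token. Below is a minimal stdlib sketch that builds, but does not send, such a request. The endpoint and token are placeholders, and `build_mcp_request()` is a hypothetical helper. The `Accept` header lists both `application/json` and `text/event-stream`, which is what the MCP streamable HTTP transport asks clients to send:

```python
import json
import urllib.request


def build_mcp_request(endpoint: str, access_token: str, message: dict) -> urllib.request.Request:
    """Build a POST carrying a JSON-RPC message and the Bearer token (not sent here)."""
    request = urllib.request.Request(
        endpoint, data=json.dumps(message).encode("utf-8"), method="POST"
    )
    # nginx forwards this header unchanged; the MCP server verifies the JWT.
    request.add_header("Authorization", f"Bearer {access_token}")
    request.add_header("Content-Type", "application/json")
    # Streamable HTTP clients accept either a JSON body or an SSE stream.
    request.add_header("Accept", "application/json, text/event-stream")
    return request


req = build_mcp_request(
    "https://localhost:8001/mcp/",
    "placeholder-token",
    {"jsonrpc": "2.0", "id": 1, "method": "tools/list"},
)
```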

Rate Limiter, Keys, Env, Data Flow Diagram

```mermaid
graph LR
    subgraph "Configuration Data"
        ENV[Environment Variables]
        Secrets[Secrets<br/>JWT_SECRET, API_KEYS]
        Config[Config Files<br/>nginx.conf]
    end
    subgraph "Cryptographic Data"
        PrivKey[RSA Private Key<br/>/app/keys/private_key.pem]
        PubKey[RSA Public Key<br/>/app/keys/public_key.pem]
        TLSCert[TLS Certificate<br/>/etc/nginx/certs/server.crt]
        TLSKey[TLS Private Key<br/>/etc/nginx/certs/server.key]
    end
    subgraph "Runtime Data"
        Tokens[JWT Tokens<br/>In-Memory]
        Sessions[Session Data<br/>Redis]
        Logs[Application Logs<br/>/app/logs]
    end
    subgraph "Persistent Storage"
        RedisVol[(redis_data<br/>Volume)]
        LogVol[(logs<br/>Directory)]
    end
    ENV --> OAuth
    ENV --> MCP
    Secrets --> OAuth
    Secrets --> MCP
    Config --> nginx
    PrivKey --> OAuth
    PubKey --> OAuth
    PubKey --> MCP
    TLSCert --> nginx
    TLSKey --> nginx
    OAuth --> Tokens
    MCP --> Sessions
    Sessions --> RedisVol
    Logs --> LogVol
```

The data flow architecture is straightforward: Configuration data like environment variables and secrets are loaded at startup. For security, all cryptographic materials are stored in read-only folders to prevent tampering. During runtime, we maintain JWT tokens in memory (more secure than persistent storage) and keep session information in Redis for rapid access. Data persistence is ensured through Docker volumes, which safely store Redis data and logs even through container restarts.
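Loading configuration at startup is worth making strict: if a required setting is missing, the service should refuse to start instead of failing on the first request. Below is a minimal sketch. The variable names `REDIS_URL`, `OAUTH_ISSUER`, and `PUBLIC_KEY_PATH` are hypothetical; only the default key path comes from the diagram above:

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical names; adapt them to the real deployment's environment.
REQUIRED_SETTINGS = ("REDIS_URL", "OAUTH_ISSUER")


@dataclass(frozen=True)
class ServiceSettings:
    redis_url: str
    oauth_issuer: str
    public_key_path: str


def load_settings(env: Optional[Mapping[str, str]] = None) -> ServiceSettings:
    """Read settings once at startup and fail fast if anything required is missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing required settings: {', '.join(missing)}")
    return ServiceSettings(
        redis_url=env["REDIS_URL"],
        oauth_issuer=env["OAUTH_ISSUER"],
        # Default mirrors the read-only key mount shown in the diagram.
        public_key_path=env.get("PUBLIC_KEY_PATH", "/app/keys/public_key.pem"),
    )
```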

Transport Security Key Architectural Decisions

1. nginx as Reverse Proxy: Provides TLS termination, security headers, and routing in a single layer
2. Container Isolation: Each service runs in its own container with minimal privileges
3. OAuth 2.1 + JWT: Modern authentication with stateless token verification
4. Redis for Sessions: Fast, distributed session storage supporting horizontal scaling
5. Multi-stage Builds: Optimized container images with shared base configuration
6. Non-root Execution: Enhanced security by running processes as unprivileged users
7. Health Checks: Automatic recovery and monitoring capabilities
8. Volume Strategy: Read-only mounts for sensitive data, writable mounts only where necessary
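Stateless token verification means the MCP server decides from the token alone, with no call back to the OAuth server. Once the RS256 signature has been checked against the JWKS key, what remains are plain claim comparisons. Below is a minimal sketch. It assumes a space-separated `scope` claim and uses illustrative issuer and audience values, none of which come from the demo code. `check_claims()` is hypothetical:

```python
import time
from typing import Any, Mapping, Optional


def check_claims(claims: Mapping[str, Any], *, issuer: str, audience: str,
                 required_scope: str, now: Optional[float] = None,
                 leeway: int = 30) -> None:
    """Raise ValueError unless an already signature-verified payload is acceptable."""
    now = time.time() if now is None else now
    if claims.get("iss") != issuer:
        raise ValueError("wrong issuer")
    # "aud" may be a single string or a list of strings.
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if audience not in audiences:
        raise ValueError("wrong audience")
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp + leeway < now:
        raise ValueError("token expired or missing exp")
    if required_scope not in str(claims.get("scope", "")).split():
        raise ValueError(f"missing scope {required_scope}")
```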

Pillar 3: Input Validation — Your Security Scanner

Every input to your MCP server is a potential weapon in an attacker’s arsenal. Command injection vulnerabilities affect nearly half of all MCP implementations because developers trust AI-generated inputs too much. Here’s our “bulletproof” Pydantic v2 validation with Bleach sanitization:

security/validation.py

```python
"""
Input validation and sanitization for MCP security.
Prevents injection attacks and verifies data integrity.
"""
import re
from typing import List

import bleach
from pydantic import BaseModel, Field, field_validator


class SecureTicketRequest(BaseModel):
    """Validates support ticket creation requests."""

    customer_id: str = Field(pattern=r"^[A-Z0-9]{5,10}$", description="Strict ID format")
    subject: str = Field(min_length=1, max_length=200)
    description: str = Field(min_length=1, max_length=2000)
    priority: str

    @field_validator('subject', 'description')
    @classmethod
    def sanitize_text(cls, v):
        """Remove any potential injection attempts."""
        # Strip HTML and dangerous characters
        cleaned = bleach.clean(v, tags=[], strip=True)

        # Prevent command injection patterns
        dangerous_patterns = [
            r'<script',  # XSS attempts
            r'javascript:',  # JavaScript injection
            r'DROP TABLE',  # SQL injection
            r'\$\{.*\}',  # Template injection
            r'`.*`',  # Command substitution
        ]
        for pattern in dangerous_patterns:
            if re.search(pattern, cleaned, flags=re.IGNORECASE):
                raise ValueError(f"Invalid characters detected: {pattern}")

        return cleaned.strip()

    @field_validator('priority')
    @classmethod
    def validate_priority(cls, v):
        """Ensure priority is from allowed list."""
        allowed_priorities = ['low', 'normal', 'high', 'urgent']
        if v not in allowed_priorities:
            raise ValueError(f"Priority must be one of {allowed_priorities}, got {v}")
        return v


class SecureCustomerRequest(BaseModel):
    """Validates customer lookup requests."""

    customer_id: str = Field(pattern=r"^[A-Z0-9]{5,10}$")


class SecureCalculationRequest(BaseModel):
    """Validates financial calculation requests."""

    customer_id: str = Field(pattern=r"^[A-Z0-9]{5,10}$")
    amounts: List[float] = Field(min_length=1, max_length=100)

    @field_validator('amounts')
    @classmethod
    def validate_amounts(cls, v):
        """Ensure all amounts are within acceptable range."""
        for amount in v:
            # Chained comparison also rejects NaN, which fails every comparison
            if not (0 <= amount <= 1000000):
                raise ValueError("Amount must be between 0 and 1,000,000")
        return v
```

**Bleach Library** is a security-focused HTML sanitization library that removes potentially dangerous HTML tags and attributes. Unlike basic string replacement, Bleach understands HTML structure and can safely strip scripting elements while preserving safe formatting. This makes it well suited to user-generated content that might contain embedded HTML or JavaScript.

Let’s break the code down step by step and see how it fits into our pillars.

### Input Validation and Sanitization Module - Code Walkthrough

This module contains no traditional entry points (no `main()` function). Instead, it exports three Pydantic model classes that serve as validation entry points:

- **`SecureTicketRequest`**: Validates support ticket creation

- **`SecureCustomerRequest`**: Validates customer lookup operations

- **`SecureCalculationRequest`**: Validates financial calculations

### High-level Control Flow

1. **Import Time**: Module imports dependencies (re, typing, bleach, pydantic)
2. **Class Definition**: Three validation classes are defined with field validators
3. **Runtime Usage**: External code instantiates these classes with user data
4. **Validation Execution**: Pydantic automatically triggers field validators
5. **Result**: Either returns validated data or raises `pydantic.ValidationError` (a `ValueError` subclass) that wraps the validator's `ValueError`

### Input Validation - Global Sequence Diagram

```mermaid
sequenceDiagram
    participant Client as MCP Client
    participant Model as Pydantic Model
    participant Validator as Field Validator
    participant Bleach as Bleach Library
    participant Regex as Regex Engine
    Client->>Model: Create instance with data
    Model->>Model: Validate field types
    Model->>Validator: Call field validators
    alt Text fields (subject/description)
        Validator->>Bleach: Clean HTML/dangerous chars
        Bleach-->>Validator: Sanitized text
        Validator->>Regex: Check injection patterns
        Regex-->>Validator: Pattern match results
    else Priority field
        Validator->>Validator: Check allowed list
    else Amount fields
        Validator->>Validator: Check numeric ranges
    end
    alt Validation passes
        Validator-->>Model: Cleaned data
        Model-->>Client: Valid model instance
    else Validation fails
        Validator-->>Model: ValueError
        Model-->>Client: Validation error
    end
```

This diagram shows how the security validation works. When an MCP client tries to create a support ticket or perform other operations, it sends data to one of the Pydantic models. The model checks basic requirements first (like data types), then runs special validators that clean dangerous content and check for security threats. If everything passes inspection, the client receives clean, safe data. If problems are found, the client receives an error message explaining what went wrong.

Class-by-Class and Function-by-Function Analysis

SecureTicketRequest Class

Purpose: Validates and sanitizes support ticket creation requests to prevent injection attacks.

Class Attributes:

| Attribute | Type | Description |
|---|---|---|
| customer_id | str | Customer identifier with strict format (5-10 uppercase letters or digits) |
| subject | str | Ticket subject line (1-200 characters) |
| description | str | Detailed ticket description (1-2000 characters) |
| priority | str | Ticket priority level from predefined list |

sanitize_text() Validator

Purpose: Removes HTML tags and detects dangerous injection patterns in text fields.

Signature & Parameters:

| Parameter | Type | Description |
|---|---|---|
| cls | type | Class reference (automatic in classmethod) |
| v | str | Input value to sanitize |
| Returns | str | Cleaned, safe text |
| Raises | ValueError | If dangerous patterns are detected |

Side Effects:

- No I/O operations
- Raises `ValueError` for dangerous input
- Modifies input by removing HTML and surrounding whitespace

sanitize_text Code Listing:

```python
@field_validator('subject', 'description')
@classmethod
def sanitize_text(cls, v):
    """Remove any potential injection attempts."""
    # Strip HTML and dangerous characters
    cleaned = bleach.clean(v, tags=[], strip=True)

    # Prevent command injection patterns
    dangerous_patterns = [
        r'<script',  # XSS attempts
        r'javascript:',  # JavaScript injection
        r'DROP TABLE',  # SQL injection
        r'\$\{.*\}',  # Template injection
        r'`.*`',  # Command substitution
    ]
    for pattern in dangerous_patterns:
        if re.search(pattern, cleaned, flags=re.IGNORECASE):
            raise ValueError(f"Invalid characters detected: {pattern}")

    return cleaned.strip()
```

sanitize_text Mini Sequence Diagram:

```mermaid
sequenceDiagram
    participant Validator as sanitize_text
    participant Bleach as bleach.clean
    participant Regex as re.search
    Validator->>Bleach: Clean HTML (tags=[], strip=True)
    Bleach-->>Validator: HTML-stripped text
    loop For each dangerous pattern
        Validator->>Regex: Search pattern (case-insensitive)
        alt Pattern found
            Validator->>Validator: Raise ValueError
        else Pattern not found
            Validator->>Validator: Continue checking
        end
    end
    Validator->>Validator: Strip whitespace
    Validator-->>Validator: Return cleaned text
```

This function acts as a security guard for text input. It first removes any HTML code that could cause problems. Then it checks for known attack patterns like SQL injection attempts or JavaScript code. If it finds anything suspicious, it rejects the input with an error. If the text is safe, it removes extra spaces and returns the clean version.

To simplify our understanding of this validation process, imagine it as a multi-layered security checkpoint at an airport. First, all input goes through a metal detector (HTML sanitization), then through passport control (pattern matching), and finally through customs (business rule validation). Each layer adds another crucial level of protection against potential security threats.
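`sanitize_text()` passes its patterns to `re.search()` as strings on every call. Python's `re` module caches recently compiled patterns, so this works. Compiling them once at module level, though, makes the cost explicit and keeps the list in one place. Below is a stdlib-only sketch of the same checks. `find_dangerous_pattern()` is a hypothetical helper, not part of the article's module:

```python
import re
from typing import Optional

# Compiled once at import time and reused on every validation call.
DANGEROUS_PATTERNS = tuple(
    re.compile(pattern, re.IGNORECASE)
    for pattern in (
        r"<script",      # XSS attempts
        r"javascript:",  # JavaScript injection
        r"DROP TABLE",   # SQL injection
        r"\$\{.*\}",     # Template injection
        r"`.*`",         # Command substitution
    )
)


def find_dangerous_pattern(text: str) -> Optional[str]:
    """Return the first matching pattern's source string, or None if the text is clean."""
    for compiled in DANGEROUS_PATTERNS:
        if compiled.search(text):
            return compiled.pattern
    return None
```

A denylist like this only catches the spellings it lists. It backs up, rather than replaces, parameterized queries and avoiding shell execution for tool inputs.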

validate_priority() Validator

Purpose: Ensures priority values match the predefined allowed list.

Signature & Parameters:

| Parameter | Type | Description |
|---|---|---|
| cls | type | Class reference (automatic in classmethod) |
| v | str | Priority value to validate |
| Returns | str | Validated priority value |
| Raises | ValueError | If priority not in allowed list |

Side Effects:

- Raises `ValueError` for invalid priorities
- No data modification

validate_priority Code Listing:

```python
@field_validator('priority')
@classmethod
def validate_priority(cls, v):
    """Ensure priority is from allowed list."""
    allowed_priorities = ['low', 'normal', 'high', 'urgent']
    if v not in allowed_priorities:
        raise ValueError(f"Priority must be one of {allowed_priorities}, got {v}")
    return v
```
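Another way to express the same allowlist is an `Enum`, which keeps the allowed values in one place. Pydantic can also validate enum-typed fields directly, so declaring `priority: Priority` would remove the need for a custom validator. Below is a stdlib sketch; `Priority` and `parse_priority()` are illustrative names, not part of the article's code:

```python
from enum import Enum


class Priority(str, Enum):
    """Allowed ticket priorities; the str mixin keeps values comparable to plain strings."""

    LOW = "low"
    NORMAL = "normal"
    HIGH = "high"
    URGENT = "urgent"


def parse_priority(raw: str) -> Priority:
    """Convert raw input to a Priority, or raise ValueError listing the allowed values."""
    try:
        return Priority(raw)
    except ValueError:
        allowed = [p.value for p in Priority]
        raise ValueError(f"Priority must be one of {allowed}, got {raw!r}") from None
```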

SecureCustomerRequest Class

Purpose: Validates customer lookup requests with strict ID format requirements.

Class Attributes:

| Attribute | Type | Description |
|---|---|---|
| customer_id | str | Customer identifier matching pattern `^[A-Z0-9]{5,10}$` |

SecureCalculationRequest Class

Purpose: Validates financial calculation requests with safe numeric ranges.

Class Attributes:

| Attribute | Type | Description |
|---|---|---|
| customer_id | str | Customer identifier with strict format |
| amounts | List[float] | List of monetary amounts (1-100 items) |

validate_amounts() Validator

Purpose: Ensures all monetary amounts fall within acceptable business ranges.

Signature & Parameters:

| Parameter | Type | Description |
|---|---|---|
| cls | type | Class reference (automatic in classmethod) |
| v | List[float] | List of amounts to validate |
| Returns | List[float] | Validated amount list |
| Raises | ValueError | If any amount is outside the 0-1,000,000 range |

Side Effects:

- Raises `ValueError` for out-of-range amounts
- No data modification

validate_amounts Code Listing:

```python
@field_validator('amounts')
@classmethod
def validate_amounts(cls, v):
    """Ensure all amounts are within acceptable range."""
    for amount in v:
        # Chained comparison also rejects NaN, which fails every comparison
        if not (0 <= amount <= 1000000):
            raise ValueError("Amount must be between 0 and 1,000,000")
    return v
```
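Range checks on floats need care. Pydantic v2 accepts `nan` and `inf` for `float` fields by default (see its `allow_inf_nan` setting). Every comparison with NaN is `False`, so a test written as `amount < 0 or amount > 1000000` never fires for it. Below is a stdlib sketch contrasting that form with a chained range check; both function names are illustrative:

```python
from typing import List


def naive_out_of_range(amount: float) -> bool:
    # Both comparisons are False for NaN, so NaN is never flagged.
    return amount < 0 or amount > 1_000_000


def validate_amounts(amounts: List[float]) -> List[float]:
    for amount in amounts:
        # The chained check must be True to pass; NaN makes it False, so NaN is rejected.
        if not (0 <= amount <= 1_000_000):
            raise ValueError("Amount must be between 0 and 1,000,000")
    return amounts
```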

Input Validation Architectural Mapping

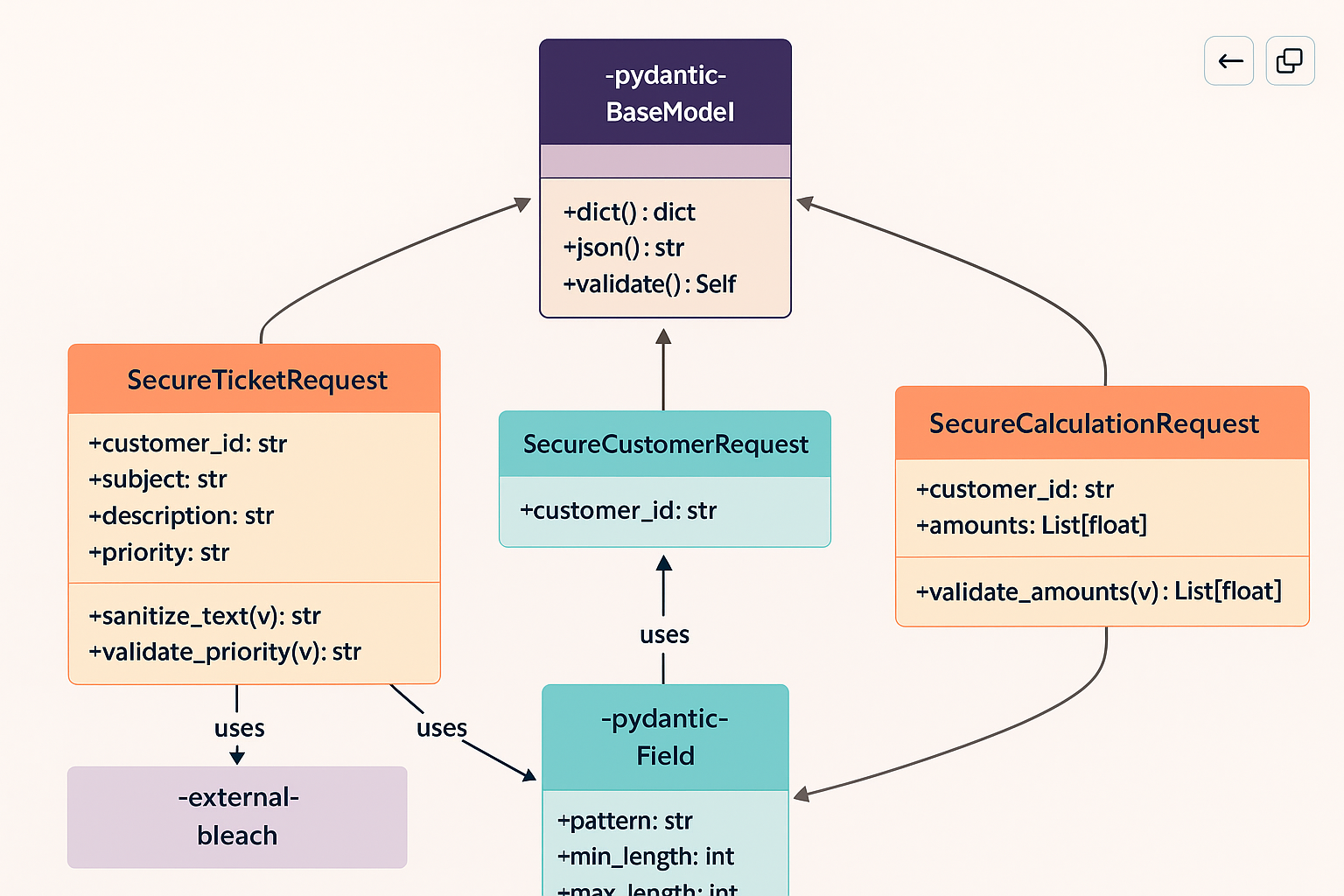

Input Validation Class Diagram

```mermaid
classDiagram
    class BaseModel {
        <<pydantic>>
    }
    class SecureTicketRequest {
        +customer_id: str
        +subject: str
        +description: str
        +priority: str
        +sanitize_text(v) str
        +validate_priority(v) str
    }
    class SecureCustomerRequest {
        +customer_id: str
    }
    class SecureCalculationRequest {
        +customer_id: str
        +amounts: List[float]
        +validate_amounts(v) List[float]
    }
    BaseModel <|-- SecureTicketRequest
    BaseModel <|-- SecureCustomerRequest
    BaseModel <|-- SecureCalculationRequest
    class SecurityPatterns {
        <<enumeration>>
        XSS_PATTERN
        JS_INJECTION
        SQL_INJECTION
        TEMPLATE_INJECTION
        COMMAND_INJECTION
    }
    SecureTicketRequest ..> SecurityPatterns : uses
```

This diagram shows the three security validation classes, all inheriting from Pydantic’s BaseModel. Each class specializes in validating different types of MCP operations. SecureTicketRequest is the most complex, with validators for text sanitization and priority checking. The SecurityPatterns enumeration (shown conceptually) represents the dangerous patterns that the text validator checks against.

Input Validation - System Architecture Position

```mermaid
graph TB
    subgraph "MCP Client Layer"
        Client[MCP Client Request]
    end
    subgraph ValidationLayer [Validation Layer]
        Models[Pydantic Models]
        Validators[Field Validators]
        Bleach[Bleach Sanitizer]
    end
    subgraph "Business Logic Layer"
        Tools[MCP Tools]
        Database[Data Storage]
    end
    Client -->|Raw Input| Models
    Models -->|Triggers| Validators
    Validators -->|Uses| Bleach
    Models -->|Clean Data| Tools
    Tools -->|Safe Operations| Database
    style ValidationLayer fill:#e1f5fe
```

**Description**: This module sits in the Validation Layer, acting as a security gateway between MCP clients and business logic. It intercepts all incoming data, sanitizes dangerous content, and validates business rules before allowing data to proceed to MCP tools and storage.

### Layer Interfaces

- **Input Interface**: Accepts raw Python dictionaries or keyword arguments

- **Output Interface**: Returns validated Pydantic model instances or raises `pydantic.ValidationError` (a subclass of `ValueError`)

- **Dependencies**: Requires `bleach` for HTML sanitization and `re` for pattern matching

### Cross-cutting Concerns

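1. **Security**: Primary concern - prevents injection attacks across all input types
2. **Data Integrity**: Ensures data meets business rules (ID formats, amount ranges)
3. **Error Handling**: Provides descriptive error messages for validation failures
4. **Performance**: The regex patterns are plain strings compiled on first use; Python's `re` module caches compiled patterns, so repeated validations avoid recompiling (precompiling them with `re.compile()` at import time would make this explicit)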

### Input Validation - Summary

This input validation module provides comprehensive security for MCP operations through:

1. **Strict Type Validation**: Pydantic verifies correct data types
2. **Pattern Matching**: Regular expressions enforce ID formats
3. **Content Sanitization**: Bleach removes dangerous HTML/scripts
4. **Injection Prevention**: Detects and blocks common attack patterns
5. **Business Rule Enforcement**: Validates priorities and amount ranges

The module follows the principle of "fail fast": it rejects invalid input immediately rather than allowing it deeper into the system. This defense-in-depth approach complements other security layers, such as authentication and encryption, and provides robust protection against malicious input.

For further discussion of this, see our wiki under [security - validation](https://github.com/RichardHightower/mcp_security/wiki/security-%E2%80%90validation.py).

## Pillar 4: Rate Limiting — Your Traffic Controller

AI operations are expensive, and attackers know it. Without rate limiting, a malicious actor can drain your resources faster than you can say "token limit exceeded."

Here's our “production-ready” [rate limiter with in-memory storage](https://github.com/RichardHightower/mcp_security/blob/main/src/security/rate_limiting.py) and a hook for a Redis backend. It is still example code meant to demonstrate the concept: the listing accepts a Redis client but implements only the in-memory path.

### security/rate_limiting.py

```python
"""
Rate limiting implementation for MCP security.

Protects against abuse and denial-of-service attacks.
"""

import time
from collections import defaultdict
from typing import DefaultDict, Dict, List, Optional, Tuple


class RateLimiter:
    """
    Rate limiter with sliding window implementation.

    Uses in-memory storage with Redis fallback capability.
    """

    def __init__(self,
                 requests_per_minute: int = 60,
                 token_limit_per_hour: int = 100000,
                 redis_client=None, **kwargs):
        """
        Initialize rate limiter.

        Args:
            requests_per_minute: Max requests per minute per user
            token_limit_per_hour: Max AI tokens per hour per user
            redis_client: Optional Redis client for distributed rate limiting
        """
        self.requests_per_minute = requests_per_minute
        self.token_limit_per_hour = token_limit_per_hour
        # Stored for a distributed backend; not used elsewhere in this listing
        self.redis_client = redis_client

        # In-memory storage for rate limiting
        self.request_counts: DefaultDict[str, List[float]] = defaultdict(list)
        self.token_counts: DefaultDict[str, List[Tuple[float, int]]] = defaultdict(list)

    async def check_rate_limit(self, user_id: str,
                               estimated_tokens: int = 0) -> Optional[Dict]:
        """
        Check if request should be allowed based on rate limits.

        Returns:
            None if allowed, dict with error details if rate limited
        """
        current_time = time.time()

        # Clean old entries and check request rate limit
        minute_ago = current_time - 60
        self.request_counts[user_id] = [
            timestamp for timestamp in self.request_counts[user_id]
            if timestamp > minute_ago
        ]

        if len(self.request_counts[user_id]) >= self.requests_per_minute:
            return {
                "error": "Rate limit exceeded",
                "limit_type": "requests",
                "retry_after": 60  # worst-case wait: one full window
            }

        # Check token rate limit if tokens specified
        if estimated_tokens > 0:
            hour_ago = current_time - 3600
            self.token_counts[user_id] = [
                (timestamp, tokens)
                for timestamp, tokens in self.token_counts[user_id]
                if timestamp > hour_ago
            ]

            total_tokens = sum(tokens for _, tokens in self.token_counts[user_id])

            if total_tokens + estimated_tokens > self.token_limit_per_hour:
                return {
                    "error": "Token rate limit exceeded",
                    "limit_type": "tokens",
                    "retry_after": 3600  # worst-case wait: one full window
                }

            # Record token usage
            self.token_counts[user_id].append((current_time, estimated_tokens))

        # Record request
        self.request_counts[user_id].append(current_time)

        return None
```

Our implementation prioritizes **memory-based rate limiting** for speed and simplicity, and it accepts a `redis_client` so that a Redis backend can be added for distributed deployments. It handles **sliding window calculations** by discarding a user's expired timestamps on every check. This keeps each user's history bounded to a single window, although entries for users who stop sending requests are never removed.
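The listing rebuilds each user's timestamp list with a comprehension on every call, which costs time proportional to the window's size. A common variant of the same sliding-window-log technique keeps a `collections.deque` per user and pops expired timestamps from the left. Because timestamps are appended in increasing order, only the expired entries are touched. This is a swapped-in variant for illustration, not the repository's code. `SlidingWindowLog` and its `now` parameter are hypothetical; the `now` argument exists only to make the behavior testable. The sketch also uses `time.monotonic()`, which cannot jump backwards when the system clock is adjusted. The listing's `time.time()` remains the right choice once timestamps must be compared across machines, as with a shared Redis store.

```python
import time
from collections import defaultdict, deque
from typing import DefaultDict, Deque, Optional


class SlidingWindowLog:
    """Hypothetical per-key limiter: at most `limit` events per `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.events: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        log = self.events[key]
        # Expired timestamps sit at the left end; drop them (same cutoff as the listing).
        while log and log[0] <= now - self.window:
            log.popleft()
        if len(log) >= self.limit:
            return False
        log.append(now)
        return True

    def retry_after(self, key: str, now: Optional[float] = None) -> float:
        """Seconds until the oldest event leaves the window (0 if a slot is free)."""
        now = time.monotonic() if now is None else now
        log = self.events.get(key)
        if not log or len(log) < self.limit:
            return 0.0
        return max(0.0, log[0] + self.window - now)
```

The `retry_after()` method shows one more refinement. Instead of the listing's fixed `retry_after: 60`, it reports how long it will be until the oldest request actually leaves the window.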

(Note: we go into more detail below than you probably care about, but if you are interested, check out this rate limiting discussion and areas for improvement.)

### Rate Limiting Module - Code Walkthrough

#### High-level Control Flow

1. **Import Time**: Module imports required dependencies (`time`, `collections`, `typing`)
2. **Class Definition**: `RateLimiter` class is defined with its methods
3. **Runtime Instantiation**: External code creates `RateLimiter` instances
4. **Request Validation**: `check_rate_limit()` is awaited for each incoming request
5. **Result**: Either allows the request (returns `None`) or blocks it (returns an error dict)
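Steps 3 to 5 can be sketched from the caller's side. The one detail that is easy to miss is that `check_rate_limit()` is declared `async`, so it must be awaited. `StubLimiter` and `handle_request` are hypothetical stand-ins that mimic the listing's return contract (`None` or an error dict) without reproducing its logic.

```python
import asyncio
from typing import Dict, List, Optional


class StubLimiter:
    """Hypothetical stand-in with the same async check_rate_limit() contract.

    It simply allows the first `allowed` calls and blocks the rest.
    """

    def __init__(self, allowed: int) -> None:
        self.remaining = allowed

    async def check_rate_limit(self, user_id: str,
                               estimated_tokens: int = 0) -> Optional[Dict]:
        if self.remaining <= 0:
            return {"error": "Rate limit exceeded",
                    "limit_type": "requests", "retry_after": 60}
        self.remaining -= 1
        return None


async def handle_request(limiter, user_id: str) -> str:
    # Step 4: the check runs before any expensive work is done.
    result = await limiter.check_rate_limit(user_id, estimated_tokens=500)
    # Step 5: None means "allowed"; a dict means "blocked".
    if result is not None:
        return f"blocked ({result['limit_type']}), retry in {result['retry_after']}s"
    return "processed"


async def main() -> List[str]:
    limiter = StubLimiter(allowed=2)  # step 3: create once, reuse for every request
    return [await handle_request(limiter, "alice") for _ in range(3)]


results = asyncio.run(main())
```

Forgetting the `await` returns a coroutine object, which is never `None`, so a check like the one above would misfire on every request.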

#### Rate Limiting - Global Sequence Diagram

```mermaid
sequenceDiagram
    participant Client as MCP Client
    participant API as API Endpoint
    participant Limiter as RateLimiter
    participant Storage as In-Memory Storage
    participant Redis as Redis (Optional)

    Client->>API: Request with user_id
    API->>Limiter: check_rate_limit(user_id, tokens)
    Limiter->>Limiter: Get current time
    Limiter->>Storage: Clean old entries (>60s)
    Limiter->>Storage: Count recent requests
    alt Request limit exceeded
        Limiter-->>API: Error dict (retry_after: 60)
        API-->>Client: 429 Too Many Requests
    else Within request limit
        opt Token limit check needed
            Limiter->>Storage: Clean old tokens (>3600s)
            Limiter->>Storage: Sum token usage
        end
        alt Token limit exceeded
            Limiter-->>API: Error dict (retry_after: 3600)
            API-->>Client: 429 Too Many Requests
        else Within token limit
            opt Tokens estimated
                Limiter->>Storage: Record token usage
            end
            Limiter->>Storage: Record request time
            Limiter-->>API: None (allowed)
            API-->>Client: Process request
        end
    end
```

This diagram shows how rate limiting protects the MCP server from overuse. When a client makes a request, the rate limiter checks two things: how many requests the user has made in the last minute, and how many AI tokens they’ve used in the last hour. If either limit is exceeded, the request is blocked with instructions on when to retry. If both checks pass, the request proceeds and the usage is recorded for future checks.

Think of our rate limiter as a savvy bouncer at a club—it monitors two key things: your server request frequency (requests per minute) and your AI resource usage (token consumption per hour). If you exceed either limit, it’ll politely let you know when to try again. Stay within the limits, and you’re welcome to proceed—the bouncer just logs your visit to keep tabs.
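In the diagram, the "429 Too Many Requests" step is left to the API layer. As a hedged sketch, a hypothetical `to_http_response()` helper could translate the limiter's return value into a status code, headers, and a JSON body. `Retry-After` is the standard HTTP header (RFC 9110, referenced for 429 by RFC 6585) for telling clients how long to wait. Wiring this into a specific web framework is omitted.

```python
import json
from typing import Dict, Optional, Tuple


def to_http_response(limit_result: Optional[Dict]) -> Optional[Tuple[int, Dict[str, str], str]]:
    """Translate check_rate_limit() output into an HTTP 429 response.

    Returns None when the request may proceed; otherwise a
    (status_code, headers, json_body) tuple.
    """
    if limit_result is None:
        return None  # allowed: let the normal handler run
    retry_after = int(limit_result.get("retry_after", 60))
    headers = {
        "Content-Type": "application/json",
        # Retry-After as delay-seconds tells well-behaved clients when to come back.
        "Retry-After": str(retry_after),
    }
    body = json.dumps({
        "error": limit_result.get("error", "Rate limit exceeded"),
        "limit_type": limit_result.get("limit_type"),
        "retry_after": retry_after,
    })
    return 429, headers, body
```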

#### Rate Limiting - Function-by-Function Analysis

**`RateLimiter` Class**

**Purpose**: Implements sliding window rate limiting to prevent API abuse and protect system resources.

**Instance Attributes**:

| Attribute | Type | Description |
|---|---|---|
| `requests_per_minute` | `int` | Maximum requests allowed per minute per user |
| `token_limit_per_hour` | `int` | Maximum AI tokens allowed per hour per user |
| `redis_client` | `Optional[Redis]` | Optional Redis client for distributed rate limiting |
| `request_counts` | `DefaultDict[str, List[float]]` | Timestamps of requests per user |
| `token_counts` | `DefaultDict[str, List[Tuple[float, int]]]` | Token usage history per user, as `(timestamp, tokens)` pairs |
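The token check behind `token_counts` is plain arithmetic. Sum what was spent in the last 3,600 seconds, add the new estimate, and compare the total with `token_limit_per_hour`. The sketch below isolates that calculation in a hypothetical `remaining_tokens()` helper, which is not part of the listing, and works through one set of numbers.

```python
from typing import List, Tuple


def remaining_tokens(history: List[Tuple[float, int]], now: float,
                     limit: int = 100_000, window: float = 3600.0) -> int:
    """Tokens still available in the current window, mirroring the listing's rule."""
    # Same cutoff as check_rate_limit(): keep entries strictly newer than now - window.
    spent = sum(tokens for timestamp, tokens in history if timestamp > now - window)
    return max(0, limit - spent)


# Worked example: 60,000 tokens spent 90 minutes ago have expired;
# 70,000 tokens spent 10 minutes ago still count against the limit.
history = [(0.0, 60_000), (4_800.0, 70_000)]
now = 5_400.0
budget = remaining_tokens(history, now)  # 100,000 - 70,000 = 30,000
allowed = 25_000 <= budget               # 70k + 25k = 95k, within the limit
blocked = not (35_000 <= budget)         # 70k + 35k = 105k, over the limit
```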

**`__init__()` Constructor**

**Purpose**: Initializes the rate limiter with configurable limits and optional Redis support.

**Signature & Parameters**:

| Parameter | Type | Default | Description |
|---|---|---|---|
| `self` | `RateLimiter` | - | Instance reference |
| `requests_per_minute` | `int` | `60` | Maximum requests per minute per user |
| `token_limit_per_hour` | `int` | `100000` | Maximum AI tokens per hour per user |
| `redis_client` | `Optional[Redis]` | `None` | Redis client for distributed limiting |
| `**kwargs` | `dict` | - | Additional keyword arguments (unused) |

- Initializes in-memory storage structures

- Stores Redis client reference if provided
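The listing stores `redis_client` but never calls it. If you wanted the distributed path that the docstring mentions, one widely used approach is a Redis sorted set per user, scored by timestamp. The sketch below assumes a redis-py style client and calls only `zremrangebyscore`, `zcard`, `zadd`, and `expire`. The `redis_allow()` function and the key naming are hypothetical. The four calls are not atomic, so concurrent requests can slightly overshoot the limit. Production code would wrap them in a `MULTI`/`EXEC` pipeline or a Lua script.

```python
import time
import uuid
from typing import Any, Optional


def redis_allow(client: Any, user_id: str, limit: int = 60,
                window: int = 60, now: Optional[float] = None) -> bool:
    """Sliding-window request check backed by one Redis sorted set per user.

    Hypothetical helper: `client` is assumed to be a redis-py style client
    (for example `redis.Redis(...)`); key naming is illustrative only.
    """
    # Wall-clock time, because every server sharing Redis must agree on it.
    now = time.time() if now is None else now
    key = f"ratelimit:requests:{user_id}"

    # Remove entries at or before the window start (same cutoff as the listing).
    client.zremrangebyscore(key, "-inf", now - window)
    if client.zcard(key) >= limit:
        return False

    # Score = timestamp; a random suffix keeps two requests made at the
    # same instant from collapsing into one sorted-set member.
    client.zadd(key, {f"{now}:{uuid.uuid4().hex}": now})
    # Let keys for idle users expire instead of accumulating forever.
    client.expire(key, window)
    return True
```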

**`__init__` Code Listing**:

```python
def __init__(self, requests_per_minute: int = 60,
             token_limit_per_hour: int = 100000,
             redis_client=None, **kwargs):
    """
    Initialize rate limiter.

    Args:
        requests_per_minute: Max requests per minute per user
        token_limit_per_hour: Max AI tokens per hour per user
        redis_client: Optional Redis client for distributed rate limiting
    """
    self.requests_per_minute = requests_per_minute
    self.token_limit_per_hour = token_limit_per_hour
    self.redis_client = redis_client

    # In-memory storage for rate limiting
    self.request_counts: DefaultDict[str, List[float]] = defaultdict(list)
    self.token_counts: DefaultDict[str, List[Tuple[float, int]]] = defaultdict(list)
```

**`check_rate_limit()` Method**

**Purpose**: Validates whether a request should be allowed based on rate limits for both request frequency and token usage.

**Signature & Parameters**:

| Parameter | Type | Default | Description |

|---|---|---|---|

self |