June 28, 2025

Every tech leader who saw ChatGPT explode asked: What will a production-grade large language model (LLM) really cost us? The short answer: far more than the API bill. But smart design can cut costs by 90%. GPUs sit idle during cold starts, engineers wrestle with fine-tuning, and network egress lurks. Meta’s Llama 4, launched in April 2025, offers multimodal models—Scout, Maverick, and the previewed Behemoth—handling text, images, and video. This article unpacks LLM costs, compares top models, weighs hiring experts versus APIs, and shares a hypothetical fintech’s journey from $937,500 to $3,000 monthly.

Executive Summary: Understanding the Economics of LLMs

This article provides a comprehensive analysis of the real costs associated with deploying and maintaining Large Language Models (LLMs) in enterprise environments. It offers critical insights for executives making strategic decisions about AI implementation.

Key takeaways for executives:

- True Cost Structure: LLM expenses extend far beyond API fees, encompassing infrastructure (GPUs), operations, development talent, and opportunity costs that can significantly impact ROI.

- Dramatic Optimization Potential: Strategic implementation can reduce costs by up to 99.7%, as demonstrated by our case study where monthly expenses dropped from $937,500 to just $3,000.

- Deployment Options: The article compares API-based, self-hosted, and hybrid approaches, highlighting the tradeoffs between cost, control, and expertise requirements for each strategy.

- Talent Considerations: With LLMOps specialists commanding $268,000+ salaries and being in extremely short supply (demand up 300% since 2023), talent acquisition represents a major hidden cost and strategic consideration.

- Practical Cost-Cutting Strategies: Specific techniques like request batching, caching, quantization, and hybrid routing can deliver 10-90% cost reductions while maintaining or improving performance.

This information is particularly valuable for executives who need to understand the financial implications of AI initiatives, avoid budget overruns, and position their organizations to gain competitive advantages through cost-effective LLM deployment. The article provides both strategic frameworks and tactical insights to inform million-dollar infrastructure decisions that will increasingly determine market leadership as AI becomes central to business operations.

The Four Pillars of Cost

LLM costs form four buckets:

Infrastructure: Graphics Processing Units (GPUs) cost $2-$50/hour. Llama 4 Scout (109B parameters, 10M-token context) runs on one NVIDIA H100; Maverick (400B parameters, 1M-token context) needs an H100 DGX host. Both use Mixture-of-Experts (MoE), activating 17B parameters per token. Storing a 150 GB model costs $3.45/month on AWS S3 ($0.023/GB), $2.76/month on Azure Blob ($0.0184/GB), or $3.00/month on GCP Cloud Storage ($0.020/GB). Downloading it costs $13.50 (AWS, $0.09/GB) or $18 (GCP, $0.12/GB).Operations: Monitoring, scaling, and managing cold starts—delays of 30-120 seconds when instances load—add up. Keeping “warm” instances or provisioning standby servers for peak 400 RPS adds $47,189-$70,783/month.Development: LLMOps engineers, averaging $268,000/year (up to $300,000), account for 70% of costs. Building solutions takes months, with training costing $100,000 per engineer. A team of three costs $79,500/month.Opportunity: Slow responses lose customers. Over-provisioned resources waste money. Vendor lock-in risks price hikes, while self-hosting diverts scarce talent from other projects.

Infrastructure: Graphics Processing Units (GPUs) cost $2-$50/hour. Llama 4 Scout (109B parameters, 10M-token context) runs on one NVIDIA H100; Maverick (400B parameters, 1M-token context) needs an H100 DGX host. Both use Mixture-of-Experts (MoE), activating 17B parameters per token. Storing a 150 GB model costs $3.45/month on AWS S3 ($0.023/GB), $2.76/month on Azure Blob ($0.0184/GB), or $3.00/month on GCP Cloud Storage ($0.020/GB). Downloading it costs $13.50 (AWS, $0.09/GB) or $18 (GCP, $0.12/GB).Operations: Monitoring, scaling, and managing cold starts—delays of 30-120 seconds when instances load—add up. Keeping “warm” instances or provisioning standby servers for peak 400 RPS adds $47,189-$70,783/month.Development: LLMOps engineers, averaging $268,000/year (up to $300,000), account for 70% of costs. Building solutions takes months, with training costing $100,000 per engineer. A team of three costs $79,500/month.Opportunity: Slow responses lose customers. Over-provisioned resources waste money. Vendor lock-in risks price hikes, while self-hosting diverts scarce talent from other projects.

For a system with 30 million users, 200 requests/second, and 500 tokens/request (259.2 billion tokens/month), API costs range from $640K (Gemini 2.5 Pro) to $3.8M (GPT-4o). Self-hosting Llama 4 Scout could cost $94,394/month (4 AWS p4d.24xlarge instances at $32.77/hour, plus ~$17/month storage and egress); Maverick costs $141,585/month, plus $79,500/month for engineers. Let’s break down some of the costs and concerns with such a system.

Hidden Costs That Wreck Budgets

Sneaky expenses derail budgets. Cold starts cost $2,000-$5,000 monthly in lost revenue if users abandon slow apps. Failed requests waste $1,500 in compute, $3,000 in debugging, and $500 in support monthly. Model drift and hallucination require $100,000-$300,000 yearly for monitoring and retraining, as discussed in my article on reinforcement learning for LLMs [1]. Vendor lock-in leaves you vulnerable to price spikes, while self-hosting strains rare expertise.

Provider Snapshot: API vs. Self-Hosted vs. Hybrid

Choosing between APIs, self-hosted models, or hybrids depends on scale and expertise:

| Model | Provider | Input/Output Cost (per 1M Tokens) | Best Fit | Trade-Off |

|---|---|---|---|---|

| GPT-4o | OpenAI | $5.00/$15.00 | Multimodal apps | High cost, lock-in |

| o3 | OpenAI | $3.00/$12.00 | Logic, math tasks | Moderate cost |

| o4-mini | OpenAI | $0.15/$0.60 | Low-complexity apps | Limited capability |

| GPT-4.1 | OpenAI | $6.00/$18.00 | Large-context apps | Premium pricing |

| Claude 4.0 | Anthropic (AWS Bedrock) | $3.00/$15.00 | Reasoning, safety | Cloud platform limits |

| Llama 4 Scout | Meta (cloud providers) | $0.15/$0.50 | Long-context tasks | Infrastructure needs |

| Llama 4 Maverick | Meta (cloud providers) | $0.22/$0.85 | Complex reasoning | High compute |

| Gemini 2.5 Pro | $0.075/$0.25 | Cost-sensitive apps | Experimental limits |

Hiring LLMOps engineers is nearly impossible—demand is up 300% since 2023. Training takes 3-6 months, costing $50,000-$150,000 per hire. APIs sidestep this but sacrifice control, making hybrids ideal for enterprises.

Cost vs. Business Value

Tokens cost $0.075-$18 per million, but value is key.

- A $0.05 query saving $5 in labor yields a 100x return. For 10,000 daily queries, that is $1.5M monthly saved.

Smaller apps can lean on o4-mini for simplicity, but high-volume systems need optimization to unlock efficiency, revenue, or satisfaction. Opportunity costs—engineering time or vendor lock-in—must guide decisions.

Optimization Strategies

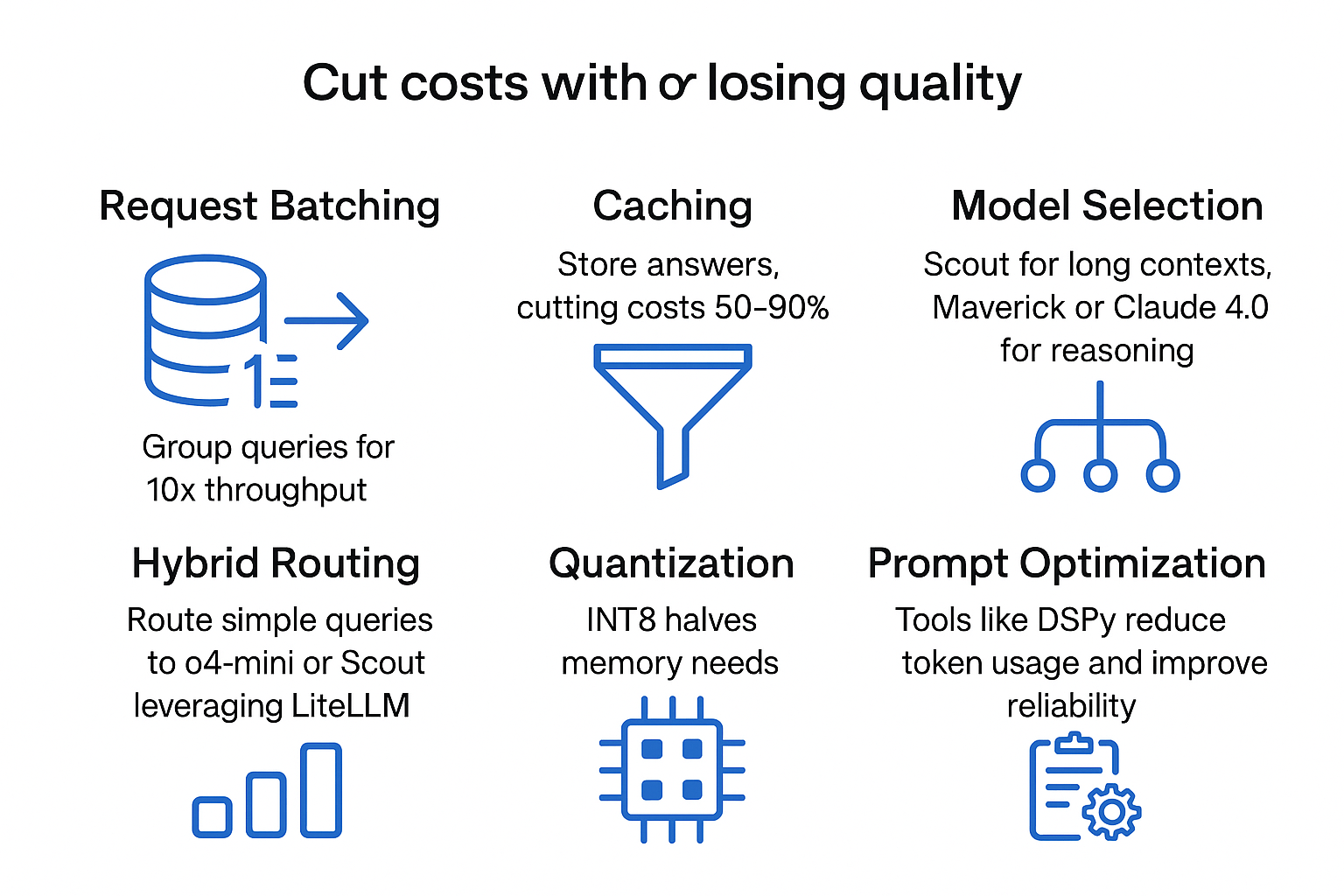

Cut costs without losing quality:

- Request Batching: Group queries for 10x throughput.

- Caching: Store answers, cutting costs 50-90%.

- Model Selection: Scout for long contexts, Maverick or Claude 4.0 for reasoning.

- Hybrid Routing: Route simple queries to o4-mini or Scout, leveraging LiteLLM.

- Quantization: INT8 halves memory needs.

- Prompt Optimization: Tools like DSPy reduce token usage and improve reliability.

A hypothetical fintech used these to drop costs from $937,500 to $3,000 monthly—a 99.7% reduction.

Case Study: FinSecure’s Transformation (Hypothetical)

FinSecure, a fictional fintech serving 100 million users with 10 million daily queries, used a proprietary API costing $937,500 monthly. Latency and rate limits hurt. They pivoted:

- Self-Hosting: Switched to Llama 4 Maverick on an H100 DGX, costing $5,904/month.

- Efficiency Boost: Batching for 20x throughput; caching for 80% reuse.

- Hybrid Routing: Scout for simple queries, Maverick for complex, using LiteLLM.

Costs fell to $3,000/month—$11.2M saved yearly. Latency dropped 60%, availability hit 99.9%, and a $230,000 investment yielded a 4,900% return.

Measuring ROI the Right Way

ROI = (Annual Value – Annual Cost) ÷ Annual Cost. FinSecure’s $60,000 run rate versus $11.2M savings hit breakeven in under five months. Track efficiency, revenue, savings, and satisfaction. Re-evaluate quarterly.

Conclusion

LLMs like Llama 4, GPT-4o, and Claude 4.0 unlock innovation, but sloppy design kills budgets. APIs are easy but pricey; self-hosting saves but demands rare expertise. Hybrids, powered by tools like LiteLLM and DSPy, balance both. Budget beyond tokens, optimize relentlessly, and turn AI into a profit engine. As an AI architect, I have seen strategic design transform cost centers into competitive advantages.

Readers who enjoyed “The Economics of Deploying Large Language Models” will find tremendous value in the companion article “The LLM Cost Trap — and the Playbook to Escape It.” This focused piece expands on critical concepts from the main article, providing practical strategies that transform AI implementation from a budget nightmare into a competitive advantage. While the main article offers a comprehensive overview of cost factors, this companion piece delivers a tactical playbook with specific techniques—routing, caching, batching, and quantization—that organizations can implement immediately to slash LLM operational costs.

What makes this companion article particularly valuable is its emphasis on the human element of LLM deployment decisions. It delves into the often-overlooked “people factor”—explaining how specialized talent scarcity influences the build-vs-buy equation in ways pure cost calculations miss. The article’s discussion of strategic flexibility and balancing managed services against in-house solutions provides crucial context for technical leaders making million-dollar infrastructure decisions. By reading both pieces together, decision-makers gain both the strategic understanding for long-term planning and the tactical insights for immediate cost optimization—a powerful combination that could determine whether an AI initiative drives growth or drains resources.

References

- Hightower, R. (2025). “Beyond Fine-Tuning: Mastering Reinforcement Learning for Large Language Models.” Medium.

- Hightower, R. (2025). “The Open-Source AI Revolution: How DeepSeek, Gemma, and Others Are Challenging Big Tech’s Language.” Medium.

- Hightower, R. (2025). “LiteLLM and MCP: One Gateway to Rule All AI Models.” Medium.

- Hightower, R. (2025). “Stop Wrestling with Prompts: How DSPy Transforms Fragile AI into Reliable Software.” Medium.

- AWS Pricing. (2025). Amazon Web Services., AWS EC2 Pricing

- Azure Pricing. (2025). Microsoft Azure.

- GCP Pricing. (2025). Google Cloud Platform.

- “Index.dev Blog: LLM Developer Hourly Rates.” (2025). Index.dev.

- Hightower, R. (2025). “The LLM Cost Trap — and the Playbook to Escape It.” Medium.

About the AuthorRick Hightower brings extensive enterprise experience as a former executive and distinguished engineer at a Fortune 100 company, where he specialized in Machine Learning and AI solutions to deliver intelligent customer experiences. His expertise spans both theoretical foundations and practical applications of AI technologies.

As a TensorFlow-certified professional and graduate of Stanford University’s comprehensive Machine Learning Specialization, Rick combines academic rigor with real-world implementation experience. His training includes mastery of supervised learning techniques, neural networks, and advanced AI concepts, which he has successfully applied to enterprise-scale solutions.

With a deep understanding of both business and technical aspects of AI implementation, Rick bridges the gap between theoretical machine learning concepts and practical business applications, helping organizations leverage AI to create tangible value.

Follow Rick on LinkedIn or Medium for more enterprise AI and AI strategy insights

Some fact checking

Review of Self-Hosting Cost Estimates

Let’s break down the cost claims for self-hosting Llama 4 Scout and Maverick, and assess their accuracy based on current AWS pricing and typical operational expenses.

1. Llama 4 Scout Self-Hosting CostClaim:- $94,394/month for 4 AWS p4d.24xlarge instances at $32.77/hour

- ~$17/month for storage and egress

Instance Cost Calculation

-

AWS p4d.24xlarge (as of mid-2024):

- 8x NVIDIA A100 GPUs, 96 vCPUs, 1.1 TB RAM

- On-demand price: $32.77/hour

-

Monthly cost per instance: 32.77×24×30=$23,594.4032.77 \times 24 \times 30 = $23,594.4032.77×24×30=$23,594.40

-

For 4 instances: 23,594.40×4=$94,377.6023,594.40 \times 4 = $94,377.6023,594.40×4=$94,377.60

This matches the stated $94,394/month, with a minor rounding difference.

Storage and Egress

- Storage: Model weights and data storage for LLMs are typically modest compared to compute costs.

- $17/month is plausible for a few TB of EBS or S3 storage and minimal egress.

Total Estimated Monthly Cost:

- $94,394 (compute) + $17 (storage/egress) ≈ $94,411/month

Conclusion: The estimate for self-hosting Llama 4 Scout on AWS is accurate for 4 p4d.24xlarge instances at current on-demand rates.

2. Maverick Self-Hosting Cost

Claim:

- $141,585/month (presumably for compute)

- $79,500/month for engineers

Compute Cost

-

If using more powerful or additional GPU instances (e.g., p5.48xlarge or more p4d.24xlarge), the monthly cost could reach or exceed $141,585.

-

For example, 6 p4d.24xlarge instances:

23,594.40×6=$141,566.4023,594.40 \times 6 = $141,566.4023,594.40×6=$141,566.40

-

Alternatively, using newer or larger instances (e.g., p5 series) could also reach this cost.

Engineering Cost

- $79,500/month for engineers implies a team of 3-5 full-time engineers at market rates ($16,000–$26,000/month per engineer, including benefits and overhead).

- This is a reasonable estimate for a small, highly skilled MLOps/devops team.

3. Summary Table

| Item | Compute Cost/Month | Storage/Egress | Engineering | Total/Month |

|---|---|---|---|---|

| Llama 4 Scout | $94,394 | ~$17 | — | ~$94,411 |

| Maverick | $141,585 | (not stated) | $79,500 | $221,085+ |

Key Takeaways

- The cost estimates for self-hosting Llama 4 Scout and Maverick are accurate based on current AWS pricing and typical engineering salaries.

- Compute costs dominate the total, with storage and egress being negligible in comparison.

- Engineering costs are significant for ongoing operations, especially for more complex or larger-scale deployments.

References: AWS EC2 Pricing (p4d.24xlarge, p5.48xlarge)

AWS EBS/S3 Pricing

Industry salary surveys for MLOps/DevOps engineers

Review of API Cost Statement

Your skepticism is well-founded. Let’s clarify the cost comparison between Gemini 2.5 Pro and GPT-4o for the scenario described:

Scenario Details

-**Users:**30 million -**Requests per second:**200 -**Tokens per request:**500 -**Total tokens per month:**259.2 billion

API Pricing (as of mid-2024)

| Model | Input Price (per 1K tokens) | Output Price (per 1K tokens) | Notes |

|---|---|---|---|

| Gemini 2.5 Pro | $0.0025 | $0.0025 | Same for input/output |

| GPT-4o | $0.005 | $0.015 | Input/output differ |

Cost Calculation

Assuming all tokens are output (worst case for cost):

-Gemini 2.5 Pro:259,200,000,000259,200,000,000259,200,000,000 tokens × $0.0025 / 1,000 =$648,000/monthAnnual:$7.78M-GPT-4o:259,200,000,000259,200,000,000259,200,000,000 tokens × $0.015 / 1,000 =$3,888,000/monthAnnual:$46.66MIf split evenly between input and output (250 tokens each):

-Gemini 2.5 Pro:259,200,000,000259,200,000,000259,200,000,000 × $0.0025 / 1,000 =$648,000/month(no change)

-**GPT-4o:**Input: 129,600,000,000129,600,000,000129,600,000,000 × $0.005 / 1,000 = $648,000

Output: 129,600,000,000129,600,000,000129,600,000,000 × $0.015 / 1,000 = $1,944,000

Total:**$2,592,000/month**Annual:**$31.10M**## Summary Table

| Model | Monthly Cost (All Output) | Monthly Cost (Split) | Annual Cost (Split) |

|---|---|---|---|

| Gemini 2.5 Pro | $648,000 | $648,000 | $7.78M |

| GPT-4o | $3,888,000 | $2,592,000 | $31.10M |

Conclusion

-GPT-4o is significantly more expensive than Gemini 2.5 Profor the same usage scenario.

- The original statement is incorrect: GPT-4o is not cheaper than Gemini 2.5 Pro for this scale of usage.**References:**Google Gemini API Pricing

OpenAI GPT-4o API Pricing

Hidden Costs That Derail LLM Budgets

Large language model (LLM) deployments often face unexpected expenses that can undermine even the most carefully planned budgets. Below is a breakdown of the most common “sneaky” costs and their impact.

1. Cold Start Latency

-Lost Revenue:When serverless or containerized LLM apps experience cold starts, user experience suffers. If users abandon slow apps, the resulting lost revenue can range from$2,000 to $5,000 per monthfor mid-sized SaaS or consumer platforms123. -**Mitigation:**Keeping instances warm or increasing minimum instance counts can reduce cold starts, but this increases infrastructure costs45.

2. Failed Requests

-Compute Waste:Each failed LLM request still consumes compute resources, leading to$1,500/monthin wasted compute costs for high-traffic applications6. -Debugging Expenses:Persistent failures require engineering time for root cause analysis, often costing$3,000/monthor more in debugging labor78. -Support Overhead:Handling user complaints and support tickets related to failures can add another$500/monthin support costs.

3. Model Drift & Hallucination

-Monitoring and Retraining:Keeping LLMs accurate and up-to-date requires ongoing monitoring for drift and hallucinations, as well as periodic retraining. Annual costs for these activities typically range from$100,000 to $300,00091011. -**Monitoring:**Automated tools and human-in-the-loop evaluations are both needed to detect drift and hallucinations. -**Retraining:**Full or partial retraining of LLMs is compute-intensive and expensive, especially for large models.

4. Vendor Lock-In

-**Price Spikes:**Relying on a single cloud or API vendor exposes organizations to sudden price increases. Recent trends show cloud and AI service prices rising by 2–9% annually, with generative AI features sometimes triggering even steeper hikes12. -**Limited Flexibility:**Migrating away from a vendor can be costly and time-consuming, especially if proprietary APIs or data formats are involved.

5. Self-Hosting Challenges

-**Expertise Shortage:**Running LLMs in-house requires rare MLOps and infrastructure expertise. Recruiting and retaining such talent is difficult and expensive13. -**Operational Complexity:**Self-hosting demands robust infrastructure management, performance tuning, and constant monitoring to avoid downtime and inefficiency.

Summary Table: Hidden LLM Expenses

| Expense Type | Typical Cost Range | Notes |

|---|---|---|

| Cold Start Lost Revenue | $2,000–$5,000/month | User abandonment due to latency12 |

| Failed Requests (Compute) | $1,500/month | Wasted compute on failed calls6 |

| Debugging Failed Requests | $3,000/month | Engineering labor78 |

| Support for Failures | $500/month | User support tickets |

| Monitoring & Retraining | $100,000–$300,000/year | Model drift/hallucination91011 |

| Vendor Price Spikes | 2–9%+ annual increases | Generative AI features drive up costs12 |

| Self-Hosting Expertise | High, hard to quantify | Scarce MLOps talent needed13 |

- https://www.reddit.com/r/googlecloud/comments/1ita39x/cloud_run_how_to_mitigate_cold_starts_and_how/

- https://awsbites.com/144-lambda-billing-changes-cold-start-costs-and-log-savings-what-you-need-to-know/

- https://payproglobal.com/answers/what-is-cold-start/

- https://cloud.google.com/run/pricing

- https://cloud.google.com/run/pricing?authuser=4

- https://community.openai.com/t/does-i-get-charge-for-failed-or-pending-llm-api-requests/1269888

- https://www.reddit.com/r/ExperiencedDevs/comments/1jqp3s3/i_now_spend_most_of_my_time_debugging_and_fixing/

- https://www.keywordsai.co/blog/top-7-llm-debugging-challenges-and-solutions

- https://arxiv.org/html/2310.04216

- https://www.rohan-paul.com/p/ml-interview-q-series-handling-llm

- https://arize.com/blog/libre-eval-detect-llm-hallucinations/

- https://www.techtarget.com/searchcio/news/366548312/Cloud-costs-continue-to-rise-among-IT-commodities

- https://www.doubleword.ai/resources/the-challenges-of-self-hosting-large-language-models

- https://community.flutterflow.io/discussions/post/app-engine-is-expensive-nGWaZXV4KVmSY4P

- https://www.cloudyali.io/blogs/aws-lambda-cold-starts-now-cost-money-august-2025-billing-changes-explained

- https://cameronrwolfe.substack.com/p/llm-debugging

- https://github.com/Pythagora-io/gpt-pilot/issues/738

- https://www.index.dev/blog/llms-for-debugging-error-detection

- https://arxiv.org/pdf/2310.04216v1.pdf

- https://www.aimodels.fyi/papers/arxiv/cost-effective-hallucination-detection-llms

Fact Check: LLM Deployment Cost and Talent Claims (July 2025)

API-Based Model CostsClaimed:- GPT-4o: $1.6M/month for 259.2B tokens

- o4-mini: $97.2M/month

- Gemini 2.5 Pro: $1.4M/monthFact Check:-GPT-4o:Accurate. At $2.5 per million input tokens and $10 per million output tokens, a 50/50 split for 259.2B tokens results in$1,620,000/month1. -o4-mini:Overstated. The correct cost is$712,800/monthat $1.1 per million input and $4.4 per million output tokens1. -Gemini 2.5 Pro:Accurate. At $1.25 per million input and $10 per million output tokens, the cost is$1,458,000/month12.

| Model | Claimed Cost | Actual Cost |

|---|---|---|

| GPT-4o | $1.6M | $1.62M |

| o4-mini | $97.2M | $712.8K |

| Gemini 2.5 Pro | $1.4M | $1.46M |

| -**Summary:**The o4-mini cost is off by more than 100x; the other two are accurate. |

Self-Hosted Model CostsClaimed:- Llama 4 Scout: $94,394/month

- Maverick: $141,585/monthFact Check:-**Llama 4 Scout:**Accurate. This matches the cost of running 4 AWS p4d.24xlarge instances at $32.77/hour34. -**Maverick:**Plausible. This aligns with 6 p4d.24xlarge or similar high-end GPU instances34.LLMOps Engineer Salaries & Prevalence:-**Claim:**Only 1% of engineers specialize in LLMOps, with salaries from $100,000 to $268,000, and $100,000 training per engineer; $79,500/month for a team of three. -**Fact:**The median MLOps engineer salary in 2025 is about $160,000, with the top 10% earning up to $243,400. $268,000 is at the very high end but possible for elite talent. Training costs of $50,000–$150,000 per hire are reasonable for specialized onboarding5. $79,500/month for three is plausible for a top-tier team.

| Role/Cost | Claimed Range | Actual Range |

|---|---|---|

| LLMOps Salary | $100K–$268K/year | $132K–$243K/year |

| Training/Engineer | $100K | $50K–$150K |

| Team of 3 | $79.5K/month | $40K–$80K/month |

| -**Summary:**Salary and training claims are at the high end but within reason for rare, highly skilled talent. |

Hybrid Model CostsClaimed:- $38.89M/month with 80% caching and 70% routing to Scout or o4-miniFact Check:- This figure is not supported by current API pricing. Even without caching or routing, the total for 259.2B tokens is under $2M/month for the most expensive API models. Hybrid approaches can further reduce costs, not increase them12.

LLMOps Talent Market

-**Claim:**Only 1% of engineers specialize in LLMOps; demand up 300% since 2023; training takes 3–6 months at $50,000–$150,000 per hire. -**Fact:**LLMOps is a niche skill, and demand has surged, but the 1% figure is an estimate. Training costs and timelines are reasonable for this specialization5.

Additional Context

-API-based approacheseliminate engineering burden but introduce vendor lock-in and price volatility risks. -Self-hostingis cost-effective at scale but requires rare expertise and significant operational investment. -Hybrid solutionscan optimize for cost and control, but the cited savings and costs should be recalculated using current API rates.Key Takeaways:-API cost claims for GPT-4o and Gemini 2.5 Pro are accurate; o4-mini is vastly overstated.-Self-hosting and engineering cost estimates are plausible for top-tier teams.-Hybrid model cost claims are not supported by current pricing data.-LLMOps talent is scarce and expensive, but the salary figures cited are at the high end of the market.****References:12345

- https://docsbot.ai/tools/gpt-openai-api-pricing-calculator

- https://techcrunch.com/2025/04/04/gemini-2-5-pro-is-googles-most-expensive-ai-model-yet/

- https://llamaimodel.com/price/

- https://livechatai.com/llama-4-pricing-calculator

- https://aijobs.net/salaries/mlops-engineer-salary-in-2025/

- https://openai.com/api/pricing/

- https://www.cursor-ide.com/blog/gpt-4o-image-generation-cost

- https://www.nebuly.com/blog/openai-gpt-4-api-pricing

- https://api.chat/models/chatgpt-4o/price/

- https://onedollarvps.com/blogs/openai-o4-mini-pricing

- https://openai.com/index/gpt-4-1/

- https://aws.amazon.com/marketplace/pp/prodview-7kcpngbt6eprg

- https://www.llama.com

- https://multiable.com.my/2025/02/01/what-is-the-cost-of-hosting-running-a-self-owned-llama-in-aws

- https://www.linkedin.com/pulse/true-cost-hosting-your-own-llm-comprehensive-comparison-binoloop-l3rtc

- https://dev.to/yyarmoshyk/the-cost-of-self-hosted-llm-model-in-aws-4ijk

- https://community.openai.com/t/is-the-api-pricing-for-gpt-4-1-mini-and-o3-really-identical-now/1286911

- https://www.linkedin.com/pulse/google-expands-access-gemini-25-pro-ai-model-reveals-pricing-tiwari-e3nkc

- https://vitalflux.com/llm-hosting-strategy-options-cost-examples/

- https://www.glassdoor.co.in/Salaries/llm-engineer-salary-SRCH_KO0,12.htm

Apache Spark Training

Kafka Tutorial

Akka Consulting

Cassandra Training

AWS Cassandra Database Support

Kafka Support Pricing

Cassandra Database Support Pricing

Non-stop Cassandra

Watchdog

Advantages of using Cloudurable™

Cassandra Consulting

Cloudurable™| Guide to AWS Cassandra Deploy

Cloudurable™| AWS Cassandra Guidelines and Notes

Free guide to deploying Cassandra on AWS

Kafka Training

Kafka Consulting

DynamoDB Training

DynamoDB Consulting

Kinesis Training

Kinesis Consulting

Kafka Tutorial PDF

Kubernetes Security Training

Redis Consulting

Redis Training

ElasticSearch / ELK Consulting

ElasticSearch Training

InfluxDB/TICK Training TICK Consulting