June 28, 2025

The LLM Cost Trap—and the Playbook to Escape It

Every tech leader who watched ChatGPT explode onto the scene asked the same question: What will a production‑grade large language model really cost us? The short answer is “far more than the API bill,” yet the long answer delivers hope if you design with care.

Introduction

Public pricing pages show fractions of a cent per token. Those numbers feel reassuring until the first invoice lands. GPUs sit idle during cold starts. Engineers babysit fine‑tuning jobs. Network egress waits in the shadows. This article unpacks the full bill, shares a hypothetical fintech case study, and offers a practical playbook for cutting spend by up to ninety percent while raising performance.

The Four Pillars of Cost

A realistic budget treats LLMs as systems, not single line items. Add up these four pillars:

| Pillar | What it Covers |
|---|---|
| Infrastructure | GPU hours, VRAM, storage, and data transfer |
| Operations | Monitoring, autoscaling, uptime targets, 24/7 support |
| Development | Salaries, prompt engineering, fine‑tuning, security reviews |
| Opportunity | Churn from slow answers, lost agility, vendor lock‑in |

A single p4d.24xlarge instance runs about $22 an hour on demand (earlier list prices sat near $32). Around the clock, that is roughly $190K a year, or about $280K at the older rate, even before you add redundancy or storage. Experiment cycles and on‑call coverage often triple that figure.
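The annualized figure is plain arithmetic: hourly rate times 8,760 hours. A minimal Python sketch follows; `annual_gpu_cost` and its `overhead_multiplier` knob are hypothetical names, and a multiplier of 3.0 simply models the rough "triple that figure" rule of thumb rather than any measured overhead.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years


def annual_gpu_cost(hourly_rate: float, instances: int = 1,
                    overhead_multiplier: float = 1.0) -> float:
    """Cost of running `instances` nodes 24/7 for a year.

    overhead_multiplier is a hypothetical knob for experiments, redundancy,
    and on-call effort (3.0 models the "triple that figure" rule of thumb).
    """
    return hourly_rate * HOURS_PER_YEAR * instances * overhead_multiplier


if __name__ == "__main__":
    for rate in (21.97, 32.00):  # p4d.24xlarge hourly rates quoted in this article
        base = annual_gpu_cost(rate)
        loaded = annual_gpu_cost(rate, overhead_multiplier=3.0)
        print(f"${rate:.2f}/h: ${base:,.0f}/yr bare, ${loaded:,.0f}/yr with 3x overhead")
```

Swap in reserved or spot rates, or a realistic instance count, to see how quickly redundancy alone moves the total.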

A deeper analysis of current instance pricing reveals cost-effective alternatives to the premium p4d.24xlarge that still deliver impressive performance:

| Instance Type | GPUs | Host Memory | Hourly Cost (On-Demand) | vs. p4d.24xlarge |
|---|---|---|---|---|
| g6.48xlarge | 8× NVIDIA L4 | 768 GiB | $13.35 | 39% cheaper |
| g5.48xlarge | 8× NVIDIA A10G | 768 GiB | $16.29 | 26% cheaper |
| p4d.24xlarge | 8× NVIDIA A100 (40GB) | 1152 GiB | $21.97 | baseline |
| p4de.24xlarge | 8× NVIDIA A100 (80GB) | 1152 GiB | $27.45 | 25% more |

The g-series instances can slash inference and light training costs by up to 40%, while p4de instances provide double the GPU memory for those massive parameter counts—a textbook example of balancing cost versus capability in your infrastructure pillar.
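The percentage column is easy to reproduce, and to keep current as prices move, with a few lines of Python. This sketch hard-codes the table's on-demand rates; the function names are illustrative, not part of any AWS tooling.

```python
# On-demand hourly rates (USD) taken from the comparison table.
RATES = {
    "g6.48xlarge": 13.35,
    "g5.48xlarge": 16.29,
    "p4d.24xlarge": 21.97,
    "p4de.24xlarge": 27.45,
}


def pct_vs_baseline(rate: float, baseline: float) -> float:
    """Signed percent difference: negative means cheaper than the baseline."""
    return (rate - baseline) / baseline * 100


def compare(rates: dict, baseline_name: str = "p4d.24xlarge") -> dict:
    """Round each instance's percent difference against the baseline instance."""
    baseline = rates[baseline_name]
    return {name: round(pct_vs_baseline(rate, baseline)) for name, rate in rates.items()}


if __name__ == "__main__":
    for name, pct in compare(RATES).items():
        annual = RATES[name] * 24 * 365
        print(f"{name:15s} {pct:+4d}%  ~${annual:,.0f}/yr per instance")
```

Price alone is not the whole story: an L4 or A10G carries 24 GB of GPU memory, so the cheaper rows only win when the model fits.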

Hidden Costs That Wreck Budgets

Cold starts add 30–120 seconds of latency. Teams keep “warm” GPUs online to dodge the delay, which means paying for silence at three in the morning.

Failed or timed‑out requests can still bill input tokens, depending on the provider and where the failure occurs. A storm of retries can melt a budget in minutes.
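One common guard is capped exponential backoff with jitter, so a failing dependency produces a bounded number of billable attempts instead of a retry storm. The sketch below assumes a hypothetical `send` callable that makes one LLM request and raises on failure; it is not any provider SDK's built-in retry logic.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryBudgetExceeded(RuntimeError):
    """Raised when every allowed attempt has failed."""


def call_with_backoff(send: Callable[[], T], max_attempts: int = 4,
                      base_delay: float = 0.5, max_delay: float = 8.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `send` (hypothetical: one LLM request) with capped exponential backoff.

    Each attempt may be billed for its input tokens, so max_attempts bounds the
    worst-case spend at max_attempts times the cost of a single request.
    """
    last_error = None
    for attempt in range(max_attempts):
        try:
            return send()
        except Exception as err:  # real code should retry only 429s, 5xx, and timeouts
            last_error = err
            if attempt == max_attempts - 1:
                break
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # "full jitter" spreads retries out
    raise RetryBudgetExceeded(f"gave up after {max_attempts} attempts") from last_error
```

Passing `sleep=lambda s: None` lets you test the retry path without waiting.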

Model drift and hallucination force constant monitoring, fact checks, and retraining. Annual upkeep can match the first build.

Vendor lock‑in removes pricing power. A sudden API increase leaves little room to escape.

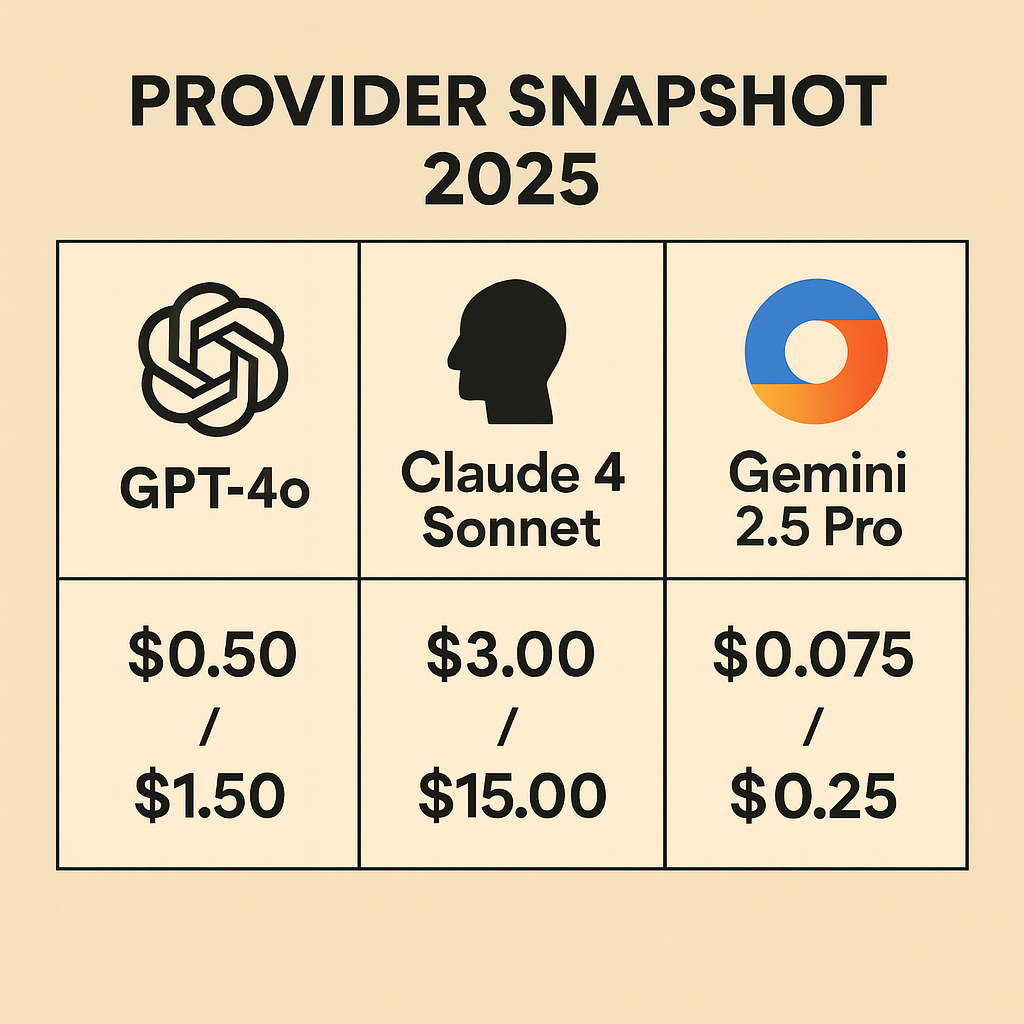

Provider Snapshot: Flagships, Specialists, and Open‑Source Contenders

| Tier | Best Fit | Approx. Cost* | Example Models |
|---|---|---|---|
| Flagship API (multimodal) | Burst traffic, rich media apps | ≈$1.25–$3 / M input, $10–$15 / M output | GPT‑4o, Claude Sonnet 4, Gemini 2.5 Pro |
| Specialist API (logic & code) | High‑volume reasoning or coding | ≈$1.10–$2 / M input, $4.40–$8 / M output | OpenAI o3, o4‑mini |
| Self‑Hosted (Large Open Source) | Steady high volume, full control | ≈$2 / M (≈$0.000002 / token) | Llama 4 Maverick, Qwen 110B |
| Self‑Hosted (Efficient Open Source) | Cost‑sensitive batch work | ≈$1 / M (≈$0.000001 / token) | Llama 4 Scout, Gemma 2B |
| Hybrid Router | Enterprises seeking balance | Blended | Routes tiers above based on workload |

*Approximate cost per million tokens (M), based on mid‑2025 public pricing; self‑hosted figures are rough estimates that depend heavily on GPU utilization.
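Turning per-million prices into a monthly bill is a one-line formula: tokens divided by one million, times the price, summed over input and output. The sketch below uses illustrative prices and a hypothetical workload of 2 billion input and 500 million output tokens a month. It treats self-hosted cost as a flat per-token rate, which only holds at steady utilization, since idle GPUs still bill by the hour.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_m: float   # USD per million input tokens
    output_per_m: float  # USD per million output tokens


def monthly_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Token bill for one month: (tokens / 1M) * price, for input and output."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000


if __name__ == "__main__":
    workload = (2_000_000_000, 500_000_000)  # hypothetical monthly input/output tokens
    tiers = {
        "flagship API (illustrative $3 / $15)": Pricing(3.00, 15.00),
        "self-hosted (illustrative $2 flat)": Pricing(2.00, 2.00),
    }
    for name, price in tiers.items():
        print(f"{name}: ${monthly_cost(price, *workload):,.0f}/month")
```

Output tokens usually cost several times more than input tokens on API tiers, so trimming verbose answers often saves more than trimming prompts.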

Whatever mix of tiers you choose, a router plus a cache can often cut flagship calls by forty percent or more with little or no quality loss.
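The arithmetic behind that claim is a funnel: the cache absorbs a share of requests, and the router sends part of the remainder to a smaller model. A minimal sketch, where the hit rate, routing share, and per-request costs are hypothetical inputs rather than measured figures:

```python
def flagship_share(cache_hit_rate: float, small_share: float) -> float:
    """Fraction of all requests that still reach the flagship model.

    small_share is the fraction of cache misses the router sends to the small model.
    """
    return (1.0 - cache_hit_rate) * (1.0 - small_share)


def blended_cost(cache_hit_rate: float, small_share: float,
                 cost_small: float, cost_flagship: float,
                 cost_cache: float = 0.0) -> float:
    """Expected cost per request across cache, small model, and flagship.

    cost_cache defaults to zero; set it if embedding or lookup costs matter.
    """
    miss = 1.0 - cache_hit_rate
    return (cache_hit_rate * cost_cache
            + miss * small_share * cost_small
            + miss * (1.0 - small_share) * cost_flagship)


if __name__ == "__main__":
    # Hypothetical: 20% cache hits, router keeps 25% of misses on the small model.
    share = flagship_share(0.20, 0.25)
    print(f"flagship calls cut by {1 - share:.0%}")  # 0.8 * 0.75 = 0.6 still reach it
    print(f"blended cost/request: ${blended_cost(0.20, 0.25, 0.001, 0.01):.4f}")
```

Because the two stages multiply, modest gains at each stage compound into a large cut in flagship traffic.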

How FinSecure Cut Costs by 83 Percent (Hypothetical)

A fintech serving one hundred million users switched from a pure GPT‑4 API to a hybrid stack:

- Router Model: Fine‑tuned Llama 4 Scout classifies requests.

- Main Model: Fine‑tuned Llama 4 Maverick handles complex tasks.

- Semantic Cache: Redis answers eighty percent of support questions instantly.

- Batching & Quantization: GPU throughput jumped from 200 to 1,500 tokens per second.
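A semantic cache returns a stored answer when a new question sits close enough in embedding space to one it has already answered. The sketch below is an in-memory stand-in: `embed` is a hypothetical embedding function, the 0.9 cosine threshold is an assumption to tune, and a production version would keep the vectors in Redis or another vector index rather than scanning a Python list.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class SemanticCache:
    """Linear-scan semantic cache; a stand-in for a real vector store."""

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.9):
        self.embed = embed          # hypothetical embedding function
        self.threshold = threshold  # minimum similarity that counts as a hit
        self.entries: List[Tuple[Vector, str]] = []

    def get(self, query: str) -> Optional[str]:
        """Return the best stored answer if it clears the threshold, else None."""
        q = self.embed(query)
        best_score, best_answer = 0.0, None
        for vec, answer in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def put(self, query: str, answer: str) -> None:
        self.entries.append((self.embed(query), answer))
```

The threshold is the key design decision: set it too low and users get answers to questions they did not ask; set it too high and the hit rate collapses.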

Results

| Metric | Before | After | Improvement |
|---|---|---|---|
| Monthly Run Cost | $30K | $5K | 83 % |
| API Calls to GPT‑4 | 1M/day | <100K/day | >90 % |
| KYC Processing Time | 2 h | 10 min | 92 % |
| Time to ROI | n/a | 4 months | n/a |

Five Moves to Shrink Your Bill

- Route First, Escalate Second – Send easy work to a small model. Save the giant for the few queries that need depth.

- Cache Everything You Can – Exact match and semantic caches slash repeat traffic.

- Batch Offline Jobs – Group documents or messages, then run one big inference pass.

- Quantize Wisely – Drop to INT8 or lower when quality permits. Going from FP16 to INT8 halves weight memory.

- Re‑evaluate Quarterly – Model price‑performance shifts every few months. Stay nimble.
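Three of these moves fit in a few lines. The sketch below puts an exact-match cache in front of a small model and escalates to a large model only when the small one reports low confidence; `small` and `large` are hypothetical callables returning an answer and a confidence score, and the 0.7 threshold is an assumption. The `weight_memory_gb` helper shows the quantization arithmetic for weights alone, using a hypothetical 70B-parameter model.

```python
from typing import Callable, Dict, Tuple

# Hypothetical model interface: prompt -> (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


class RoutedClient:
    """Exact-match cache, then small model, escalating to a large model when unsure."""

    def __init__(self, small: Model, large: Model, min_confidence: float = 0.7):
        self.small = small
        self.large = large
        self.min_confidence = min_confidence  # assumed threshold; tune on real traffic
        self.cache: Dict[str, str] = {}
        self.stats = {"cache": 0, "small": 0, "large": 0}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        return " ".join(prompt.lower().split())

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self.cache:
            self.stats["cache"] += 1
            return self.cache[key]
        answer, confidence = self.small(prompt)
        if confidence >= self.min_confidence:
            self.stats["small"] += 1
        else:
            answer, _ = self.large(prompt)  # escalate only the hard queries
            self.stats["large"] += 1
        self.cache[key] = answer
        return answer


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only (ignores KV cache and activations)."""
    return params_billions * bits_per_weight / 8  # billions of params x bytes per param


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"70B params at {bits}-bit: ~{weight_memory_gb(70, bits):.0f} GB")
```

Cache only answers that are safe to share across users; personalized or account-specific responses need the user or tenant in the cache key.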

Turn Tokens into Business Wins

Cost per token is only half the math. Tie each query to business outcomes, and judge total ROI, including innovation and time to market, rather than token spend alone:

- Efficiency Gains – Shorter handle times, faster paperwork.

- Revenue Lift – Higher conversion, bigger basket size.

- Direct Savings – Fewer retries, smaller support team.

- Experience Wins – Better CSAT, lower churn.

ROI = (Annual Value – Annual Cost) ÷ Annual Cost. FinSecure hit breakeven in under five months because annual value dwarfed the new $60K run rate.
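The formula translates directly into code. The inputs below are invented for illustration (apart from the $5K monthly run cost, i.e. the $60K annual run rate); `payback_months` is a hypothetical helper that assumes a one-time build cost recovered from steady monthly net value.

```python
import math


def roi(annual_value: float, annual_cost: float) -> float:
    """ROI = (annual value - annual cost) / annual cost, as a fraction."""
    return (annual_value - annual_cost) / annual_cost


def payback_months(upfront_cost: float, monthly_value: float,
                   monthly_run_cost: float) -> float:
    """Months until cumulative net monthly value covers a one-time build cost."""
    net = monthly_value - monthly_run_cost
    if net <= 0:
        return math.inf  # never pays back
    return upfront_cost / net


if __name__ == "__main__":
    print(f"ROI: {roi(300_000, 60_000):.0%}")  # hypothetical $300K annual value
    print(f"Payback: {payback_months(80_000, 25_000, 5_000):.1f} months")  # hypothetical
```

Payback period complements ROI: a project with high ROI can still strain cash flow if the upfront build takes years to recover.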

People, Pace, and the Price of Agility


Adopting open‑source models in‑house is not just a hardware decision; it is a staffing commitment. Fine‑tuning and serving large models demand scarce skill sets—ML engineers, MLOps specialists, and data scientists who command premium salaries. Every dollar spent hiring and retaining that talent is a dollar not spent building products.

When a managed LLM costs $5 to draft a legal brief that normally bills $1,000, the arithmetic changes. Agility matters.

The ability to swap to a newer, more capable model—sometimes overnight—lets you capture upside the moment it appears. That strategic flexibility can outweigh a lower cost‑per‑token on paper.

Opportunity cost, talent scarcity, and the pace of model innovation form a three‑way force that often tips the balance toward LLM‑as‑a‑service, especially for teams without deep AI infrastructure roots. Then again, if usage grows by leaps and bounds, routing some use cases to an in‑house open‑source model can save a great deal, potentially millions a year at large scale.
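Where that tipping point sits can be estimated with a break-even calculation: self-hosting carries a large fixed monthly cost (GPUs, MLOps staff) but a low marginal cost per token, while an API is almost purely per-token. A sketch under those simplifying assumptions; every number in the example is hypothetical.

```python
import math


def breakeven_tokens_per_month(fixed_monthly_self_host: float,
                               api_price_per_m: float,
                               self_host_marginal_per_m: float) -> float:
    """Monthly token volume above which self-hosting beats the API.

    fixed_monthly_self_host: GPUs, staff, and ops you pay regardless of traffic.
    Prices are USD per million tokens (blended input/output).
    """
    saving_per_m = api_price_per_m - self_host_marginal_per_m
    if saving_per_m <= 0:
        return math.inf  # the API is never more expensive per token
    return fixed_monthly_self_host / saving_per_m * 1_000_000


if __name__ == "__main__":
    # Hypothetical: $50K/month fixed, $5.50/M via API, $0.50/M marginal self-hosted.
    tokens = breakeven_tokens_per_month(50_000, 5.50, 0.50)
    print(f"break-even: {tokens / 1e9:.1f}B tokens/month")
```

Below the break-even volume the fixed cost dominates and the managed service wins; above it, every additional token widens the savings.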

Escape the Cost Trap

LLMs unlock new products and faster service, yet they punish sloppy design. Treat them as living systems. Budget beyond the token. Start small, route smart, and tune often. When you do, a “bill shock” turns into an engine for long‑term growth.

References

- OpenAI GPT‑4o pricing, OpenAI Pricing Page (openai.com)

- Anthropic Claude Sonnet 4 pricing, Anthropic News Release (anthropic.com)

- Google Gemini 2.5 Pro pricing, Gemini API Pricing Docs (ai.google.dev)

- AWS p4d.24xlarge hourly rate, Vantage EC2 Instance Comparison (instances.vantage.sh)

About the Author

Rick Hightower brings extensive enterprise experience as a former executive and distinguished engineer at a Fortune 100 company, where he specialized in Machine Learning and AI solutions to deliver intelligent customer experiences. His expertise spans both theoretical foundations and practical applications of AI technologies.

As a TensorFlow-certified professional and graduate of Stanford University’s comprehensive Machine Learning Specialization, Rick combines academic rigor with real-world implementation experience. His training includes mastery of supervised learning techniques, neural networks, and advanced AI concepts, which he has successfully applied to enterprise-scale solutions.

With a deep understanding of both business and technical aspects of AI implementation, Rick bridges the gap between theoretical machine learning concepts and practical business applications, helping organizations leverage AI to create tangible value.

Follow Rick on LinkedIn or Medium for more enterprise AI and security insights.
