By Cloudurable | June 28, 2025

```mermaid
mindmap
  root((LLM Cost Reality))
    Four Cost Pillars
      Infrastructure
        GPU Hours
        VRAM & Storage
        Network Transfer
      Operations
        Monitoring
        24/7 Support
        Autoscaling
      Development
        Engineering Salaries
        Fine-tuning
        Security Reviews
      Opportunity
        Slow Response Times
        Vendor Lock-in
        Lost Agility
    Hidden Expenses
      Cold Start Delays
      Failed Request Billing
      Model Drift
      Hallucination Monitoring
    Escape Strategies
      Smart Routing
      Semantic Caching
      Model Quantization
      Batch Processing
      Hybrid Architecture
```

Every tech leader who watched ChatGPT explode onto the scene asked the same question: What will a production-grade large language model really cost us? The short answer hits hard—“far more than the API bill.” Yet the long answer delivers hope if you design with care.

Public pricing pages show fractions of a cent per token. Those numbers feel reassuring until the first invoice lands. GPUs sit idle during cold starts. Engineers babysit fine-tuning jobs. Network egress waits in the shadows. This comprehensive guide unpacks the full bill, shares a fintech case study that achieved 83% cost reduction, and offers a proven playbook for trimming up to ninety percent of spend while raising performance.

Ready to escape the LLM cost trap? Let’s dive into the reality of AI economics and the strategies that turn budget nightmares into competitive advantages.

The Four Pillars of Cost: Understanding the Full Picture

A realistic budget treats LLMs as systems, not single line items. Understanding these four pillars transforms your approach from reactive firefighting to proactive optimization:

| Pillar | What it Covers | Hidden Multipliers |
|---|---|---|
| Infrastructure | GPU hours, VRAM, storage, data transfer | Redundancy, cold starts, idle time |
| Operations | Monitoring, autoscaling, uptime targets, 24/7 support | Alert fatigue, incident response |
| Development | Salaries, prompt engineering, fine-tuning, security reviews | Experimentation cycles, technical debt |
| Opportunity | Churn from slow answers, lost agility, vendor lock-in | Market speed, competitive disadvantage |

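Consider the stark reality: at about $32 an hour, a single p4d.24xlarge instance running around the clock costs roughly $280K a year ($32 × 8,760 hours) before adding redundancy or storage. Even at the $21.97 hourly rate listed in the comparison below, the same instance costs about $192K a year. Experiment cycles and on-call coverage often triple the total.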
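The annual figure is plain multiplication, and it helps to keep it in a small helper so you can plug in your own rates. This is a minimal sketch: the function name and the idea of adding one standby replica are assumptions made for illustration, and it assumes the instance runs every hour of the year.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, assuming the instance never shuts down


def annual_instance_cost(hourly_rate: float, instances: int = 1) -> float:
    """Return the yearly cost of running `instances` machines around the clock."""
    return hourly_rate * HOURS_PER_YEAR * instances


single = annual_instance_cost(32.0)        # one instance at about $32/hour
redundant = annual_instance_cost(32.0, 2)  # hypothetical: add one standby replica

print(f"Single instance: ${single:,.0f} per year")
print(f"With a standby:  ${redundant:,.0f} per year")
```

Swapping in a different hourly rate or instance count shows how quickly redundancy alone moves the budget.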

Smart Infrastructure Choices Save Fortunes

A deeper analysis of current instance pricing reveals cost-effective alternatives that still deliver impressive performance:

```mermaid
flowchart LR
    subgraph "Instance Cost Comparison"
        G6[g6.48xlarge<br/>$13.35/hr<br/>39% cheaper]
        G5[g5.48xlarge<br/>$16.29/hr<br/>26% cheaper]
        P4D[p4d.24xlarge<br/>$21.97/hr<br/>baseline]
        P4DE[p4de.24xlarge<br/>$27.45/hr<br/>25% more]
        G6 -->|"Light Training"| Savings1[40% Cost Reduction]
        G5 -->|"Inference"| Savings2[26% Cost Reduction]
        P4D -->|"Standard"| Standard[Baseline Cost]
        P4DE -->|"Large Models"| Premium[2x GPU Memory]
    end
    style G6 fill:#c8e6c9,stroke:#4caf50
    style G5 fill:#dcedc8,stroke:#689f38
    style P4D fill:#fff9c4,stroke:#f9a825
    style P4DE fill:#ffccbc,stroke:#ff5722
```

| Instance Type | GPUs | System Memory | Hourly Cost | Best Use Case |
|---|---|---|---|---|
| g6.48xlarge | 8× NVIDIA L4 | 768 GiB | $13.35 | Inference, light training |
| g5.48xlarge | 8× NVIDIA A10G | 768 GiB | $16.29 | General purpose AI |
| p4d.24xlarge | 8× NVIDIA A100 (40GB) | 1152 GiB | $21.97 | Heavy training |
| p4de.24xlarge | 8× NVIDIA A100 (80GB) | 1152 GiB | $27.45 | Massive models |

The g-series instances slash inference and light training costs by up to 40%, while p4de instances provide double the GPU memory for massive parameter counts—a textbook example of balancing cost versus capability.
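The percentages in the comparison follow directly from the hourly rates. Here is a short sketch that recomputes them against the p4d.24xlarge baseline; the rates are copied from the table above, and the helper name is an assumption for illustration.

```python
# Hourly rates copied from the table above (USD per hour).
HOURLY_RATES = {
    "g6.48xlarge": 13.35,
    "g5.48xlarge": 16.29,
    "p4d.24xlarge": 21.97,
    "p4de.24xlarge": 27.45,
}


def percent_vs_baseline(rate: float, baseline: float) -> float:
    """Negative means cheaper than the baseline, positive means more expensive."""
    return (rate - baseline) / baseline * 100


baseline = HOURLY_RATES["p4d.24xlarge"]
for name, rate in HOURLY_RATES.items():
    delta = percent_vs_baseline(rate, baseline)
    print(f"{name:>14}: ${rate:>5.2f}/hr  {delta:+.0f}% vs p4d.24xlarge")
```

Rerunning this whenever pricing changes keeps the comparison honest, since instance prices shift several times a year.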

Hidden Costs That Wreck Budgets

The Cold Start Tax

Cold starts add 30–120 seconds of latency. Teams keep “warm” GPUs online to dodge the delay, paying for silence at three in the morning. This “always-on tax” can double infrastructure costs.
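To see how the always-on tax can double the bill, compare a GPU that runs all day with one that runs only while traffic arrives. This is a rough sketch: the 12 busy hours per day is a hypothetical workload, the hourly rate is the p4d.24xlarge figure from the instance table, and the model ignores the cold-start latency you would accept in exchange.

```python
def monthly_gpu_cost(hourly_rate: float, hours_per_day: float, days: int = 30) -> float:
    """Cost of keeping one GPU instance running `hours_per_day` for `days` days."""
    return hourly_rate * hours_per_day * days


RATE = 21.97       # p4d.24xlarge hourly rate from the instance table
BUSY_HOURS = 12.0  # hypothetical: hours per day with real traffic

always_on = monthly_gpu_cost(RATE, 24)
busy_only = monthly_gpu_cost(RATE, BUSY_HOURS)
idle_tax = always_on - busy_only

print(f"Always on:       ${always_on:,.2f}")
print(f"Busy hours only: ${busy_only:,.2f}")
print(f"Idle tax:        ${idle_tax:,.2f} ({always_on / busy_only:.1f}x the busy-only cost)")
```

With traffic for only half the day, half of the monthly spend buys nothing but readiness.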

The Failure Cascade

Failed requests can still be billed for input tokens. A storm of retries during an outage can melt a budget in minutes. One fintech startup burned $50K in three hours during a retry storm, more than its monthly budget.
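One common guard against a retry storm is to combine exponential backoff with a hard spend cap per request. The sketch below is one way to do that, not a prescription: `call_with_backoff`, `RetryBudgetExceeded`, and the cost estimate per attempt are hypothetical names and parameters introduced for illustration, and a real client would catch only retryable errors.

```python
import random
import time


class RetryBudgetExceeded(Exception):
    """Raised when another attempt would exceed the allowed spend."""


def call_with_backoff(call, est_cost_per_attempt, max_attempts=4,
                      max_spend=0.05, base_delay=0.5, sleep=time.sleep):
    """Call `call()` with jittered exponential backoff and a spend cap.

    `est_cost_per_attempt` is the caller's own estimate (in USD) of what one
    attempt costs, since a failed request may still be billed.
    """
    spent = 0.0
    for attempt in range(max_attempts):
        if spent + est_cost_per_attempt > max_spend:
            raise RetryBudgetExceeded(f"spend cap reached after {attempt} attempts")
        spent += est_cost_per_attempt
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: wait a random time up to base_delay * 2^attempt
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise RetryBudgetExceeded("no attempts allowed")
```

The spend cap matters more than the backoff itself: it turns an open-ended failure into a bounded, predictable cost.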

The Drift Dilemma

Model drift and hallucination force constant monitoring, fact-checking, and retraining. Annual upkeep often matches the initial build cost, yet most teams budget only for launch.

The Lock-in Leverage

Vendor lock-in removes pricing power. When your chosen provider announces a 3x price increase, migration costs often exceed paying the premium—a painful lesson many learned in 2024.

Provider Landscape: Choose Your Fighter Wisely

```mermaid
flowchart TB
    subgraph "LLM Provider Tiers"
        F[Flagship APIs<br/>$5-15/M tokens]
        S[Specialist APIs<br/>$0.15/M tokens]
        L[Large Open Source<br/>~$0.000002/token]
        E[Efficient Open Source<br/>~$0.000001/token]
        H[Hybrid Router<br/>Blended costs]
        F -->|"Best for"| F1[Burst traffic<br/>Rich media<br/>Latest capabilities]
        S -->|"Best for"| S1[High-volume logic<br/>Code generation<br/>Reasoning tasks]
        L -->|"Best for"| L1[Steady volume<br/>Full control<br/>Data privacy]
        E -->|"Best for"| E1[Batch processing<br/>Cost-sensitive<br/>Simple tasks]
        H -->|"Best for"| H1[Enterprise balance<br/>Cost optimization<br/>Flexibility]
    end
    style F fill:#e3f2fd,stroke:#2196f3
    style S fill:#f3e5f5,stroke:#9c27b0
    style L fill:#e8f5e9,stroke:#4caf50
    style E fill:#fff3e0,stroke:#ff9800
    style H fill:#fce4ec,stroke:#e91e63
```

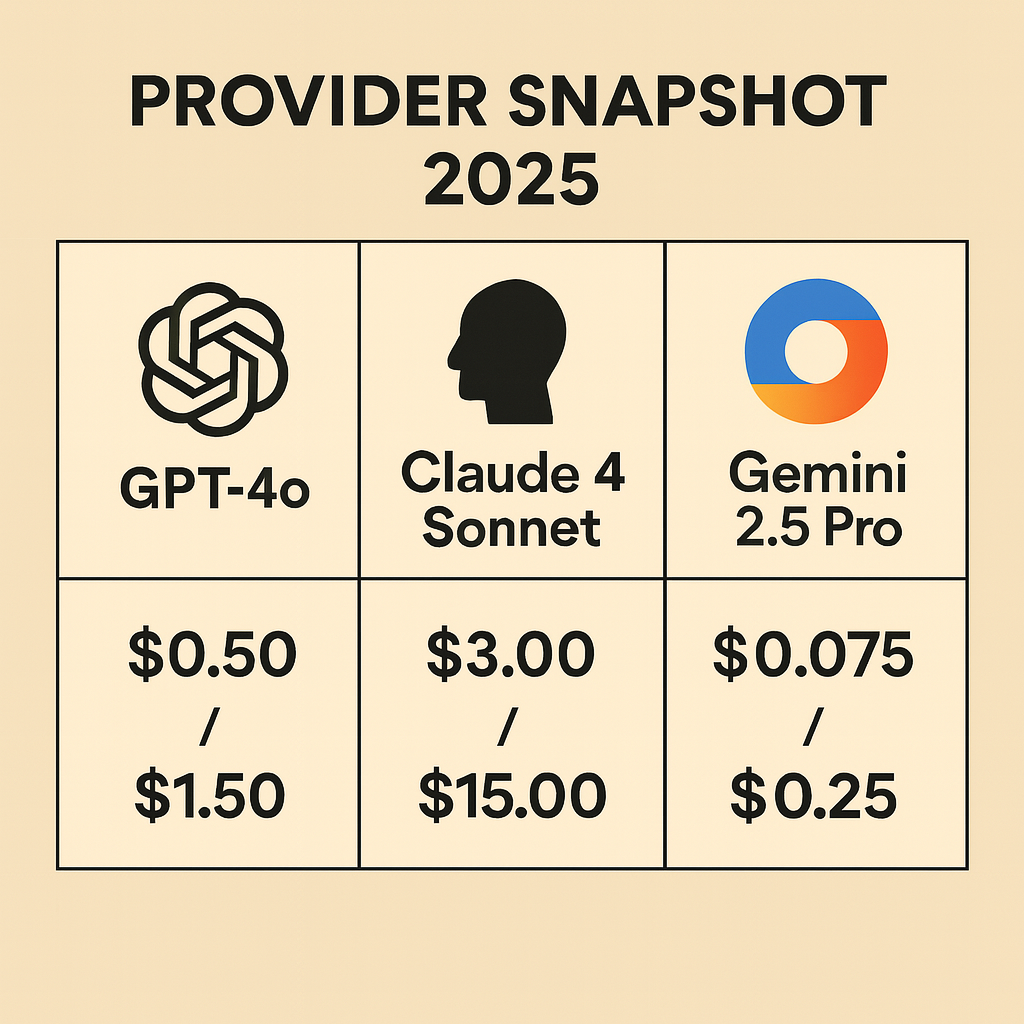

| Tier | Best Fit | Cost Range* | Example Models |
|---|---|---|---|
| Flagship API | Burst traffic, rich media | $5-15/M tokens | GPT-4o, Claude 4, Gemini 2.5 |
| Specialist API | Logic, code, reasoning | $0.15/M tokens | OpenAI o3, o4-mini |
| Large Open Source | Steady volume, control | ~$2/M tokens (~$0.000002/token) | Llama 4 70B, Qwen 110B |
| Efficient Open Source | Batch work, simple tasks | ~$1/M tokens (~$0.000001/token) | Llama 4 13B, Gemma 2B |
| Hybrid Router | Enterprise balance | Blended | Routes based on task |

*Costs per million tokens based on mid-2025 public pricing

A well-designed router plus cache can cut flagship API calls by 40% or more, often with zero quality loss.
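A quick way to reason about a router plus cache is to compute the blended price per million tokens for a given traffic mix. In this sketch the traffic shares are hypothetical; the prices come from the tier table ($10 is the midpoint of the flagship range, and ~$0.000001/token works out to $1 per million), and the function name is an assumption for illustration.

```python
def blended_cost_per_million(mix: dict[str, tuple[float, float]]) -> float:
    """`mix` maps a tier name to (traffic share, USD per million tokens)."""
    total_share = sum(share for share, _ in mix.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1.0")
    return sum(share * price for share, price in mix.values())


# Hypothetical traffic splits; prices are taken from the tier table.
all_flagship = {"flagship": (1.0, 10.0)}
routed = {
    "cache hit": (0.30, 0.0),   # served from cache, no model call
    "efficient": (0.30, 1.0),   # ~$0.000001/token, i.e. $1 per million
    "flagship": (0.40, 10.0),   # midpoint of the $5-15 range
}

before = blended_cost_per_million(all_flagship)
after = blended_cost_per_million(routed)
print(f"Before: ${before:.2f}/M tokens, after: ${after:.2f}/M tokens")
print(f"Savings: {1 - after / before:.0%}")
```

The exact split will differ for every workload, so treat the shares as knobs to measure rather than targets.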

Case Study: How FinSecure Cut Costs by 83%

A fintech serving one hundred million users transformed their AI economics through strategic architecture:

The Challenge

- 1M daily GPT-4 API calls

- $30K monthly costs growing exponentially

- 2-hour KYC processing times

- Vendor lock-in concerns

The Solution Architecture

```mermaid
flowchart LR
    subgraph "FinSecure Hybrid Architecture"
        U[User Request] --> R[Router<br/>Llama 4 Scout 13B]
        R -->|Simple| C[Semantic Cache<br/>Redis]
        R -->|Complex| M[Main Model<br/>Llama 4 70B]
        R -->|Flagship| A[GPT-4 API<br/><100K/day]
        C --> Response
        M --> Response
        A --> Response
    end
    style R fill:#e8f5e9,stroke:#4caf50
    style C fill:#fff9c4,stroke:#f9a825
    style M fill:#e3f2fd,stroke:#2196f3
    style A fill:#ffebee,stroke:#f44336
```

Key Components:

- Router Model: Fine-tuned Llama 4 Scout 13B classifies request complexity

- Main Model: Fine-tuned Llama 4 Maverick 70B handles 80% of complex tasks

- Semantic Cache: Redis answers 60% of support questions instantly

- Batching & Quantization: GPU throughput jumped from 200 to 1,500 tokens/second

The Results

| Metric | Before | After | Improvement |
|---|---|---|---|
| Monthly Run Cost | $30K | $5K | 83% reduction |
| API Calls to GPT-4 | 1M/day | <100K/day | 90% reduction |
| KYC Processing Time | 2 hours | 10 minutes | 92% faster |
| Time to ROI | — | 4 months | Rapid payback |

Five Battle-Tested Moves to Shrink Your Bill

1. Route First, Escalate Second

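```python
class SmartRouter:
    """Send each query to the cheapest model tier that can handle it."""

    def __init__(self, small_model, medium_model, flagship_api, assess_complexity):
        self.small_model = small_model
        self.medium_model = medium_model
        self.flagship_api = flagship_api
        # Callable that returns a complexity score between 0.0 and 1.0
        self.assess_complexity = assess_complexity

    def route_request(self, query):
        complexity = self.assess_complexity(query)
        if complexity < 0.3:
            return self.small_model.generate(query)  # easy: cheapest tier
        elif complexity < 0.7:
            return self.medium_model.generate(query)  # moderate: mid tier
        else:
            return self.flagship_api.generate(query)  # hard: flagship API
```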

Send easy work to small models. Reserve giants for queries needing depth. When most traffic is simple enough for the smaller tiers, this pattern can cut costs by 60-80%.
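The router leaves open how complexity gets scored. The sketch below fills that gap with a deliberately crude heuristic so the routing logic can be exercised end to end. Everything here is a stand-in: `StubModel`, the keyword list, and the scoring weights are assumptions for illustration, and a production router would more likely use a small classifier model.

```python
from dataclasses import dataclass


@dataclass
class StubModel:
    """Stand-in for a real model client; it only echoes which tier answered."""
    name: str

    def generate(self, query: str) -> str:
        return f"[{self.name}] {query}"


def assess_complexity(query: str) -> float:
    """Toy heuristic: longer queries and reasoning keywords score higher (0.0 to 1.0)."""
    keywords = ("why", "compare", "analyze", "explain", "prove")
    length_score = min(len(query.split()) / 50, 1.0)
    keyword_score = 0.5 if any(k in query.lower() for k in keywords) else 0.0
    return min(length_score + keyword_score, 1.0)


def route(query: str, small: StubModel, medium: StubModel, flagship: StubModel) -> str:
    """Apply the same 0.3 / 0.7 thresholds as the router above."""
    score = assess_complexity(query)
    if score < 0.3:
        return small.generate(query)
    elif score < 0.7:
        return medium.generate(query)
    return flagship.generate(query)


small, medium, flagship = StubModel("small"), StubModel("medium"), StubModel("flagship")
print(route("What is my balance?", small, medium, flagship))
print(route("Explain why this transfer was flagged", small, medium, flagship))
```

Logging the score alongside each routed request makes it easy to tune the thresholds against real quality data later.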

2. Cache Everything You Can

Implement multi-layer caching:

- Exact Match Cache: Instant responses for repeated queries

- Semantic Cache: Find similar previous answers

- Result Cache: Store expensive computations
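The first two layers can be sketched in a few lines. This example is a toy under stated assumptions: the bag-of-words `embed` function stands in for a real embedding model, the 0.8 similarity threshold is arbitrary, and `LayeredCache` is a hypothetical class name. A production setup would typically back this with a store such as Redis and a vector index.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts. A real system would use an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LayeredCache:
    def __init__(self, threshold: float = 0.8):
        self.exact = {}      # layer 1: query text -> answer
        self.semantic = []   # layer 2: (embedding, answer) pairs
        self.threshold = threshold

    def get(self, query: str):
        if query in self.exact:  # cheapest check first
            return self.exact[query]
        q = embed(query)
        best = max(self.semantic, key=lambda item: cosine(q, item[0]), default=None)
        if best is not None and cosine(q, best[0]) >= self.threshold:
            return best[1]
        return None  # miss: the caller falls through to a model

    def put(self, query: str, answer: str) -> None:
        self.exact[query] = answer
        self.semantic.append((embed(query), answer))


cache = LayeredCache()
cache.put("how do i reset my password", "Use the reset link on the login page.")
print(cache.get("how do i reset my password please"))  # semantic hit
print(cache.get("what are your opening hours"))         # miss
```

The threshold is the main tuning knob: set it too low and users get answers to questions they did not ask.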

3. Batch Offline Jobs

Group documents or messages, then run one big inference pass. Batching can improve throughput by 5-10x while reducing per-token costs, because the GPU processes many inputs in each forward pass instead of one.
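A minimal sketch of the grouping step, assuming a hypothetical `infer_batch` callable that accepts a list of documents and returns one result per document. The batch size of 32 is an arbitrary starting point, not a recommendation.

```python
from typing import Callable, Iterable, Iterator


def batched(items: Iterable[str], batch_size: int) -> Iterator[list[str]]:
    """Yield consecutive lists of at most `batch_size` items."""
    batch: list[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # final partial batch
        yield batch


def run_offline_job(docs: Iterable[str],
                    infer_batch: Callable[[list[str]], list[str]],
                    batch_size: int = 32) -> list[str]:
    """Run one inference call per batch instead of one call per document."""
    results: list[str] = []
    for batch in batched(docs, batch_size):
        results.extend(infer_batch(batch))
    return results
```

For 70 documents and a batch size of 32, this makes three inference calls rather than seventy.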

4. Quantize Wisely

Drop to INT8 or INT4 when quality permits:

- Memory usage falls by 50-75% relative to FP16 weights

- Inference speed increases 2-4x

- Quality loss often under 1%
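The memory savings follow from bytes per parameter: FP16 stores each weight in 2 bytes, INT8 in 1, and INT4 in half a byte. A back-of-the-envelope sketch for a 70B-parameter model, counting weights only; the KV cache, activations, and quantization metadata add to these numbers, and the function name is an assumption for illustration.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


fp16_gb = weight_memory_gb(70, "fp16")
for precision in BYTES_PER_PARAM:
    gb = weight_memory_gb(70, precision)
    print(f"70B @ {precision}: {gb:.0f} GB ({1 - gb / fp16_gb:.0%} smaller than FP16)")
```

This is why quantization often decides whether a model fits on one GPU node or needs two.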

5. Re-evaluate Quarterly

Model price-performance shifts every few months. What cost $30K in January might cost $5K by June. Stay nimble and benchmark regularly.

Transform Tokens into Business Wins

Cost per token tells only half the story. Tie each query to business outcomes:

```mermaid
flowchart LR
    subgraph "ROI Calculation"
        TC[Token Cost<br/>$5K/month] --> ROI{ROI Analysis}
        EG[Efficiency Gains<br/>$20K/month] --> ROI
        RL[Revenue Lift<br/>$15K/month] --> ROI
        DS[Direct Savings<br/>$10K/month] --> ROI
        EW[Experience Wins<br/>Reduced Churn] --> ROI
        ROI --> Result[700% ROI<br/>in 4 months]
    end
    style TC fill:#ffcdd2,stroke:#f44336
    style Result fill:#c8e6c9,stroke:#4caf50
```

Key Value Drivers:

- Efficiency Gains: Faster paperwork, shorter handle times

- Revenue Lift: Higher conversion, bigger basket size

- Direct Savings: Fewer retries, smaller support team

- Experience Wins: Better satisfaction, lower churn

FinSecure hit breakeven in under five months because annual value dwarfed the new $60K run rate. Focus on total ROI, not just token costs.
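To run the same kind of analysis on your own numbers, a simple ROI and payback calculation is enough to start. The sketch below uses the monthly figures from the ROI diagram; the $100K upfront migration cost is purely hypothetical, since the article does not state one. Note that this simple ratio on monthly run cost alone does not reproduce the diagram's 700% figure, which evidently rests on a cost basis that is not itemized here.

```python
def monthly_roi_percent(monthly_value: float, monthly_cost: float) -> float:
    """Simple ROI: net monthly benefit divided by monthly cost, as a percentage."""
    return (monthly_value - monthly_cost) / monthly_cost * 100


def payback_months(upfront_cost: float, monthly_value: float, monthly_cost: float) -> float:
    """Months until cumulative net benefit covers a one-time investment."""
    net = monthly_value - monthly_cost
    if net <= 0:
        raise ValueError("no payback: monthly cost meets or exceeds monthly value")
    return upfront_cost / net


# Figures from the ROI diagram (USD per month).
value = 20_000 + 15_000 + 10_000  # efficiency gains + revenue lift + direct savings
run_cost = 5_000

# Hypothetical one-time migration cost; the article does not state one.
UPFRONT = 100_000

print(f"Monthly ROI on run cost: {monthly_roi_percent(value, run_cost):.0f}%")
print(f"Payback period: {payback_months(UPFRONT, value, run_cost):.1f} months")
```

Experience wins such as reduced churn are left out because the diagram gives them no dollar value; add them once you can measure them.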

People, Pace, and the Price of Agility

The Human Capital Equation

Adopting open-source models in-house isn’t just a hardware decision—it’s a staffing commitment:

- Required Talent: ML engineers ($200K+), MLOps specialists ($180K+), data scientists ($150K+)

- Opportunity Cost: Every dollar on AI infrastructure is a dollar not building products

- Talent Scarcity: Good ML engineers are harder to find than GPUs

The Agility Advantage

When a managed LLM costs $5 to draft a legal brief that normally bills $1,000, the arithmetic changes. Consider:

- Model Innovation Pace: New models launch monthly

- Switching Costs: APIs allow instant upgrades

- Strategic Flexibility: Capture upside immediately

The Hybrid Sweet Spot

```mermaid
stateDiagram-v2
    [*] --> Start: Small Scale
    Start --> API: Use Managed APIs
    API --> Growth: Usage Grows
    Growth --> Hybrid: Add Open Source
    Hybrid --> Optimize: Route & Balance
    Optimize --> Scale: Millions Saved
    note right of API : Fast start, high agility
    note right of Hybrid : Best of both worlds
    note right of Scale : Maximum efficiency
```

Most successful teams evolve through stages:

- Start with APIs: Launch fast, learn quickly

- Add open source: Route predictable workloads

- Optimize mix: Balance cost, quality, and agility

- Scale smartly: Save millions while staying flexible

Your Escape Plan: From Trap to Triumph

Week 1-2: Assess and Baseline

- Audit current LLM spending across all pillars

- Identify top 20% of use cases by volume

- Benchmark quality requirements
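The audit can start as a simple roll-up of usage logs by use case. This sketch assumes you can export records as `(use_case, tokens)` pairs and that a single blended price per million tokens is close enough for a first pass. The record format, the function name, and the sample numbers are all hypothetical.

```python
from collections import defaultdict


def spend_by_use_case(records, price_per_million: float):
    """Sum token spend per use case from (use_case, tokens) usage records."""
    totals = defaultdict(float)
    for use_case, tokens in records:
        totals[use_case] += tokens / 1_000_000 * price_per_million
    # Highest spend first, so the biggest optimization targets lead the list
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical usage export: (use case, tokens consumed)
usage = [
    ("support", 2_000_000),
    ("kyc", 500_000),
    ("support", 1_000_000),
]

for use_case, dollars in spend_by_use_case(usage, price_per_million=10.0):
    print(f"{use_case:>8}: ${dollars:,.2f}")
```

The top few rows of this list are usually where caching and routing pay off first.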

Week 3-4: Quick Wins

- Implement basic caching (30-50% reduction)

- Add request batching for async workloads

- Optimize prompts for token efficiency

Month 2: Architecture Evolution

- Deploy router model for request classification

- Test open-source alternatives for common tasks

- Build monitoring and cost attribution

Month 3: Scale and Optimize

- Fine-tune open-source models for your domain

- Implement semantic caching

- Establish quarterly review process

Month 4+: Continuous Improvement

- A/B test model combinations

- Explore quantization and optimization

- Share learnings across teams

Conclusion: Turn Cost Challenges into Competitive Advantages

LLMs unlock transformative products and faster service, yet they punish sloppy design. The teams that thrive treat them as living systems requiring constant optimization. They budget beyond the token, start small, route smart, and tune often.

The cost trap is real, but so is the escape route. By understanding the four pillars, using the provider ecosystem wisely, and applying proven optimization strategies, you can cut costs by up to 90% while improving performance.

When you master these techniques, “bill shock” transforms into an engine for long-term growth. Your competitors struggle with runaway costs while you build sustainable AI advantages.

Ready to escape the LLM cost trap? Start with one optimization this week. Measure the impact. Then compound your savings month after month. The playbook is proven—now it’s your turn to execute.

References

- OpenAI GPT-4o pricing, OpenAI Pricing Page

- Anthropic Claude 4 pricing, Anthropic Documentation

- Google Gemini 2.5 Pro pricing, Gemini API Pricing

- AWS EC2 Instance Comparison, Vantage Cloud Cost Report
