January 9, 2025

🚀 What’s New in This 2025 Update

Major Updates and Changes

- Kafka 4.0.0 Architecture - Complete removal of ZooKeeper dependency

- KRaft Mode - Kafka’s native consensus protocol now mandatory

- Performance Enhancements - Faster rebalancing and failover

- New Consumer Group Protocol - KIP-848 is generally available; consumers opt in with group.protocol=consumer

- Cloud-Native Features - Docker images and BYOC support

- Java Requirements - Java 17 for brokers, Java 11+ for clients

Removed Features

- ❌ ZooKeeper - Completely removed in Kafka 4.0.0

- ❌ Legacy Wire Formats - Pre-0.10.x formats no longer supported

- ❌ Java 8 - No longer supported

- ❌ --zookeeper CLI flags - Removed from all admin tools

Ready to master Apache Kafka’s revolutionary architecture? Let’s dive into the distributed streaming platform that powers real-time data at scale.

Kafka Architecture

mindmap
  root((Kafka 4.0 Architecture))
    Core Components
      Records
      Topics
      Partitions
      Brokers
    Data Flow
      Producers
      Consumers
      Consumer Groups
    Storage
      Logs
      Segments
      Compaction
    Distributed System
      KRaft Consensus
      Replication
      Clusters
    Modern Features
      Cloud-Native
      Streaming
      Connect

Kafka is built from a small set of concepts: Records, Topics, Consumers, Producers, Brokers, Logs, Partitions, and Clusters. A Record contains an optional key, a value, headers, and a timestamp, and it is immutable once written. Think of a Kafka Topic as a named stream of records (for example "orders" or "user-signups"): your data highway. Each topic maintains a Log (on-disk storage) that is split into partitions, and each partition is stored as a sequence of segment files, which is what lets a topic scale. The Producer API streams data in, while the Consumer API streams data out. Brokers (Kafka servers) form clusters that can handle millions of messages per second.
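
To make the record and log vocabulary concrete, here is a minimal in-memory sketch. It is not Kafka's implementation: `Record` and `PartitionLog` are hypothetical names chosen for illustration, and a Python list stands in for the on-disk segments.

```python
import time
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class Record:
    value: bytes
    key: Optional[bytes] = None                      # the key is optional
    headers: Tuple[Tuple[str, bytes], ...] = ()      # immutable header pairs
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))


class PartitionLog:
    """In-memory stand-in for one partition's append-only log."""

    def __init__(self) -> None:
        self._records = []

    def append(self, record: Record) -> int:
        """Append at the end and return the new record's offset."""
        self._records.append(record)
        return len(self._records) - 1

    def read(self, offset: int, max_records: int = 10):
        """Return up to max_records records starting at offset."""
        return self._records[offset:offset + max_records]


log = PartitionLog()
first = log.append(Record(key=b"order-1", value=b"created"))
second = log.append(Record(value=b"record without a key"))
print(first, second)  # offsets are assigned in append order
```

Records are only ever appended, so an offset keeps identifying the same record for as long as that record is retained.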

Cloudurable provides Kafka training, Kafka consulting, Kafka support and helps setting up Kafka clusters in AWS.

Topics, Producers and Consumers

flowchart TB

subgraph Kafka Cluster

direction TB

T1[Topic: Orders]

T2[Topic: User Signups]

T3[Topic: Payments]

B1[Broker 1]

B2[Broker 2]

B3[Broker 3]

T1 --> B1

T2 --> B2

T3 --> B3

end

P1[Producer 1] -->|Writes| T1

P2[Producer 2] -->|Writes| T2

P3[Producer 3] -->|Writes| T3

C1[Consumer Group A] -->|Reads| T1

C2[Consumer Group B] -->|Reads| T2

C3[Consumer Group C] -->|Reads| T3

classDef default fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333

class P1,P2,P3,C1,C2,C3,T1,T2,T3,B1,B2,B3 default

Step-by-Step Explanation:

- Producers write messages to specific topics

- A topic's partitions are distributed across multiple brokers for scalability

- Consumer groups read from topics independently

- Each broker handles multiple topic partitions

- Data flows from producers through topics to consumers
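
The flow above can be sketched in a few lines: a keyed record maps to a partition, and partitions are spread over brokers. This is an illustration under assumptions. Kafka's Java client hashes keys with murmur2, while the sketch uses `zlib.crc32` only because it ships with Python. The round-robin placement, the broker names, and the function names are hypothetical.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition. crc32 is a stand-in for Kafka's murmur2 hash."""
    return zlib.crc32(key) % num_partitions


def place_partitions(num_partitions: int, brokers: list) -> dict:
    """Naive round-robin placement of partition leaders on brokers."""
    return {p: brokers[p % len(brokers)] for p in range(num_partitions)}


brokers = ["broker-1", "broker-2", "broker-3"]   # hypothetical broker names
placement = place_partitions(6, brokers)
partition = choose_partition(b"customer-42", 6)

# The same key always lands on the same partition, which preserves per-key order.
print(partition, placement[partition])
```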

Core Kafka with KRaft

KRaft: The New Heart of Kafka

Kafka 4.0 eliminates ZooKeeper entirely. KRaft (Kafka Raft) handles:

- Leader election for the controller quorum and for partitions

- Metadata management across the cluster

- Configuration storage and propagation

- Broker registration and liveness tracking for dynamic broker management

This architectural shift delivers faster failover and simpler operations: with ZooKeeper gone, there is one less system to monitor, secure, and scale.
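
As a rough illustration of what a ZooKeeper-free node looks like, the sketch below renders a small KRaft configuration fragment. The property names are standard Kafka settings. The host names, ports, and data directory are assumptions, and a real deployment needs more settings (advertised listeners, security, and so on). `render_properties` is a hypothetical helper.

```python
def render_properties(props: dict) -> str:
    """Render a dict as Java-style key=value properties lines."""
    return "\n".join(f"{key}={value}" for key, value in props.items())


# Combined-mode node: acts as both broker and controller (hosts are made up).
kraft_node = {
    "process.roles": "broker,controller",
    "node.id": 1,
    "controller.quorum.voters": "1@kafka-1:9093,2@kafka-2:9093,3@kafka-3:9093",
    "listeners": "PLAINTEXT://:9092,CONTROLLER://:9093",
    "controller.listener.names": "CONTROLLER",
    "log.dirs": "/var/lib/kafka/data",
}

text = render_properties(kraft_node)
print(text)
```

Note that no ZooKeeper connection setting appears anywhere: the controller quorum listed in `controller.quorum.voters` takes over that role.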

Producer, Consumer, Topic Details

Producers write to Topics. Consumers read from Topics. Simple? The magic happens underneath.

Each topic’s log structure enables:

- Sequential writes - Blazing fast disk I/O

- Parallel reads - Multiple consumers process simultaneously

- Configurable retention - Time or size-based cleanup

- Offset tracking - Consumers control their position

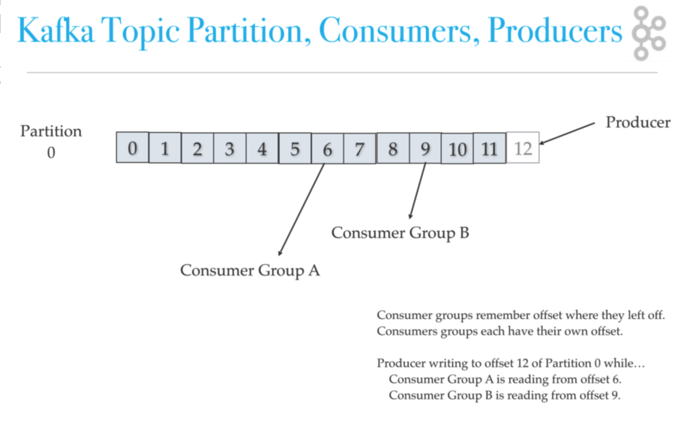

Topic logs split into partitions spread across nodes. Consumers in a group coordinate to process partitions in parallel. Kafka replicates partitions for fault tolerance.
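
Offset tracking is easier to see in code. The following sketch assumes a plain list as the partition log and a hypothetical `OffsetTracker` that stores, per group and partition, the offset of the next record to read. It illustrates the idea and is not the consumer API.

```python
class OffsetTracker:
    """Committed position per (group, partition); unknown groups start at 0."""

    def __init__(self) -> None:
        self._committed = {}

    def position(self, group: str, partition: int) -> int:
        return self._committed.get((group, partition), 0)

    def commit(self, group: str, partition: int, next_offset: int) -> None:
        self._committed[(group, partition)] = next_offset


def poll(log: list, tracker: OffsetTracker, group: str, partition: int,
         max_records: int = 2) -> list:
    """Read a batch from the group's position, then commit the new position."""
    start = tracker.position(group, partition)
    batch = log[start:start + max_records]
    tracker.commit(group, partition, start + len(batch))
    return batch


partition_0 = ["a", "b", "c", "d", "e"]
tracker = OffsetTracker()

billing_1 = poll(partition_0, tracker, "billing", 0)  # first two records
billing_2 = poll(partition_0, tracker, "billing", 0)  # continues where it left off
audit_1 = poll(partition_0, tracker, "audit", 0)      # separate group, starts at 0

tracker.commit("billing", 0, 0)                       # rewind to replay from the start
replay = poll(partition_0, tracker, "billing", 0)
```

Because the log itself is never modified by reads, each group advances or rewinds its own position without affecting any other group.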

Topic Partition, Consumer Groups, and Offsets

classDiagram

class Topic {

+name: string

+partitions: Partition[]

+replicationFactor: number

+retentionMs: long

+addPartition(): void

+getPartition(id: number): Partition

}

class Partition {

+id: number

+leader: Broker

+replicas: Broker[]

+logSegments: Segment[]

+highWatermark: long

+appendRecord(record: Record): void

}

class ConsumerGroup {

+groupId: string

+members: Consumer[]

+coordinator: Broker

+protocol: string

+rebalance(): void

+commitOffset(partition: number, offset: long): void

}

class Producer {

+clientId: string

+acks: string

+compressionType: string

+send(record: Record): Future

+flush(): void

}

Topic "1" *-- "many" Partition

ConsumerGroup "many" -- "many" Partition : reads from

Producer "many" -- "many" Partition : writes to

Step-by-Step Explanation:

- Topics contain multiple partitions for parallelism

- Each partition has a leader broker and replicas

- Consumer groups coordinate partition assignment

- Producers can write to multiple partitions

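- The new KIP-848 protocol moves partition assignment to the broker-side group coordinator, so rebalances are incremental rather than stop-the-world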
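
To show what "coordinating partition assignment" means, here is a deliberately naive round-robin assignor. It is not the KIP-848 assignor or any built-in Kafka strategy. The function name and member IDs are hypothetical.

```python
def assign(partitions: list, members: list) -> dict:
    """Deal partitions to group members in round-robin order."""
    if not members:
        return {}
    ordered = sorted(members)
    assignment = {member: [] for member in ordered}
    for index, partition in enumerate(sorted(partitions)):
        assignment[ordered[index % len(ordered)]].append(partition)
    return assignment


partitions = [0, 1, 2, 3]
two = assign(partitions, ["c1", "c2"])
three = assign(partitions, ["c1", "c2", "c3"])          # a member joined
five = assign(partitions, ["c1", "c2", "c3", "c4", "c5"])

print(two)
print(three)
print(five)  # with more members than partitions, at least one member is idle
```

Each partition is owned by exactly one member of the group at a time, so the partition count caps how many consumers in a group can do useful work.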

Scale and Speed

Ever wondered how Kafka handles billions of messages? The secret sauce:

Write Performance:

- Sequential disk writes can reach 700+ MB/second on suitable hardware (actual throughput depends on disks and configuration)

- Partitioning enables parallel writes across brokers

Read Performance:

- Consumer groups parallelize processing

- Batch fetching reduces network overhead

- Smart caching leverages OS page cache

- Zero-copy transfer sends log data from the page cache to the network socket with minimal CPU overhead

Horizontal Scaling:

- Add brokers to increase capacity

- Partition count determines parallelism

- The KRaft controller spreads new partitions across brokers; existing partitions move to new brokers only through partition reassignment
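
Since partition count determines parallelism, a common sizing rule of thumb (not taken from this article) is to provision enough partitions for whichever side is slower per partition. The throughput numbers below are made-up inputs that you would replace with your own measurements.

```python
import math


def partitions_needed(target_mb_s: float,
                      producer_mb_s_per_partition: float,
                      consumer_mb_s_per_partition: float) -> int:
    """Rule of thumb: max(target / producer rate, target / consumer rate)."""
    for_producers = math.ceil(target_mb_s / producer_mb_s_per_partition)
    for_consumers = math.ceil(target_mb_s / consumer_mb_s_per_partition)
    return max(for_producers, for_consumers)


# Hypothetical measurements: 200 MB/s target, 20 MB/s per partition when
# producing, 10 MB/s per partition when consuming.
estimate = partitions_needed(200, 20, 10)
print(estimate)
```

Treat the result as a starting point. More partitions also mean more open files and longer recovery times.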

Kafka Brokers

A Kafka cluster scales from 3 to 1,000+ brokers. Each broker:

- Has a unique ID

- Stores partition replicas

- Handles client connections

- Replicates cluster metadata from the KRaft controller quorum (and votes in it only when it also runs the controller role)

Bootstrap a client by connecting to any broker. That broker returns the cluster metadata, so the client discovers the remaining brokers and partition leaders automatically.

Cluster, Failover, and ISRs

stateDiagram-v2

[*] --> Healthy: All replicas in sync

Healthy --> LeaderFailure: Leader broker crashes

LeaderFailure --> ElectingLeader: KRaft consensus

ElectingLeader --> NewLeader: ISR promoted

NewLeader --> Recovering: Sync replicas

Recovering --> Healthy: All replicas caught up

state Healthy {

[*] --> Normal

Normal --> Writing: Producer sends

Writing --> Replicating: To ISRs

Replicating --> Normal: Acknowledged

}

classDef healthy fill:#c8e6c9,stroke:#43a047

classDef failure fill:#ffcdd2,stroke:#e53935

classDef election fill:#bbdefb,stroke:#1976d2

classDef recovering fill:#fff9c4,stroke:#f9a825

class Healthy healthy

class LeaderFailure failure

class ElectingLeader,NewLeader election

class Recovering recovering

Step-by-Step Explanation:

- Healthy state: Leader handles writes, replicates to ISRs

- Leader failure triggers automatic failover

- The active KRaft controller elects a new leader from the ISRs

- New leader accepts writes immediately

- Cluster recovers to full replication
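
The election step can be sketched as a simple selection over the replica list. This is a simplified model under assumptions, not the controller's actual code: `elect_leader` is a hypothetical function, and broker IDs are plain integers.

```python
from typing import Optional


def elect_leader(replicas: list, isr: set, live_brokers: set,
                 allow_unclean: bool = False) -> Optional[int]:
    """Pick the first live replica that is in the ISR.

    If none exists and unclean election is allowed, fall back to any live
    replica, accepting that it may be missing acknowledged records.
    """
    for broker in replicas:
        if broker in isr and broker in live_brokers:
            return broker
    if allow_unclean:
        for broker in replicas:
            if broker in live_brokers:
                return broker
    return None  # partition stays offline until an ISR member returns


# Broker 1 (the leader) crashes; brokers 2 and 3 are alive and in sync.
clean = elect_leader([1, 2, 3], isr={1, 2, 3}, live_brokers={2, 3})

# Only broker 1 was in sync when it crashed.
offline = elect_leader([1, 2, 3], isr={1}, live_brokers={2, 3})
unclean = elect_leader([1, 2, 3], isr={1}, live_brokers={2, 3}, allow_unclean=True)
```

The last two calls show the trade-off behind unclean leader election: keep the partition offline, or bring it back with a replica that may have lost data.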

Kafka achieves high availability through:

- Replication Factor - Set to 3+ for production

- ISRs (In-Sync Replicas) - Replicas caught up with leader

- Min ISRs (min.insync.replicas) - Minimum number of in-sync replicas that must hold a write before a producer using acks=all gets an acknowledgment

- Unclean Leader Election - Trade-off between availability and consistency
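
How replication factor, ISR size, and the minimum ISR setting interact is easiest to see as a small decision function. This is a simplified model, assuming a partition that still has a leader. `can_accept_write` is a hypothetical name, and `acks` is passed as the string a producer would configure.

```python
def can_accept_write(acks: str, isr_size: int, min_insync_replicas: int) -> bool:
    """Model the broker-side check applied to a produce request."""
    if acks == "all":
        # The write is rejected unless enough replicas are currently in sync.
        return isr_size >= min_insync_replicas
    # acks=0 or acks=1 only need the leader, which is itself an ISR member.
    return isr_size >= 1


# Replication factor 3 with a minimum ISR of 2:
healthy = can_accept_write("all", isr_size=3, min_insync_replicas=2)
one_down = can_accept_write("all", isr_size=2, min_insync_replicas=2)
two_down = can_accept_write("all", isr_size=1, min_insync_replicas=2)
leader_only = can_accept_write("1", isr_size=1, min_insync_replicas=2)
```

With these settings the partition tolerates one failed replica without rejecting `acks=all` writes. A second failure blocks those writes rather than risking data loss.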

Failover vs. Disaster Recovery

Failover (Automatic):

- Replication handles rack/AZ failures

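- RF=3 survives a single AZ outage when replicas are spread across AZs (rack awareness via broker.rack)

- Sub-second leader election with KRaft once a failure is detected

- No loss of acknowledged writes with proper configuration (acks=all, min.insync.replicas of at least 2, unclean leader election disabled)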

Disaster Recovery (Manual):

- MirrorMaker 2.0 for cross-region replication

- Active-passive or active-active setups

- RPO/RTO depends on replication lag

- Automated failover possible with additional tooling
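
The link between replication lag and RPO can be estimated with simple arithmetic. The sketch below assumes you can obtain end offsets from the source cluster and mirrored offsets from the target. The function names, offsets, and produce rate are all hypothetical, and the result is a rough estimate rather than a guarantee.

```python
def replication_lag(source_end_offsets: dict, mirrored_offsets: dict) -> dict:
    """Per-partition count of records not yet copied to the target cluster."""
    return {
        partition: end - mirrored_offsets.get(partition, 0)
        for partition, end in source_end_offsets.items()
    }


def estimated_rpo_seconds(total_lag_records: int, produce_rate_per_sec: float) -> float:
    """Approximate how many seconds of data would be lost if the source died now."""
    return total_lag_records / produce_rate_per_sec


lag = replication_lag({0: 1000, 1: 1500}, {0: 940, 1: 1440})
rpo = estimated_rpo_seconds(sum(lag.values()), produce_rate_per_sec=40)
print(lag, rpo)
```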

Modern Kafka Architecture with KRaft

flowchart TB

subgraph "Kafka 4.0 Cluster"

direction TB

subgraph "KRaft Controllers"

KC1[Controller 1<br>Leader]

KC2[Controller 2<br>Follower]

KC3[Controller 3<br>Follower]

KC1 -.->|Raft Consensus| KC2

KC1 -.->|Raft Consensus| KC3

end

subgraph "Kafka Brokers"

B1[Broker 1]

B2[Broker 2]

B3[Broker 3]

B4[Broker 4]

end

subgraph "Metadata"

MD[Cluster Metadata<br>Topics, Partitions, ACLs]

end

KC1 -->|Manages| MD

MD -->|Propagates to| B1

MD -->|Propagates to| B2

MD -->|Propagates to| B3

MD -->|Propagates to| B4

end

P[Producers] -->|Write| B1

P -->|Write| B2

C[Consumers] -->|Read| B3

C -->|Read| B4

classDef controller fill:#e1bee7,stroke:#8e24aa,stroke-width:2px,color:#333333

classDef broker fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333

classDef client fill:#c8e6c9,stroke:#43a047,stroke-width:1px,color:#333333

class KC1,KC2,KC3 controller

class B1,B2,B3,B4 broker

class P,C client

Step-by-Step Explanation:

- KRaft controllers form a Raft consensus group

- Controller leader manages all metadata

- Metadata propagates to all brokers

- Brokers handle client requests independently

- No external coordination service needed
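
Because the controllers form a Raft group, the number of controller failures the cluster can tolerate follows from majority-quorum arithmetic. A short worked example (the function names are illustrative):

```python
def majority(voters: int) -> int:
    """Smallest number of voters that forms a majority."""
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can fail while a majority remains."""
    return (voters - 1) // 2


for n in (3, 4, 5):
    print(n, majority(n), tolerated_failures(n))
```

An even number of controllers adds no fault tolerance over the next lower odd number, which is why quorums of 3 or 5 controllers are the usual choice.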

Kafka Topics Architecture

Ready to explore deeper? Continue reading about Kafka Topics Architecture to understand partitions, parallel processing, and advanced configurations.

Related Content

- What is Kafka?

- Kafka Architecture

- Kafka Topic Architecture

- Kafka Consumer Architecture

- Kafka Producer Architecture

- Kafka Architecture and Low Level Design

- Kafka and Schema Registry

- Kafka Ecosystem

- Kafka vs. JMS

- Kafka versus Kinesis

- Kafka Tutorial: Command Line

- Kafka Tutorial: Failover

- Kafka Tutorial

- Kafka Producer Example

- Kafka Consumer Example

- Kafka Log Compaction

