January 9, 2025

🚀 What’s New in This 2025 Update

Major Updates and Changes

- KRaft-Only Architecture - ZooKeeper completely eliminated

- Raft-Based Metadata Quorum - Controllers elect their own leader, with no external coordinator

- Java 17 Requirement - Modern JVM optimizations

- Protocol Cleanup - Removed pre-0.10.x formats

- Dynamic KRaft Quorums - Add/remove controllers without downtime

- Improved Atomic Writes - Enhanced exactly-once semantics

Deprecated Features

- ❌ ZooKeeper coordination - Fully removed

- ❌ Java 8 support - Clients need Java 11 or later; brokers need Java 17

- ❌ Legacy wire protocols - Pre-0.10.x formats gone

- ❌ ZooKeeper-based controller election - Replaced by the Raft metadata quorum (partition data still replicates through in-sync replicas)

Ready to understand how Kafka achieves its legendary performance? Let’s dive deep into the engineering decisions that make Kafka the backbone of modern data infrastructure.

Kafka Architecture: Low-Level Design

mindmap

root((Low-Level Design))

Persistence

Sequential I/O

Page Cache

Zero Copy

Log Segments

Network

Binary Protocol

Request Pipelining

Batch Processing

Compression

Replication

Raft Consensus

ISR Management

Leader Election

Atomic Writes

Performance

OS Optimization

JVM Tuning

Thread Models

Memory Management

Building on our Kafka architecture series including topics, producers, consumers, and log compaction, this article reveals the engineering brilliance behind Kafka’s performance.

Kafka Design Motivation

LinkedIn engineering created Kafka to solve a fundamental problem: handling massive real-time data streams efficiently. The design goals shaped every architectural decision:

- High throughput - Millions of messages per second

- Low latency - Message delivery in single-digit milliseconds

- Horizontal scalability - Add nodes to increase capacity

- Fault tolerance - Survive multiple failures

- Durability - Never lose committed data

- Operational simplicity - Easy to run at scale

flowchart TB

subgraph DesignPrinciples["Design Principles"]

P1[Distributed<br>Commit Log]

P2[Sequential<br>I/O]

P3[Zero<br>Copy]

P4[Batching<br>Everywhere]

P5[Smart<br>Clients]

end

subgraph Results["Results"]

R1[700+ MB/s<br>Per Broker]

R2[< 5ms<br>Latency]

R3[Millions of<br>Partitions]

R4[Petabyte<br>Scale]

end

P1 --> R3

P2 --> R1

P3 --> R2

P4 --> R1

P5 --> R4

classDef principle fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333

classDef result fill:#c8e6c9,stroke:#43a047,stroke-width:1px,color:#333333

class P1,P2,P3,P4,P5 principle

class R1,R2,R3,R4 result

Cloudurable provides Kafka training, Kafka consulting, Kafka support and helps setting up Kafka clusters in AWS.

Persistence: Embrace the Filesystem

Kafka’s genius begins with its storage strategy: trust the operating system.

Sequential I/O: The Speed Secret

flowchart LR

subgraph RandomIO["Random I/O (Slow)"]

D1[Disk] -->|Seek| H1[Head]

H1 -->|Read| B1[Block 42]

B1 -->|Seek| H2[Head]

H2 -->|Read| B2[Block 7]

B2 -->|Seek| H3[Head]

H3 -->|Read| B3[Block 99]

end

subgraph SequentialIO["Sequential I/O (Fast)"]

D2[Disk] -->|Read| S1[Block 1]

S1 -->|Read| S2[Block 2]

S2 -->|Read| S3[Block 3]

S3 -->|Continue| S4[...]

end

RandomIO -->|~100 IOPS| Slow[Slow: Seek Time Dominates]

SequentialIO -->|~600 MB/s| Fast[Fast: No Seeks]

classDef random fill:#ffcdd2,stroke:#e53935,stroke-width:1px,color:#333333

classDef sequential fill:#c8e6c9,stroke:#43a047,stroke-width:1px,color:#333333

class D1,H1,H2,H3,B1,B2,B3 random

class D2,S1,S2,S3,S4 sequential

Performance Numbers:

- Sequential writes: 600+ MB/s on SATA SSD, 2+ GB/s on NVMe

- Random writes on a spinning disk: 100-200 IOPS (about 0.4-0.8 MB/s at 4 KB per write)

- Roughly 1500x faster for sequential access when you compare those two extremes
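The ratio above is plain arithmetic, and it can help to see it spelled out. The sketch below assumes 4 KB per random write (an assumption, since the list does not state the block size) and decimal megabytes; the class and method names are illustrative only.

```java
final class IoThroughput {
    /** Throughput in MB/s (decimal) for a device doing `iops` writes of `blockBytes` each. */
    static double randomWriteMBps(int iops, int blockBytes) {
        return (double) iops * blockBytes / 1_000_000.0;
    }

    /** How many times faster the sequential rate is than the random one. */
    static double speedup(double sequentialMBps, double randomMBps) {
        return sequentialMBps / randomMBps;
    }

    public static void main(String[] args) {
        // Assumed inputs: 100 IOPS, 4 KB blocks, 600 MB/s sequential.
        double random = randomWriteMBps(100, 4096);
        System.out.printf("random: %.4f MB/s, speedup: %.0fx%n", random, speedup(600.0, random));
    }
}
```

With 100 IOPS the random rate comes out near 0.41 MB/s, which is where a figure in the neighborhood of 1500x comes from. At 200 IOPS the gap halves.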

OS Page Cache: Free Performance

// Kafka doesn't manage its own cache

// Instead, it leverages the OS page cache

// Write path - OS buffers in page cache

fileChannel.write(ByteBuffer.wrap(messageBytes));

// Read path - OS serves from memory if cached

fileChannel.read(buffer, offset);

// Zero-copy transfer - never enters JVM

fileChannel.transferTo(position, count, socketChannel);

Benefits:

- No GC pressure - Data stays outside JVM heap

- Warm cache on restart - OS cache survives process restart

- Automatic management - OS handles eviction optimally

- Read-ahead optimization - OS prefetches sequential data
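To make the write and read paths concrete, here is a minimal sketch that appends two payloads to a temporary file through a `FileChannel` and reads the second one back by position. It uses only JDK classes; `PageCacheLog` and its methods are hypothetical names, not Kafka's own log classes. Whether the read is served from the page cache is up to the operating system, and the code cannot observe that.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.Arrays;

final class PageCacheLog {
    /** Appends the payload at the end of the file and returns the position it starts at. */
    static long append(FileChannel channel, byte[] payload) throws IOException {
        long start = channel.size();
        long position = start;
        ByteBuffer buffer = ByteBuffer.wrap(payload);
        while (buffer.hasRemaining()) {
            position += channel.write(buffer, position); // the OS buffers this in the page cache
        }
        return start;
    }

    /** Reads up to `length` bytes starting at `position`. */
    static byte[] readAt(FileChannel channel, long position, int length) throws IOException {
        ByteBuffer buffer = ByteBuffer.allocate(length);
        while (buffer.hasRemaining()) {
            int n = channel.read(buffer, position + buffer.position());
            if (n < 0) break; // reached end of file
        }
        return Arrays.copyOf(buffer.array(), buffer.position());
    }

    /** Writes two strings to a temp file and returns the second one as read back from disk. */
    static String roundTrip(String first, String second) {
        try {
            Path file = Files.createTempFile("segment", ".log");
            try (FileChannel channel = FileChannel.open(
                    file, StandardOpenOption.READ, StandardOpenOption.WRITE)) {
                append(channel, first.getBytes(StandardCharsets.UTF_8));
                byte[] payload = second.getBytes(StandardCharsets.UTF_8);
                long position = append(channel, payload);
                return new String(readAt(channel, position, payload.length), StandardCharsets.UTF_8);
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Note that nothing here calls `force()`: the sketch leaves flushing to the OS, which matches the "trust the operating system" idea in this section.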

Log-Structured Storage

classDiagram

class TopicPartition {

+topic: String

+partition: Int

+logDir: Path

+segments: List~LogSegment~

+activeSegment: LogSegment

+append(records: Records): void

+read(offset: Long, maxBytes: Int): Records

}

class LogSegment {

+baseOffset: Long

+file: FileChannel

+index: OffsetIndex

+timeIndex: TimeIndex

+maxSegmentSize: Long

+append(records: Records): void

+read(offset: Long): Records

+close(): void

}

class OffsetIndex {

+entries: MappedByteBuffer

+lookup(offset: Long): Position

+append(offset: Long, position: Int): void

}

class TimeIndex {

+entries: MappedByteBuffer

+lookup(timestamp: Long): Offset

+append(timestamp: Long, offset: Long): void

}

TopicPartition "1" *-- "many" LogSegment

LogSegment "1" *-- "1" OffsetIndex

LogSegment "1" *-- "1" TimeIndex
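One way to picture how a read finds its segment in the diagram above: keep segments sorted by base offset and pick the one with the largest base offset that does not exceed the requested offset. The sketch below does this with a `TreeMap`. `SegmentLookup` is a hypothetical class, and the 20-digit zero-padded file name mirrors the naming seen in Kafka log directories.

```java
import java.util.Map;
import java.util.TreeMap;

final class SegmentLookup {
    // base offset -> segment file name, kept sorted by base offset
    private final TreeMap<Long, String> segments = new TreeMap<>();

    /** Registers a segment whose first record has the given base offset. */
    void addSegment(long baseOffset) {
        segments.put(baseOffset, String.format("%020d.log", baseOffset));
    }

    /** Returns the segment file that would hold `offset`, or null if it precedes every segment. */
    String segmentFor(long offset) {
        Map.Entry<Long, String> entry = segments.floorEntry(offset);
        return entry == null ? null : entry.getValue();
    }

    public static void main(String[] args) {
        SegmentLookup log = new SegmentLookup();
        log.addSegment(0L);
        log.addSegment(1000L);
        log.addSegment(2000L);
        System.out.println(log.segmentFor(1500L));
    }
}
```

The same floor-lookup idea applies inside a segment: a sparse offset index maps some offsets to file positions, and the reader scans forward from the nearest indexed entry at or below the target.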

Producer Load Balancing and Batching

Smart Client Design

Kafka producers are “smart clients” that:

- Discover cluster topology - Query any broker for metadata

- Route directly to leaders - No proxy layer needed

- Batch intelligently - Accumulate records by partition

- Handle failures - Retry with exponential backoff
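The routing step in the list above can be sketched as a hash of the record key mapped onto the partition count, followed by a lookup of that partition's leader. This is a simplified stand-in: Kafka's default partitioner uses a murmur2 hash, while the sketch uses `Arrays.hashCode`, and the `leaders` array is a hypothetical substitute for cluster metadata.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class KeyRouter {
    /** Maps a key to a partition in [0, numPartitions). The same key always maps to the same partition. */
    static int partitionFor(String key, int numPartitions) {
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        return (hash & 0x7fffffff) % numPartitions; // mask the sign bit so the result is non-negative
    }

    /** Picks the broker to send to, given the leader of each partition (index = partition id). */
    static String leaderFor(String key, String[] leaders) {
        return leaders[partitionFor(key, leaders.length)];
    }

    public static void main(String[] args) {
        String[] leaders = {"broker-1", "broker-2", "broker-3"}; // hypothetical metadata
        System.out.println("order-42 -> " + leaderFor("order-42", leaders));
    }
}
```

Because the client computes the partition itself and already knows the leader, the record goes straight to the right broker with no proxy in between.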

Advanced Batching Strategy

stateDiagram-v2

[*] --> Accumulating: Producer sends

state Accumulating {

[*] --> Collecting

Collecting --> BatchReady: Size limit OR

Collecting --> BatchReady: Time limit

BatchReady --> Compressing: Optional

Compressing --> Ready

}

Accumulating --> Sending: Batch ready

state Sending {

[*] --> InFlight

InFlight --> WaitingAck: Network send

WaitingAck --> Success: ACK received

WaitingAck --> Retry: Failure

Retry --> InFlight: Backoff

}

Sending --> [*]: Complete

classDef accumulating fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333

classDef sending fill:#e1bee7,stroke:#8e24aa,stroke-width:1px,color:#333333

class Accumulating,Collecting,BatchReady,Compressing,Ready accumulating

class Sending,InFlight,WaitingAck,Success,Retry sending

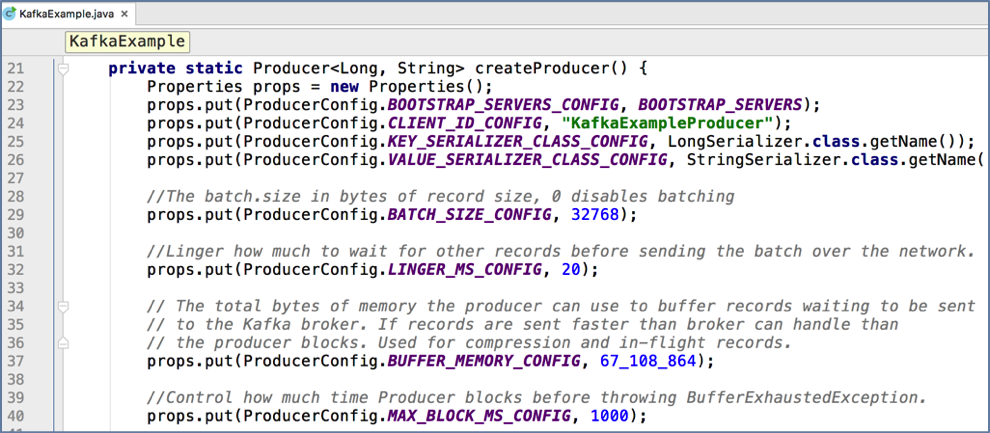

Batching Configuration:

// Batch size - larger = better throughput

props.put("batch.size", 16384); // 16KB default

// Linger time - wait for more records

props.put("linger.ms", 10); // 10ms delay

// Memory buffer for batching

props.put("buffer.memory", 33554432); // 32MB

// Compression - batch level

props.put("compression.type", "lz4");

Producer Batching Architecture
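The interplay of `batch.size` and `linger.ms` can be sketched as a small accumulator that releases a batch when either limit is hit. This is a simplified model and not the producer's actual accumulator: it tracks one partition, ignores compression and memory limits, and takes the current time as a parameter so the behavior is easy to follow. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class BatchAccumulator {
    private final int batchSizeBytes;
    private final long lingerMs;
    private final List<byte[]> pending = new ArrayList<>();
    private int pendingBytes = 0;
    private long firstAppendMs = -1;

    BatchAccumulator(int batchSizeBytes, long lingerMs) {
        this.batchSizeBytes = batchSizeBytes;
        this.lingerMs = lingerMs;
    }

    /** Adds a record; returns the batch if the size limit was reached, otherwise null. */
    List<byte[]> append(byte[] record, long nowMs) {
        if (pending.isEmpty()) {
            firstAppendMs = nowMs; // the linger clock starts with the first record
        }
        pending.add(record);
        pendingBytes += record.length;
        return pendingBytes >= batchSizeBytes ? drain() : null;
    }

    /** Returns the pending batch if the linger time has elapsed, otherwise null. */
    List<byte[]> poll(long nowMs) {
        boolean expired = !pending.isEmpty() && nowMs - firstAppendMs >= lingerMs;
        return expired ? drain() : null;
    }

    private List<byte[]> drain() {
        List<byte[]> batch = new ArrayList<>(pending);
        pending.clear();
        pendingBytes = 0;
        return batch;
    }
}
```

Under heavy load the size limit fires first and batches go out full; under light load the linger timer bounds how long a lone record waits.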

Network Protocol and Zero-Copy

Binary Protocol Efficiency

flowchart TB

subgraph ProducerRequest["Producer Request"]

H1[Request Header<br>16 bytes]

P1[Partition 1<br>Compressed Batch]

P2[Partition 2<br>Compressed Batch]

P3[Partition 3<br>Compressed Batch]

end

subgraph NetworkTransfer["Network Transfer"]

TCP[TCP Connection]

SSL[Optional TLS]

POOL[Connection Pool]

end

subgraph BrokerProcessing["Broker Processing"]

NIO[NIO Thread]

APPEND[Append to Log]

ZERO[Zero-Copy to Followers]

end

H1 --> TCP

P1 --> TCP

P2 --> TCP

P3 --> TCP

TCP --> NIO

NIO --> APPEND

APPEND --> ZERO

classDef request fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333

classDef network fill:#fff9c4,stroke:#f9a825,stroke-width:1px,color:#333333

classDef broker fill:#e1bee7,stroke:#8e24aa,stroke-width:1px,color:#333333

class H1,P1,P2,P3 request

class TCP,SSL,POOL network

class NIO,APPEND,ZERO broker
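To give a feel for what "binary protocol" means here, the sketch below encodes a size-prefixed request header with `ByteBuffer`: a 4-byte length, then API key, API version, correlation id, and a length-prefixed client id. The field order follows the request header layout in the Kafka protocol guide as I understand it (header version 1), but treat it as illustrative. The request body is omitted, so the size prefix covers the header only, and the values in `main` are placeholders.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class RequestFraming {
    /** Encodes a 4-byte size prefix followed by the header fields, in network byte order. */
    static ByteBuffer encodeHeader(short apiKey, short apiVersion, int correlationId, String clientId) {
        byte[] id = clientId.getBytes(StandardCharsets.UTF_8);
        int headerSize = 2 + 2 + 4 + 2 + id.length;
        ByteBuffer buffer = ByteBuffer.allocate(4 + headerSize); // ByteBuffer is big-endian by default
        buffer.putInt(headerSize);        // size of everything after this field
        buffer.putShort(apiKey);          // which API is being called
        buffer.putShort(apiVersion);      // version of that API
        buffer.putInt(correlationId);     // echoed back so responses can be matched to requests
        buffer.putShort((short) id.length);
        buffer.put(id);
        buffer.flip();                    // switch from writing to reading
        return buffer;
    }

    public static void main(String[] args) {
        // Placeholder values: API key 0, version 8, correlation id 42.
        ByteBuffer frame = encodeHeader((short) 0, (short) 8, 42, "app");
        System.out.println("frame bytes: " + frame.remaining());
    }
}
```

The correlation id is what makes request pipelining workable: a client can have several requests in flight on one connection and still pair each response with its request.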

Zero-Copy Transfer

// Traditional read() + write() (4 copies, 4 context switches)

// 1. Read: Disk → Kernel buffer (DMA)

// 2. Copy: Kernel buffer → User buffer (read() returns to user space)

// 3. Copy: User buffer → Socket buffer (write() enters the kernel)

// 4. Write: Socket buffer → Network card (DMA)

// Kafka zero-copy via sendfile (2 copies, 2 context switches)

// 1. Read: Disk → Kernel buffer (DMA)

// 2. Transfer: Kernel buffer → Network card (DMA, given scatter-gather support)

public long sendfile(FileChannel file, long position,

long count, WritableByteChannel socket) {

return file.transferTo(position, count, socket);

}
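One practical detail with `transferTo`: it may move fewer bytes than requested, so callers usually loop. The sketch below shows such a loop and a small demo that transfers a temporary file into an in-memory channel. The demo target is not a socket, so the JDK falls back to ordinary copying there; it only exercises the loop. `TransferLoop` is a hypothetical helper, and stopping when `transferTo` returns 0 is a simplification that a non-blocking socket would handle differently.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.Channels;
import java.nio.channels.FileChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class TransferLoop {
    /** Repeats transferTo until `count` bytes are sent or no progress is made; returns bytes sent. */
    static long transferFully(FileChannel file, long position, long count,
                              WritableByteChannel target) throws IOException {
        long sent = 0;
        while (sent < count) {
            long n = file.transferTo(position + sent, count - sent, target);
            if (n <= 0) break; // end of file, or the target accepted nothing
            sent += n;
        }
        return sent;
    }

    /** Writes `data` to a temp file, transfers it to an in-memory channel, returns bytes received. */
    static int demo(byte[] data) {
        try {
            Path file = Files.createTempFile("transfer", ".bin");
            try {
                Files.write(file, data);
                ByteArrayOutputStream out = new ByteArrayOutputStream();
                try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
                    transferFully(channel, 0, channel.size(), Channels.newChannel(out));
                }
                return out.size();
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With a real `SocketChannel` as the target on Linux, the same call can map to `sendfile`, which is where the saved copies come from.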

Modern Replication with Raft

KRaft Consensus Architecture

flowchart TB

subgraph KRaftCluster["KRaft Controller Cluster"]

C1[Controller 1<br>Leader<br>Term: 5]

C2[Controller 2<br>Follower]

C3[Controller 3<br>Follower]

C1 -->|AppendEntries| C2

C1 -->|AppendEntries| C3

C2 -.->|Vote| C1

C3 -.->|Vote| C1

end

subgraph RaftLog["Raft Log"]

L1[Entry 1: Create Topic]

L2[Entry 2: Elect Leader P0]

L3[Entry 3: Update ISR]

L4[Entry 4: Broker Join]

L1 --> L2 --> L3 --> L4

end

subgraph Brokers["Kafka Brokers"]

B1[Broker 1]

B2[Broker 2]

B3[Broker 3]

B4[Broker 4]

end

C1 -->|Metadata| B1

C1 -->|Metadata| B2

C1 -->|Metadata| B3

C1 -->|Metadata| B4

classDef leader fill:#c8e6c9,stroke:#43a047,stroke-width:2px,color:#333333

classDef follower fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333

classDef log fill:#e1bee7,stroke:#8e24aa,stroke-width:1px,color:#333333

class C1 leader

class C2,C3 follower

class L1,L2,L3,L4 log
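The core rule behind the diagram is that an entry counts as committed once a majority of controllers have it. A minimal sketch of that rule, in generic Raft terms rather than Kafka's actual KRaft code: given the last log index each voter has replicated, the commit index is the highest index present on a majority. Real Raft also requires the entry to come from the leader's current term, which this sketch leaves out.

```java
import java.util.Arrays;

final class QuorumCommit {
    /** Number of voters needed for a majority. */
    static int majority(int voters) {
        return voters / 2 + 1;
    }

    /** Highest log index replicated on a majority, given each voter's last replicated index. */
    static long commitIndex(long[] matchIndexes) {
        long[] sorted = matchIndexes.clone();
        Arrays.sort(sorted);
        // After sorting, the value at this slot is held by at least `majority` voters.
        return sorted[sorted.length - majority(sorted.length)];
    }

    public static void main(String[] args) {
        // Leader has entry 4, followers have 2 and 3: entries up to 3 are on two of three voters.
        System.out.println(commitIndex(new long[] {4, 2, 3}));
    }
}
```

This is also why a three-controller quorum tolerates one failure: two voters still form a majority.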

Raft vs ZooKeeper Comparison

| Aspect | ZooKeeper Era | KRaft Era |

|---|---|---|

| Failover Time | 30+ seconds | < 1 second |

| Metadata Consistency | Eventual | Strong |

| Operational Complexity | High (2 systems) | Low (1 system) |

| Max Partitions | ~200K | Millions |

| Network Hops | 2-3 | 1 |

Producer Durability and Exactly-Once

Durability Levels

stateDiagram-v2

[*] --> Producer: Send message

state "acks=0" as acks0 {

Producer --> Network: Fire & forget

Network --> [*]: No confirmation

}

state "acks=1" as acks1 {

Producer --> Leader: Send

Leader --> LogWrite: Append

LogWrite --> Producer: ACK

}

state "acks=all" as acksall {

Producer --> Leader: Send

Leader --> Replicate: Copy to ISRs

Replicate --> AllISRs: Wait for all

AllISRs --> Producer: ACK

}

classDef fast fill:#fff9c4,stroke:#f9a825,stroke-width:1px,color:#333333

classDef balanced fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333

classDef safe fill:#c8e6c9,stroke:#43a047,stroke-width:1px,color:#333333

class acks0 fast

class acks1 balanced

class acksall safe
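The three levels in the diagram can be summarized as a decision the leader makes for each write. The sketch below is a simplified model with hypothetical names. It assumes `replicasWithRecord` counts in-sync replicas that already hold the record, leader included, and it brings in the `min.insync.replicas` setting, which the diagram does not show but which governs when an `acks=all` write is rejected outright.

```java
final class AckPolicy {
    enum Outcome { ACK, WAIT, REJECT }

    /** Decides what the leader does with a write under the given acks setting. */
    static Outcome decide(String acks, int isrSize, int minInsyncReplicas, int replicasWithRecord) {
        switch (acks) {
            case "0":
                return Outcome.ACK; // fire and forget: the producer never waits
            case "1":
                // the leader's own append is enough
                return replicasWithRecord >= 1 ? Outcome.ACK : Outcome.WAIT;
            case "all":
                if (isrSize < minInsyncReplicas) {
                    return Outcome.REJECT; // too few in-sync replicas to accept the write safely
                }
                return replicasWithRecord >= isrSize ? Outcome.ACK : Outcome.WAIT;
            default:
                throw new IllegalArgumentException("unknown acks value: " + acks);
        }
    }

    public static void main(String[] args) {
        System.out.println(decide("all", 3, 2, 2)); // one in-sync follower has not caught up yet
    }
}
```

The point to take away is that `acks=all` waits for the current in-sync set, not for every assigned replica, so its guarantee is only as strong as the minimum in-sync size you configure.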

Exactly-Once Semantics

// Initialize transactional producer

producer.initTransactions();

try {

// Start transaction

producer.beginTransaction();

// Send multiple messages atomically

producer.send(new ProducerRecord<>("accounts", "debit", amount));

producer.send(new ProducerRecord<>("accounts", "credit", amount));

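// Send consumer offsets in the same transaction
// (consumer is the KafkaConsumer that read the input records;
// the String group-id overload is not available in current clients)

producer.sendOffsetsToTransaction(offsets, consumer.groupMetadata());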

// Commit atomically

producer.commitTransaction();

} catch (ProducerFencedException | OutOfOrderSequenceException e) {

// Fatal errors - this producer was fenced or is in an unrecoverable state

producer.close();

} catch (KafkaException e) {

// Recoverable error - abort so the whole transaction can be retried

producer.abortTransaction();

}
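The `OutOfOrderSequenceException` handled above comes from the sequence check behind idempotent producers. A rough sketch of that check, with hypothetical names: the broker remembers the last sequence number it accepted from each producer and accepts only the next one. Real brokers track this per producer id, epoch, and partition, and per batch rather than per record. The sketch keeps one counter per producer id.

```java
import java.util.HashMap;
import java.util.Map;

final class SequenceTracker {
    enum Result { APPENDED, DUPLICATE, OUT_OF_ORDER }

    // producer id -> last sequence number accepted from that producer
    private final Map<Long, Integer> lastSequence = new HashMap<>();

    /** Accepts the write only if it carries the next expected sequence number. */
    Result tryAppend(long producerId, int sequence) {
        int last = lastSequence.getOrDefault(producerId, -1);
        if (sequence <= last) {
            return Result.DUPLICATE;      // a retry of something already written: drop it
        }
        if (sequence != last + 1) {
            return Result.OUT_OF_ORDER;   // a gap means an earlier write went missing
        }
        lastSequence.put(producerId, sequence);
        return Result.APPENDED;
    }
}
```

Retries after a lost acknowledgment therefore land in the `DUPLICATE` branch instead of writing the record twice, which is the building block transactions rely on.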

Performance Optimizations

JVM and OS Tuning

# JVM Settings for Kafka (Java 17)

export KAFKA_HEAP_OPTS="-Xms6g -Xmx6g"

export KAFKA_JVM_PERFORMANCE_OPTS="-XX:+UseG1GC \

-XX:MaxGCPauseMillis=20 \

-XX:InitiatingHeapOccupancyPercent=35 \

-XX:+ExplicitGCInvokesConcurrent \

-XX:+UseStringDeduplication"

# OS Tuning (Linux)

# Increase file descriptors

ulimit -n 100000

# Network tuning

sysctl -w net.core.rmem_max=134217728

sysctl -w net.core.wmem_max=134217728

sysctl -w net.ipv4.tcp_rmem="4096 87380 134217728"

sysctl -w net.ipv4.tcp_wmem="4096 65536 134217728"

# Disk scheduler for SSDs (use "noop" on kernels older than 5.0; sda is an example device)

echo none > /sys/block/sda/queue/scheduler

Thread Model

classDiagram

class KafkaBroker {

+acceptorThreads: Thread[]

+networkThreads: Thread[]

+ioThreads: Thread[]

+backgroundThreads: Thread[]

}

class AcceptorThread {

+port: Int

+accept(): SocketChannel

}

class NetworkThread {

+selector: Selector

+processRequests(): void

+sendResponses(): void

}

class IOThread {

+requestQueue: BlockingQueue

+handleProduce(): void

+handleFetch(): void

}

class BackgroundThread {

+logCleaner: LogCleaner

+replicaFetcher: ReplicaFetcher

+performMaintenance(): void

}

KafkaBroker "1" *-- "1+" AcceptorThread

KafkaBroker "1" *-- "N" NetworkThread

KafkaBroker "1" *-- "M" IOThread

KafkaBroker "1" *-- "many" BackgroundThread
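The handoff between network threads and I/O threads in the diagram can be sketched with a bounded queue and a fixed pool of handlers. This is a toy model with hypothetical names: strings stand in for requests, the calling thread plays the network thread, and responses are sorted at the end only to make the output deterministic.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class RequestPipeline {
    /** Pushes requests through a shared queue to `ioThreads` handlers and returns sorted responses. */
    static List<String> process(List<String> requests, int ioThreads) {
        BlockingQueue<String> requestQueue = new ArrayBlockingQueue<>(Math.max(1, requests.size()));
        Queue<String> responses = new ConcurrentLinkedQueue<>();
        CountDownLatch done = new CountDownLatch(requests.size());
        ExecutorService handlers = Executors.newFixedThreadPool(ioThreads);
        for (int i = 0; i < ioThreads; i++) {
            handlers.execute(() -> {
                try {
                    while (true) {
                        String request = requestQueue.take(); // blocks until work arrives
                        responses.add("handled:" + request);
                        done.countDown();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // shutdown signal
                }
            });
        }
        try {
            for (String request : requests) {
                requestQueue.put(request); // the "network thread" side of the handoff
            }
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            handlers.shutdownNow(); // interrupts handlers blocked in take()
        }
        List<String> sorted = new ArrayList<>(responses);
        Collections.sort(sorted);
        return sorted;
    }
}
```

The bounded queue matters: when handlers fall behind, `put` blocks and the network side stops reading, which pushes back-pressure toward clients instead of growing memory without limit.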

Quotas and Multi-Tenancy

Resource Management

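# Producer quota: 1 MB/s for user alice (localhost:9092 is a placeholder broker address)

bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter \

--add-config 'producer_byte_rate=1048576' \

--entity-type users --entity-name alice

# Consumer quota: 2 MB/s for user bob

bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter \

--add-config 'consumer_byte_rate=2097152' \

--entity-type users --entity-name bob

# Request quota: percentage of request-handling thread time for client mobile-app

bin/kafka-configs.sh --bootstrap-server localhost:9092 --alter \

--add-config 'request_percentage=50' \

--entity-type clients --entity-name mobile-app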
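When a client exceeds a byte-rate quota, the broker slows it down by delaying responses. A simplified model of how long that delay needs to be: enough that the bytes already sent, averaged over the measurement window plus the delay, fall back to the quota. The sketch below is that model only and makes no claim about the broker's exact formula; the names and the window length are assumptions.

```java
final class QuotaThrottle {
    /**
     * Delay in milliseconds that brings the average rate back to the quota.
     * Derived from observed * window / (window + delay) = quota.
     */
    static long throttleMs(double observedBytesPerSec, double quotaBytesPerSec, long windowMs) {
        if (observedBytesPerSec <= quotaBytesPerSec) {
            return 0; // within quota: no delay
        }
        double excess = observedBytesPerSec - quotaBytesPerSec;
        return Math.round(excess / quotaBytesPerSec * windowMs);
    }

    public static void main(String[] args) {
        // A client sending 2 MB/s against the 1 MB/s quota above, measured over an assumed 1 s window.
        System.out.println(throttleMs(2_097_152, 1_048_576, 1000) + " ms");
    }
}
```

Delaying rather than rejecting keeps well-behaved clients simple: they see slower responses and naturally back off, with no error handling needed.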

Low-Level Architecture Review

How does Kafka achieve high throughput?

Through sequential I/O, zero-copy transfers, batching, compression, and leveraging OS page cache instead of managing its own cache.

What makes KRaft better than ZooKeeper?

Native integration, sub-second failover, support for millions of partitions, reduced operational complexity, and strong consistency guarantees.

How does exactly-once work?

Idempotent producers with sequence numbers, transactional APIs for atomic writes across partitions, and transaction coordinators managing state.

Why is batching critical?

Amortizes network overhead, improves compression ratios, enables efficient sequential writes, and maximizes throughput.

What are the durability trade-offs?

- acks=0: Maximum speed, no durability

- acks=1: Balanced performance and safety

- acks=all: Maximum durability, higher latency

How does Kafka handle slow consumers?

Pull-based model prevents overwhelming consumers, quotas limit resource usage, and consumer lag monitoring enables proactive intervention.

Best Practices for 2025

- Leverage KRaft - Simpler operations, better performance

- Use Java 17 - Modern GC, better performance

- Enable compression - LZ4 for speed, ZSTD for ratio

- Tune OS settings - File descriptors, network buffers

- Monitor everything - JMX metrics are comprehensive

- Plan capacity - Disk, network, CPU in that order

- Test failure scenarios - Validate durability settings

- Use transactions wisely - Only when needed

- Optimize batch sizes - Based on message patterns

- Keep producers close - Minimize network latency

Related Content

- What is Kafka?

- Kafka Architecture

- Kafka Topic Architecture

- Kafka Consumer Architecture

- Kafka Producer Architecture

- Kafka Low Level Design

- Kafka Log Compaction

- Kafka and Schema Registry

- Kafka Ecosystem

- Kafka vs. JMS

- Kafka versus Kinesis

- Kafka Command Line Tutorial

- Kafka Failover Tutorial

About Cloudurable

Master Kafka’s internals with expert guidance. Cloudurable provides Kafka training, Kafka consulting, Kafka support and helps setting up Kafka clusters in AWS.
