January 9, 2025

🚀 What’s New in This 2025 Update

Major Updates and Changes

- KRaft-Based Metadata Management - Direct partition control without ZooKeeper

- Raft Consensus for the Controller Quorum - Deterministic, fast controller failover

- Enhanced ISR Management - Real-time replica state tracking

- Faster Topic Operations - Reduced metadata propagation delays

- Improved Partition Assignment - Efficient rebalancing strategies

- Centralized Controller - Single source of truth for metadata

Deprecated Features

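- ❌ ZooKeeper-based controller election - Replaced by the Raft-based KRaft controller quorum

- ❌ ZooKeeper-based metadata management - Deprecated since Kafka 3.5 and removed in Kafka 4.0, where KRaft is mandatory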

- ❌ ZooKeeper-based tool options (--zookeeper) - Admin tools such as kafka-reassign-partitions.sh connect with --bootstrap-server

Ready to master the backbone of Kafka’s scalability? Let’s explore how topics and partitions power distributed streaming.

Kafka Topic Architecture - Replication, Failover and Parallel Processing

```mermaid
mindmap
  root((Kafka Topic Architecture))
    Topics & Logs
      Named Streams
      Partitioned Logs
      Segment Files
      Retention Policies
    Partitions
      Parallel Processing
      Ordered Sequences
      Key-Based Routing
      Load Distribution
    Replication
      Leader-Follower Model
      ISR Management
      Fault Tolerance
      Data Durability
    KRaft Integration
      Raft Consensus
      Metadata Management
      Leader Election
      Controller Quorum
```

Building on Kafka Architecture, this deep dive reveals how Kafka achieves massive scale through intelligent partitioning and rock-solid replication.

Topics transform into distributed, fault-tolerant logs through partitions—the secret to Kafka’s performance and reliability.

Kafka Topics, Logs, and Partitions

A Kafka topic represents a named stream of records—think orders, payments, or user-events. But here’s where it gets powerful:

Topics → Partitions (logs) → Segments

Each topic is split into partitions, and each partition is its own append-only log. The building blocks:

- Partitions - Parallel units of scale

- Segments - Time- or size-based files that make up each partition's log

- Replicas - Copies of each partition for fault tolerance
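The following is a minimal in-memory sketch of one partition's log that makes the topic → partition → segment hierarchy concrete. It is a hypothetical model, not Kafka's storage code. The class name `PartitionLog` and the record-count roll limit are assumptions. The roll limit stands in for Kafka's size- and time-based segment settings such as `segment.bytes`. Segments are keyed by base offset, which mirrors how Kafka names segment files after the first offset they contain.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of one partition's log: records get sequential offsets,
// and the log rolls to a new segment after a fixed number of records
// (a stand-in for Kafka's size/time-based segment limits).
final class PartitionLog {
    private final int maxRecordsPerSegment;
    // Segments keyed by base offset (offset of their first record).
    private final TreeMap<Long, List<String>> segments = new TreeMap<>();
    private long nextOffset = 0;

    PartitionLog(int maxRecordsPerSegment) {
        this.maxRecordsPerSegment = maxRecordsPerSegment;
    }

    // Appends a record to the active (last) segment and returns its offset.
    long append(String record) {
        Map.Entry<Long, List<String>> active = segments.lastEntry();
        if (active == null || active.getValue().size() >= maxRecordsPerSegment) {
            segments.put(nextOffset, new ArrayList<>()); // roll a new segment
            active = segments.lastEntry();
        }
        active.getValue().add(record);
        return nextOffset++;
    }

    // Finds the segment whose base offset is <= offset, then indexes into it.
    String read(long offset) {
        if (offset < 0 || offset >= nextOffset) {
            throw new IllegalArgumentException("offset out of range: " + offset);
        }
        Map.Entry<Long, List<String>> segment = segments.floorEntry(offset);
        return segment.getValue().get((int) (offset - segment.getKey()));
    }

    int segmentCount() {
        return segments.size();
    }

    public static void main(String[] args) {
        PartitionLog log = new PartitionLog(2);
        for (String event : List.of("Login", "Browse", "Purchase")) {
            System.out.println(log.append(event) + ": " + event);
        }
        System.out.println("segments = " + log.segmentCount()); // 2
        System.out.println(log.read(2)); // Purchase
    }
}
```

Because offsets never change once assigned, a reader only needs the base offset of each segment to find any record.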

Topics support publish-subscribe messaging with multiple consumer groups reading at their own pace. Partitions unlock:

- Speed - Parallel writes and reads

- Scalability - Distribute across servers

- Size - Exceed single server limits

Cloudurable provides Kafka training, Kafka consulting, Kafka support and helps set up Kafka clusters in AWS.

Kafka Topic Partitions: The Unit of Parallelism

Partitions are Kafka’s superpower. Each partition:

- Stores records in order of arrival

- Assigns offsets - Sequential IDs for each record

- Routes by key - Same key → Same partition (as long as the partition count does not change)

- Enables parallelism - Within a consumer group, each partition is read by at most one consumer

```mermaid
flowchart TB
    subgraph Topic["Topic: User Events"]
        subgraph P0["Partition 0"]
            R0[Offset 0: Login]
            R1[Offset 1: Browse]
            R2[Offset 2: Purchase]
        end
        subgraph P1["Partition 1"]
            R3[Offset 0: Signup]
            R4[Offset 1: Profile]
            R5[Offset 2: Settings]
        end
        subgraph P2["Partition 2"]
            R6[Offset 0: Search]
            R7[Offset 1: Cart]
            R8[Offset 2: Checkout]
        end
    end

    Producer -->|Key: user123| P0
    Producer -->|Key: user456| P1
    Producer -->|Key: user789| P2
    C1[Consumer 1] -->|Read| P0
    C2[Consumer 2] -->|Read| P1
    C3[Consumer 3] -->|Read| P2

    classDef partition fill:#e1bee7,stroke:#8e24aa,stroke-width:1px,color:#333333
    classDef record fill:#fff9c4,stroke:#f9a825,stroke-width:1px,color:#333333
    classDef client fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333
    class P0,P1,P2 partition
    class R0,R1,R2,R3,R4,R5,R6,R7,R8 record
    class Producer,C1,C2,C3 client
```

Step-by-Step Explanation:

- Producer routes records to partitions by key hash

- Each partition maintains its own offset sequence

- Consumers read partitions independently

- Ordering guaranteed within each partition

- Parallel processing across partitions
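The key-hash routing step can be sketched in plain Java. The sketch is modeled on the murmur2-based mapping that the Java producer's default partitioner applies to keyed records. It hashes the serialized key, clears the sign bit, and takes the result modulo the partition count. The class `KeyPartitioner` is hypothetical. Custom partitioners and null keys are ignored, and the partition numbers it prints need not match the diagram above.

```java
import java.nio.charset.StandardCharsets;

// Sketch of key-based partition selection, modeled on the murmur2 hash used
// by Kafka's Java producer for keyed records. Null keys and custom
// partitioners are out of scope.
final class KeyPartitioner {
    // 32-bit murmur2 with the seed used by Kafka's Java client.
    static int murmur2(byte[] data) {
        final int length = data.length;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = 0x9747b28c ^ length;

        for (int i = 0; i < length / 4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }

        // Mix in the trailing 1-3 bytes (intentional switch fall-through).
        switch (length % 4) {
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
        }

        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // Same key + same partition count -> same partition.
    static int partitionFor(String key, int numPartitions) {
        byte[] bytes = key.getBytes(StandardCharsets.UTF_8);
        return (murmur2(bytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"user123", "user456", "user789"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```

The result depends on `numPartitions`. Adding partitions to an existing topic can therefore send a key to a different partition than before.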

Partition Ordering and Cardinality

Key Concepts:

- Order per partition - Not across partitions

- Immutable sequences - Append-only design

- Sequential offsets - Unique within partition

- Structured commit log - Durable storage

Why This Matters:

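```java
import org.apache.kafka.clients.producer.ProducerRecord;

// Assumes `producer` is a KafkaProducer<String, Order> and order1..order3,
// orderA and orderB are Order values (Order is a hypothetical app type).

// Same key = same partition = preserved order
producer.send(new ProducerRecord<>("orders", "customer-123", order1));
producer.send(new ProducerRecord<>("orders", "customer-123", order2));
producer.send(new ProducerRecord<>("orders", "customer-123", order3));
// All three orders for customer-123 go to the same partition, in send order
// (idempotence, enabled by default since Kafka 3.0, keeps retries in order)

// Different keys = potentially different partitions = no ordering guarantee
producer.send(new ProducerRecord<>("orders", "customer-123", orderA));
producer.send(new ProducerRecord<>("orders", "customer-456", orderB));
// orderB might be processed before orderA
```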

Topic Partition Layout and Offsets

Kafka Topic Partition Replication

Fault tolerance through intelligent replication:

```mermaid
stateDiagram-v2
    [*] --> Leader: Initial State
    state Leader {
        [*] --> Receiving
        Receiving --> Replicating: Write to log
        Replicating --> Acknowledging: ISR followers fetch
        Acknowledging --> Committed: All ISR members synced
    }
    Leader --> FollowerPromotion: Leader fails
    state FollowerPromotion {
        [*] --> ElectingLeader
        ElectingLeader --> CheckingISRs: Active KRaft controller
        CheckingISRs --> PromoteISR: Pick a live ISR member
        PromoteISR --> NewLeader: Committed to metadata log
    }
    FollowerPromotion --> Leader: Resume operations

    classDef leader fill:#c8e6c9,stroke:#43a047,stroke-width:1px,color:#333333
    classDef election fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333
    class Leader,Receiving,Replicating,Acknowledging,Committed leader
    class FollowerPromotion,ElectingLeader,CheckingISRs,PromoteISR,NewLeader election
```

Step-by-Step Explanation:

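- Leader receives all writes for a partition

- Followers in the ISR fetch new records from the leader

- A record is committed once every ISR member has replicated it

- On leader failure, the active KRaft controller elects a new leader

- A live ISR member becomes the new leader; every ISR member already holds all committed records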
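Every ISR member already holds all committed records. The controller therefore does not need to rank replicas by progress when a leader fails. The sketch below is a simplified, hypothetical model of the usual selection rule. It takes the first replica in assignment order that is both alive and in the ISR. It falls back to an out-of-sync replica only when unclean election is enabled. Real controllers also handle preferred-leader rebalancing, controlled shutdown, and other cases omitted here.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Simplified model of partition leader selection after a failure.
final class LeaderElection {
    static Optional<Integer> electLeader(List<Integer> assignedReplicas,
                                         Set<Integer> isr,
                                         Set<Integer> liveBrokers,
                                         boolean uncleanAllowed) {
        // Clean election: first live replica (in assignment order) that is in the ISR.
        for (int replica : assignedReplicas) {
            if (isr.contains(replica) && liveBrokers.contains(replica)) {
                return Optional.of(replica);
            }
        }
        if (uncleanAllowed) {
            // Unclean election: any live replica, which may lack committed records.
            for (int replica : assignedReplicas) {
                if (liveBrokers.contains(replica)) {
                    return Optional.of(replica);
                }
            }
        }
        return Optional.empty(); // partition stays offline until an ISR member returns
    }

    public static void main(String[] args) {
        List<Integer> replicas = List.of(1, 2, 3);
        // Broker 1 (the old leader) died; brokers 2 and 3 are alive and in sync.
        System.out.println(electLeader(replicas, Set.of(2, 3), Set.of(2, 3), false)); // Optional[2]
    }
}
```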

Replication Configuration:

- Replication Factor - Total copies (leader + followers)

- Min ISRs (min.insync.replicas) - Minimum ISR size for acks=all writes to succeed

- Unclean Leader Election (unclean.leader.election.enable) - Allow/deny promotion of out-of-sync replicas
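The min-ISR setting only affects producers that use `acks=all`. The minimal sketch below models the admission rule; its class and method names are hypothetical. It shows why RF=3 with `min.insync.replicas=2` keeps accepting writes after one replica drops out of the ISR but rejects them after two.

```java
// Sketch of the acks=all admission rule: the leader accepts an acks=all write
// only while the ISR (leader included) has at least min.insync.replicas members.
final class MinIsrCheck {
    static boolean acceptsAcksAllWrite(int isrSize, int minInsyncReplicas) {
        return isrSize >= minInsyncReplicas;
    }

    // Replicas that can leave the ISR before acks=all writes are rejected.
    static int toleratedFailuresForWrites(int replicationFactor, int minInsyncReplicas) {
        return Math.max(0, replicationFactor - minInsyncReplicas);
    }

    public static void main(String[] args) {
        int rf = 3;
        int minIsr = 2;
        for (int isr = rf; isr >= 1; isr--) {
            System.out.println("ISR size " + isr + " -> acks=all accepted: "
                    + acceptsAcksAllWrite(isr, minIsr));
        }
        System.out.println("tolerated failures: " + toleratedFailuresForWrites(rf, minIsr)); // 1
    }
}
```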

Replication: Leaders, Followers, and ISRs with KRaft

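KRaft changes how Kafka manages replication metadata (partition leaders, replica sets, and ISRs), while followers still replicate data from leaders as before: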

```mermaid
classDiagram
    class KRaftController {
        +metadata: ClusterMetadata
        +raftLog: RaftLog
        +electLeader(partition): Leader
        +updateISRs(partition, replicas): void
        +handleBrokerFailure(brokerId): void
    }
    class PartitionMetadata {
        +topic: string
        +partitionId: int
        +leader: Broker
        +replicas: Broker[]
        +isr: Broker[]
        +leaderEpoch: int
    }
    class Broker {
        +brokerId: int
        +host: string
        +port: int
        +rack: string
        +isAlive(): boolean
    }
    class RaftConsensus {
        +currentTerm: int
        +votedFor: int
        +log: Entry[]
        +requestVote(): boolean
        +appendEntries(): boolean
    }
    KRaftController "1" --> "many" PartitionMetadata : manages
    PartitionMetadata "1" --> "many" Broker : tracks
    KRaftController "1" --> "1" RaftConsensus : uses
```

KRaft Benefits:

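- Faster controller failover - Standby controllers already hold the metadata log, so a new active controller takes over without reloading state from ZooKeeper

- Strong consistency - Raft allows at most one active controller per epoch

- Consistent ISR tracking - ISR changes are committed to the metadata log and propagated to every broker

- No split-brain - Stale controllers and leaders are fenced by epoch numbers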
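Epoch fencing is what turns "single leader" into "no split-brain". Each leadership change bumps a monotonically increasing number, and receivers ignore any request stamped with an older one. The sketch below is a generic illustration of that idea; Raft terms and Kafka's leader epochs follow the same pattern. `EpochFence` is a hypothetical class, not Kafka code.

```java
// Generic epoch fencing: a receiver remembers the highest epoch it has seen
// and rejects requests from senders with an older epoch.
final class EpochFence {
    private int currentEpoch = 0;

    // Returns true if the request is accepted.
    synchronized boolean accept(int requestEpoch) {
        if (requestEpoch < currentEpoch) {
            return false; // fenced: the sender is a stale ("zombie") leader
        }
        currentEpoch = requestEpoch;
        return true;
    }

    public static void main(String[] args) {
        EpochFence fence = new EpochFence();
        System.out.println(fence.accept(1)); // old leader, epoch 1: true
        System.out.println(fence.accept(2)); // new leader elected, epoch 2: true
        System.out.println(fence.accept(1)); // old leader comes back: false
    }
}
```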

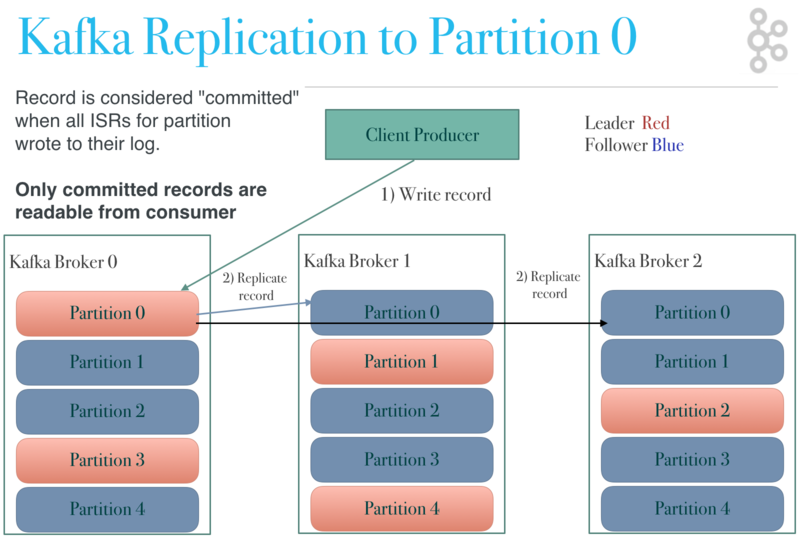

Replication to Partition 0

A record is “committed” only after every replica in the ISR has replicated it. Consumers can read only committed records, so they never see data that a leader failover could lose.
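In practice, committed means below the partition's high watermark, which is the smallest log end offset among ISR members. The minimal sketch below uses hypothetical names and made-up offsets. It shows how dropping a slow replica from the ISR lets the high watermark advance.

```java
import java.util.Map;
import java.util.Set;

// Sketch: high watermark = minimum log end offset (LEO) across the ISR.
// Offsets below the high watermark are committed and readable by consumers.
final class HighWatermark {
    // logEndOffsets: replica id -> log end offset (next offset it would write).
    static long highWatermark(Map<Integer, Long> logEndOffsets, Set<Integer> isr) {
        return isr.stream()
                .mapToLong(logEndOffsets::get)
                .min()
                .orElseThrow(() -> new IllegalArgumentException("empty ISR"));
    }

    public static void main(String[] args) {
        Map<Integer, Long> leo = Map.of(1, 10L, 2, 8L, 3, 5L);
        System.out.println(highWatermark(leo, Set.of(1, 2, 3))); // 5: replica 3 is furthest behind
        System.out.println(highWatermark(leo, Set.of(1, 2)));    // 8: after replica 3 leaves the ISR
    }
}
```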

Replication to Partition 1

Different partitions can have leaders on different brokers, distributing load across the cluster.

Modern Partition Management with KRaft

```mermaid
flowchart TB
    subgraph KRaftQuorum["KRaft Controller Quorum"]
        KC1[Controller 1<br>Leader]
        KC2[Controller 2<br>Follower]
        KC3[Controller 3<br>Follower]
    end
    subgraph Operations["Topic Operations"]
        Create[Create Topic]
        Alter[Alter Partitions]
        Delete[Delete Topic]
        Reassign[Reassign Replicas]
    end
    subgraph Brokers["Kafka Brokers"]
        B1[Broker 1]
        B2[Broker 2]
        B3[Broker 3]
        B4[Broker 4]
    end

    Operations -->|Request| KC1
    KC1 -->|Raft Log| KC2
    KC1 -->|Raft Log| KC3
    KC1 -->|Metadata Update| B1
    KC1 -->|Metadata Update| B2
    KC1 -->|Metadata Update| B3
    KC1 -->|Metadata Update| B4

    classDef controller fill:#e1bee7,stroke:#8e24aa,stroke-width:2px,color:#333333
    classDef operation fill:#fff9c4,stroke:#f9a825,stroke-width:1px,color:#333333
    classDef broker fill:#bbdefb,stroke:#1976d2,stroke-width:1px,color:#333333
    class KC1,KC2,KC3 controller
    class Create,Alter,Delete,Reassign operation
    class B1,B2,B3,B4 broker
```

Step-by-Step Explanation:

- All topic operations go through the active KRaft controller

- The controller replicates each change to the quorum via the Raft log

- Brokers fetch committed metadata records, so every broker converges on the same state

- Raft keeps a single, ordered, consistent metadata log

- No ZooKeeper dependency
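The controller quorum follows Raft's majority rule: a metadata change commits once a majority of voters has it. The small helper below is hypothetical. It shows why the three-controller quorum in the diagram survives one controller failure, and why odd quorum sizes are the usual choice.

```java
// Raft-style majority math for a controller quorum with n voters.
final class QuorumMath {
    // Votes needed to elect a controller leader or commit a metadata record.
    static int majority(int voters) {
        return voters / 2 + 1;
    }

    // Controller failures the quorum survives while still making progress.
    static int toleratedFailures(int voters) {
        return voters - majority(voters);
    }

    public static void main(String[] args) {
        for (int voters : new int[] {1, 3, 4, 5}) {
            System.out.println(voters + " controllers: majority " + majority(voters)
                    + ", tolerates " + toleratedFailures(voters) + " failure(s)");
        }
    }
}
```

A fourth controller raises the majority from 2 to 3 without tolerating any extra failure, which is why quorums of 3 or 5 are typical.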

Best Practices for Topics and Partitions in 2025

1. Right-Size Your Partitions

```yaml
# Good: Balanced partition count
topics:
  user-events:
    partitions: 50 # Matches expected consumer count
    replication: 3
    min.insync.replicas: 2
```

2. Favor Fewer Topics, More Partitions

- Prefer: 1 topic with 100 partitions

- Avoid: 100 topics with 1 partition each

- Reason: Lower metadata overhead

3. Plan for Peak Load

- Partitions ≥ the maximum number of consumers expected in one group

- Account for growth (2x headroom), since adding partitions later remaps keys

- Monitor consumer lag continuously
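The sketch below shows one way to turn these rules into a number. It is a hypothetical heuristic, not an official Kafka formula. The `perPartitionMBps` input must come from your own benchmarks, and the sample inputs are invented for illustration.

```java
// Hypothetical partition-count heuristic (not an official Kafka formula).
final class PartitionPlanner {
    static int plan(int maxConsumers, double peakMBps, double perPartitionMBps, double headroom) {
        // Partitions needed just to carry peak throughput.
        int forThroughput = (int) Math.ceil(peakMBps / perPartitionMBps);
        // Enough partitions for every consumer in the largest group to get work.
        int base = Math.max(maxConsumers, forThroughput);
        // Growth headroom, since adding partitions later remaps keys.
        return (int) Math.ceil(base * headroom);
    }

    public static void main(String[] args) {
        // Invented inputs: 20 consumers, 150 MB/s peak, 10 MB/s per partition, 2x headroom.
        System.out.println(plan(20, 150.0, 10.0, 2.0)); // 40
    }
}
```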

4. Optimize Replication

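- RF=3 for production (three copies of every partition)

- min.insync.replicas=2 with RF=3 (acks=all writes continue with one replica down, and every acknowledged write exists on at least two replicas)

- Rack-aware placement (broker.rack) for AZ failures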
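Rack-aware placement means each partition's replicas land in different racks, for example availability zones set via `broker.rack`. The sketch below is a simplified, hypothetical strategy loosely inspired by Kafka's rack-aware assignment, not its exact algorithm. It interleaves brokers by rack, then gives each partition a rotating window of consecutive brokers. It assumes the replication factor does not exceed the broker count and that racks have similar broker counts.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified rack-aware replica placement (not Kafka's exact algorithm).
final class RackAwarePlacement {
    // Orders brokers so that consecutive entries come from different racks.
    static List<Integer> rackAlternatedBrokers(Map<Integer, String> brokerRacks) {
        Map<String, Deque<Integer>> byRack = new TreeMap<>();
        new TreeMap<>(brokerRacks).forEach((broker, rack) ->
                byRack.computeIfAbsent(rack, r -> new ArrayDeque<>()).add(broker));
        List<Integer> ordered = new ArrayList<>();
        while (ordered.size() < brokerRacks.size()) {
            for (Deque<Integer> rackBrokers : byRack.values()) {
                if (!rackBrokers.isEmpty()) {
                    ordered.add(rackBrokers.poll());
                }
            }
        }
        return ordered;
    }

    // One replica list per partition; the first replica is the preferred leader.
    static List<List<Integer>> assign(Map<Integer, String> brokerRacks, int partitions, int replicationFactor) {
        List<Integer> brokers = rackAlternatedBrokers(brokerRacks);
        if (replicationFactor > brokers.size()) {
            throw new IllegalArgumentException("replication factor exceeds broker count");
        }
        List<List<Integer>> assignment = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            List<Integer> replicas = new ArrayList<>();
            for (int i = 0; i < replicationFactor; i++) {
                // Rotating the start spreads preferred leaders across brokers.
                replicas.add(brokers.get((p + i) % brokers.size()));
            }
            assignment.add(replicas);
        }
        return assignment;
    }

    public static void main(String[] args) {
        Map<Integer, String> racks = Map.of(
                1, "az-a", 2, "az-b", 3, "az-c",
                4, "az-a", 5, "az-b", 6, "az-c");
        List<List<Integer>> assignment = assign(racks, 6, 3);
        for (int p = 0; p < assignment.size(); p++) {
            System.out.println("partition " + p + " -> brokers " + assignment.get(p));
        }
    }
}
```

Rotating the starting broker also puts partition leaders on different brokers, which spreads leader load across the cluster.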

5. Key Design Strategies

- Ensure even distribution - Avoid hot partitions

- Use semantic keys - customer-id, user-id

- Consider ordering needs - Same key for related events
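A quick way to check for hot partitions is to replay a sample of real keys through a hash and compare the busiest partition with the average. This hypothetical sketch uses `String.hashCode` as a stand-in for the producer's murmur2 hash. It therefore demonstrates the method, not the exact partitions your producer would choose.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical hot-partition check. String.hashCode stands in for the
// producer's real murmur2 hash, so partition numbers will differ from Kafka's.
final class KeySkew {
    static int[] countsPerPartition(List<String> keys, int numPartitions) {
        int[] counts = new int[numPartitions];
        for (String key : keys) {
            counts[Math.floorMod(key.hashCode(), numPartitions)]++;
        }
        return counts;
    }

    // Busiest partition divided by the average: 1.0 is perfectly even,
    // numPartitions means every record landed on a single partition.
    static double skew(int[] counts) {
        int max = 0;
        long total = 0;
        for (int c : counts) {
            max = Math.max(max, c);
            total += c;
        }
        double mean = (double) total / counts.length;
        return mean == 0 ? 0.0 : max / mean;
    }

    public static void main(String[] args) {
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < 90; i++) keys.add("tenant-big"); // one dominant key
        for (int i = 0; i < 10; i++) keys.add("tenant-" + i);
        System.out.printf("skew = %.2f%n", skew(countsPerPartition(keys, 10)));
    }
}
```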

6. Monitor Critical Metrics

- Under-replicated partitions

- ISR shrink/expand rate

- Leader election frequency

- Consumer lag per partition, by consumer group
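Consumer lag per partition is the partition's log end offset minus the group's committed offset. This is the same LOG-END-OFFSET minus CURRENT-OFFSET arithmetic that `kafka-consumer-groups.sh --describe` reports. The sketch uses hypothetical names and sample offsets. It treats a partition with no committed offset as committed at 0, which is an assumption; real behavior depends on `auto.offset.reset`.

```java
import java.util.Map;

// Consumer lag = log end offset - committed offset, per partition.
final class ConsumerLag {
    // Lag for one partition; never negative.
    static long lag(long logEndOffset, long committedOffset) {
        return Math.max(0L, logEndOffset - committedOffset);
    }

    // Sums lag across partitions. Missing committed offsets count as 0
    // (an assumption for this sketch).
    static long totalLag(Map<Integer, Long> logEndOffsets, Map<Integer, Long> committedOffsets) {
        long total = 0;
        for (Map.Entry<Integer, Long> e : logEndOffsets.entrySet()) {
            total += lag(e.getValue(), committedOffsets.getOrDefault(e.getKey(), 0L));
        }
        return total;
    }

    public static void main(String[] args) {
        Map<Integer, Long> logEnd = Map.of(0, 1_000L, 1, 950L, 2, 1_200L);
        Map<Integer, Long> committed = Map.of(0, 990L, 1, 950L, 2, 700L);
        System.out.println("total lag = " + totalLag(logEnd, committed)); // 510
    }
}
```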

Kafka Topic Architecture Review

What is an ISR?

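An In-Sync Replica is a replica that has caught up with the leader's log within replica.lag.time.max.ms. The leader shrinks and expands the ISR as followers fall behind or catch up, and KRaft commits each change to the metadata log, so the controller can fail over to any live ISR member without losing committed records.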
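ISR membership can be modeled as a simple time check. The sketch below is a simplified, hypothetical version of the rule. A follower counts as in sync if it has reached the leader's log end offset now or within the last `replica.lag.time.max.ms`. The class and field names are invented, and the timestamps are sample values.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Simplified ISR computation based on how recently each follower caught up.
final class IsrTracker {
    static final class FollowerState {
        final long logEndOffset;       // next offset the follower would write
        final long lastCaughtUpTimeMs; // last time it reached the leader's log end

        FollowerState(long logEndOffset, long lastCaughtUpTimeMs) {
            this.logEndOffset = logEndOffset;
            this.lastCaughtUpTimeMs = lastCaughtUpTimeMs;
        }
    }

    static Set<Integer> computeIsr(int leaderId, long leaderLogEndOffset,
                                   Map<Integer, FollowerState> followers,
                                   long nowMs, long replicaLagTimeMaxMs) {
        Set<Integer> isr = new TreeSet<>();
        isr.add(leaderId); // the leader is always in its own ISR
        followers.forEach((id, f) -> {
            boolean caughtUpNow = f.logEndOffset == leaderLogEndOffset;
            boolean recentlyCaughtUp = nowMs - f.lastCaughtUpTimeMs <= replicaLagTimeMaxMs;
            if (caughtUpNow || recentlyCaughtUp) {
                isr.add(id);
            }
        });
        return isr;
    }

    public static void main(String[] args) {
        Map<Integer, FollowerState> followers = Map.of(
                2, new FollowerState(100, 0),      // fully caught up
                3, new FollowerState(80, 1_000));  // behind since t=1s
        System.out.println(computeIsr(1, 100, followers, 40_000, 30_000)); // [1, 2]
    }
}
```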

How does Kafka scale consumers?

Through partitions: each partition is assigned to exactly one consumer in a group, so more partitions allow more parallel consumers. Consumers beyond the partition count sit idle.
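Below is a simplified, hypothetical assignment for a single topic, in the spirit of Kafka's range assignor. Partitions are split into contiguous ranges, and the first consumers get one extra partition when the division is uneven. Any consumer beyond the partition count receives nothing.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Range-style assignment of one topic's partitions to a consumer group.
final class GroupAssignment {
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("group has no consumers");
        }
        List<String> sorted = new ArrayList<>(consumers);
        Collections.sort(sorted); // deterministic order across the group
        int perConsumer = numPartitions / sorted.size();
        int withExtra = numPartitions % sorted.size();
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int next = 0;
        for (int i = 0; i < sorted.size(); i++) {
            int count = perConsumer + (i < withExtra ? 1 : 0);
            List<Integer> partitions = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                partitions.add(next++);
            }
            result.put(sorted.get(i), partitions); // may be empty: an idle consumer
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(assign(List.of("c1", "c2", "c3"), 7)); // {c1=[0, 1, 2], c2=[3, 4], c3=[5, 6]}
        System.out.println(assign(List.of("c1", "c2", "c3", "c4"), 3)); // c4 gets []
    }
}
```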

What are leaders and followers?

Leaders handle all reads/writes for a partition. Followers replicate the leader’s log. KRaft ensures exactly one leader per partition through Raft consensus.

How does consumer failover work?

When a consumer dies, its partitions redistribute among surviving group members. The new KIP-848 protocol minimizes disruption during rebalancing.
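A small sketch illustrates the idea behind incremental rebalancing. Survivors keep the partitions they already own, and only the departed consumer's partitions move, each to the least-loaded survivor. This is a hypothetical illustration of minimizing partition movement, not the assignor that KIP-848 actually uses.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical incremental reassignment after one consumer leaves the group.
final class IncrementalReassign {
    static Map<String, List<Integer>> removeConsumer(Map<String, List<Integer>> current, String departed) {
        Map<String, List<Integer>> next = new TreeMap<>();
        current.forEach((consumer, partitions) -> {
            if (!consumer.equals(departed)) {
                next.put(consumer, new ArrayList<>(partitions)); // survivors keep their partitions
            }
        });
        if (next.isEmpty()) {
            throw new IllegalStateException("no surviving consumers");
        }
        for (int partition : current.getOrDefault(departed, List.of())) {
            String target = null;
            // TreeMap iterates in name order, so ties go to the first name.
            for (String consumer : next.keySet()) {
                if (target == null || next.get(consumer).size() < next.get(target).size()) {
                    target = consumer;
                }
            }
            next.get(target).add(partition);
        }
        return next;
    }

    public static void main(String[] args) {
        Map<String, List<Integer>> before = Map.of(
                "c1", List.of(0, 1), "c2", List.of(2, 3), "c3", List.of(4, 5));
        System.out.println(removeConsumer(before, "c2")); // {c1=[0, 1, 2], c3=[4, 5, 3]}
    }
}
```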

How does broker failover work?

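The active KRaft controller fences a broker when its heartbeats stop for longer than broker.session.timeout.ms (a controlled shutdown is handled sooner). It then elects new leaders for the affected partitions from their ISRs and commits the changes to the metadata log, so failover time is dominated by failure detection rather than by the election itself.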
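Failure detection is the time-dominant part of broker failover. The sketch below models session-based liveness in the spirit of brokers heartbeating to the active controller. The class name and sample timings are hypothetical, and the `sessionTimeoutMs` parameter plays the role of `broker.session.timeout.ms`.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Session-based liveness: a broker whose last heartbeat is older than the
// session timeout is treated as failed (fenced).
final class BrokerLiveness {
    static Set<Integer> fencedBrokers(Map<Integer, Long> lastHeartbeatMs, long nowMs, long sessionTimeoutMs) {
        Set<Integer> fenced = new TreeSet<>();
        lastHeartbeatMs.forEach((broker, last) -> {
            if (nowMs - last > sessionTimeoutMs) {
                fenced.add(broker);
            }
        });
        return fenced;
    }

    public static void main(String[] args) {
        // Sample heartbeat times in ms; broker 2 went silent at t=1000.
        Map<Integer, Long> lastHeartbeat = Map.of(1, 10_000L, 2, 1_000L, 3, 9_500L);
        System.out.println(fencedBrokers(lastHeartbeat, 12_000L, 9_000L)); // [2]
    }
}
```

The partitions led by each fenced broker then go through leader election from their remaining ISR members.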

Performance Tips

- Pre-create topics with proper partition counts

- Use compression at the producer level

- Tune segment sizes based on retention needs

- Keep unclean.leader.election.enable=false (the default) for data safety

- Place replicas across failure domains
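Several of these tips map directly to configuration keys. The sketch below collects them in plain `java.util.Properties`. The keys are real producer and topic settings. The values, and the placeholder `bootstrap.servers` address, are example choices to tune for your workload.

```java
import java.util.Properties;

// Example producer and topic settings; values are illustrative, not universal.
final class TunedConfigs {
    static Properties producerProps() {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", "localhost:9092"); // placeholder address
        p.setProperty("compression.type", "lz4");    // compress batches on the producer
        p.setProperty("acks", "all");                // wait for the full ISR
        p.setProperty("enable.idempotence", "true"); // avoid duplicates from retries
        p.setProperty("linger.ms", "10");            // brief wait to build larger batches
        return p;
    }

    static Properties topicConfigs() {
        Properties t = new Properties();
        t.setProperty("min.insync.replicas", "2");
        t.setProperty("unclean.leader.election.enable", "false"); // default, stated explicitly
        t.setProperty("segment.bytes", String.valueOf(512L * 1024 * 1024)); // 512 MiB segments
        t.setProperty("retention.ms", String.valueOf(7L * 24 * 60 * 60 * 1000)); // 7 days
        return t;
    }

    public static void main(String[] args) {
        System.out.println(producerProps());
        System.out.println(topicConfigs());
    }
}
```

The producer properties would typically be passed to `new KafkaProducer<>(props)` together with key and value serializers. The topic settings, converted to a `Map<String, String>`, would go to `NewTopic#configs` when creating the topic with the Admin client.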

Next Steps

Continue your journey with Kafka Producer Architecture to understand how producers intelligently route data to partitions.

Related Content

- What is Kafka?

- Kafka Architecture

- Kafka Topic Architecture

- Kafka Consumer Architecture

- Kafka Producer Architecture

- Kafka Low Level Design

- Kafka and Schema Registry

- Kafka Ecosystem

- Kafka vs. JMS

- Kafka versus Kinesis

- Kafka Command Line Tutorial

- Kafka Failover Tutorial

- Kafka Producer Java Example

- Kafka Consumer Java Example

About Cloudurable

Scale your Kafka deployment with confidence. Cloudurable provides Kafka training, Kafka consulting, Kafka support and helps set up Kafka clusters in AWS.
